
In the world of computer science, there's an age-old saying that’s always been adhered to: 'Garbage in, garbage out.' This nugget of wisdom hasn't lost its sheen in today's data-centric reality. If anything, it's become even more relevant. The fact of the matter is, without well-structured, pristine-quality data at your disposal, even the most innovative machine learning models will be destined to fall flat on their faces.

The magic happens in the world of data preprocessing, an absolutely vital stage where raw data is prepped and primed for analysis. It undergoes a makeover, so to speak, being cleaned, reshaped, and reformatted into a shape that's ready for analytical dissection. This refinement process allows the veiled patterns and hidden trends to step out of the shadows and into the spotlight. Therefore, prior to letting your data interact with a machine learning algorithm, you have to don the hat of a data janitor, diligently scrubbing, harmonizing, and cherry-picking features that will optimize the model's learning process.

This is where Python comes in. Known for its user-friendly nature and extensive range of libraries, Python is a strong ally when it comes to executing data preprocessing efficiently. In this post, we will guide you through data preprocessing with Python in five key stages. Whether you're an aspiring data analyst or venturing into machine learning, this step-by-step process should help you along the way.

Importing Necessary Libraries

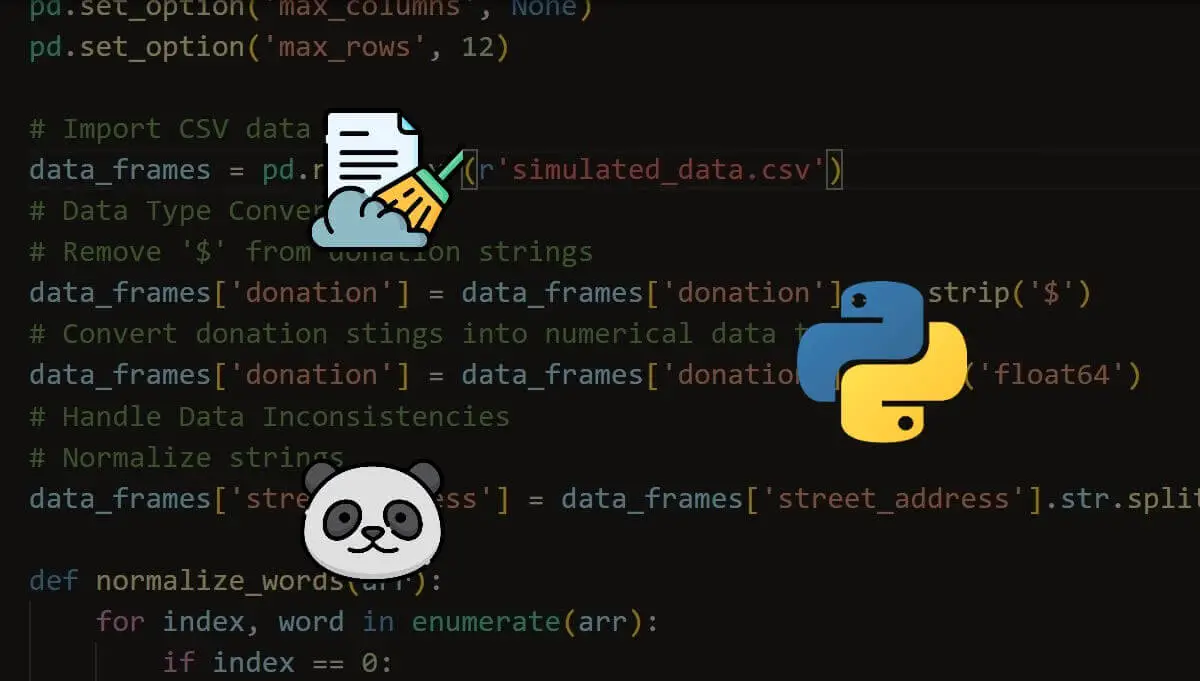

Before we can start digging into datasets, we need to equip ourselves with Python's data preprocessing tools. The good news is that Python has a fantastic ecosystem of libraries that will make our data cleaning and wrangling much easier. In this step, we'll import three essential packages - Pandas, NumPy, and scikit-learn.

Pandas is the bread and butter for working with tabular data in Python. It provides easy-to-use data structures and functions for exploring, manipulating, and analyzing datasets. NumPy supplements Pandas by adding support for fast mathematical operations on arrays. And scikit-learn contains a wealth of tools for data preprocessing and machine learning.

To import these libraries, simply run the following code:

import pandas as pd

import numpy as np

from sklearn import preprocessing

Now we have the full power of Pandas, NumPy, and scikit-learn at our fingertips. With this utility belt equipped, we can start wielding these tools to whip our datasets into shape.

Data Loading and Understanding

Now that our preprocessing libraries are imported, it's time to load our raw data and start getting familiar with it. If you later want to explore a dataset interactively, the Python Dash vs Streamlit comparison may be useful: both are frameworks for building interactive data apps and dashboards.

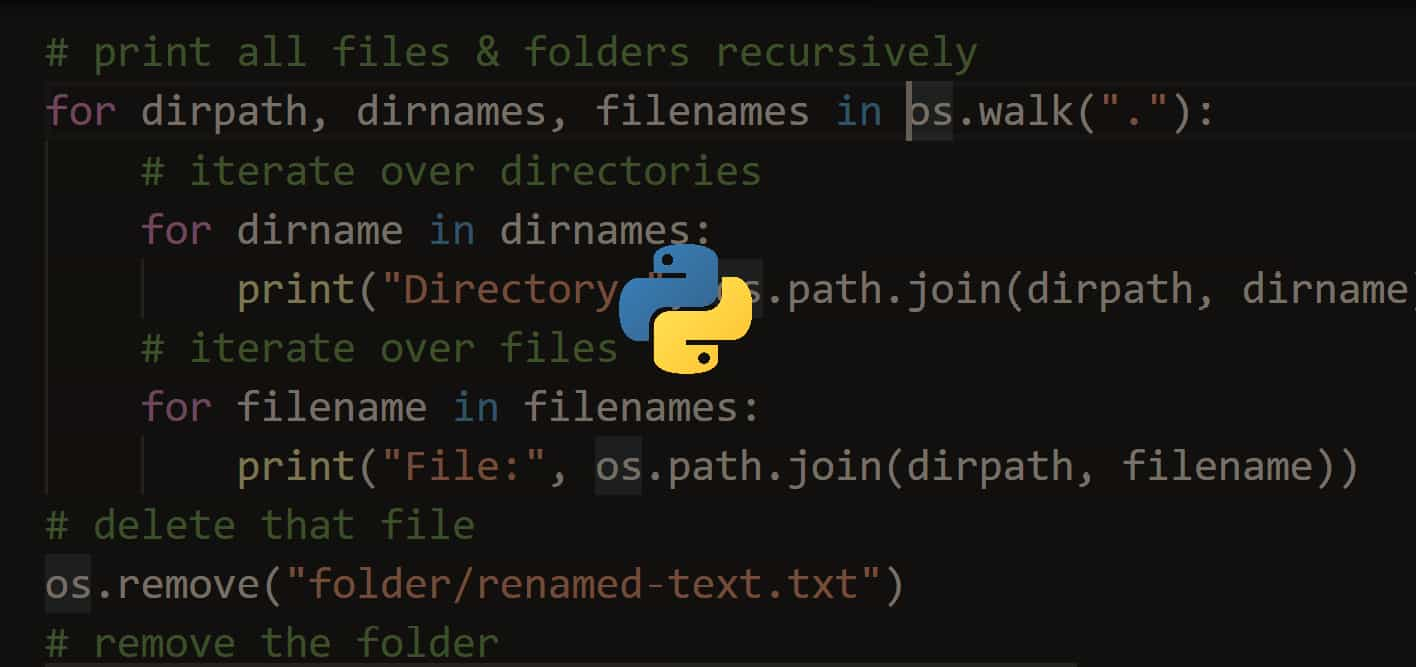

For now, we'll learn how to load datasets from different sources like CSV files, databases, and Excel spreadsheets into Pandas DataFrames. This structures our data into an easily analyzable tabular format.

We'll start by loading a sample CSV dataset containing housing price data for different regions. Using Pandas' read_csv() method makes this a breeze:

housing = pd.read_csv('housing.csv')

With our DataFrame loaded, we can now use Pandas' convenient methods like .head() and .info() to explore the data. Visualizing the data with plots and charts also provides useful insights. This high-level investigation allows us to understand the properties of each variable, spot errors or anomalies, and inform our preprocessing strategy.
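As a rough sketch of that first look, the snippet below builds a tiny stand-in for the housing data in memory and runs the usual inspection calls. The column names and values are made up for illustration, since the contents of housing.csv aren't shown here.

```python
import io

import pandas as pd

# Hypothetical stand-in for housing.csv; columns and values are invented.
csv_text = """region,median_income,house_age,price
North,4.2,15,250000
South,3.1,,180000
East,5.6,8,320000
West,,22,210000
"""

housing = pd.read_csv(io.StringIO(csv_text))

print(housing.head())        # first rows of the table
housing.info()               # column dtypes and non-null counts
print(housing.describe())    # summary statistics for numeric columns

# Count missing values per column to see what needs cleaning later.
missing_per_column = housing.isna().sum()
print(missing_per_column)
```

The per-column missing counts are a handy bridge to the next stage, because they show at a glance which columns will need attention.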

Getting familiar with our dataset is a key step before diving into the data-wrangling stages. By loading data and carrying out exploratory analysis, we can identify the cleaning, transforming, and encoding steps required to prepare the data for machine learning algorithms.
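CSV is only one of the sources mentioned above. For a database, one possible approach is Pandas' read_sql_query() function, sketched here against a throwaway in-memory SQLite database. The table name, columns, and values are assumptions made purely for illustration.

```python
import sqlite3

import pandas as pd

# Hypothetical in-memory database standing in for a real one.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE housing (region TEXT, price REAL)")
conn.executemany(
    "INSERT INTO housing VALUES (?, ?)",
    [("North", 250000.0), ("South", 180000.0)],
)
conn.commit()

# read_sql_query runs the query and returns the result as a DataFrame.
housing_db = pd.read_sql_query("SELECT region, price FROM housing", conn)
conn.close()

print(housing_db)
```

Excel files can be loaded in a similar way with pd.read_excel(), which needs an Excel engine such as openpyxl to be installed.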

Handling Missing Data

Now that we've loaded and explored our data, it's time to address one of the most common data preprocessing tasks - handling missing values. Most real-world datasets contain some amount of missing data due to human error, equipment malfunctions, or respondents skipping questions. If not addressed properly, missing data can reduce model accuracy and lead to misleading analytical insights. Some strategies for handling missing values in Python include:

- Deletion - Removing rows or columns containing missing values

- Imputation - Replacing missing values with the mean, median, or mode

- Modeling - Using machine learning to predict missing values based on other columns

For example, we can use Pandas' .dropna() method to easily remove rows with missing data. Alternatively, we can employ scikit-learn's SimpleImputer class to fill in missing values with the median or mode. The best approach depends on the amount and type of missing data. Properly handling missing values ensures our data is complete and prevents misleading models.
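Here is a minimal sketch of the deletion and imputation strategies side by side. The DataFrame is a made-up example, and the median strategy is just one of the options SimpleImputer offers.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

# Hypothetical data with gaps; values are invented for illustration.
df = pd.DataFrame({
    "house_age": [15.0, np.nan, 8.0, 22.0],
    "price": [250000.0, 180000.0, np.nan, 210000.0],
})

# Deletion: keep only rows with no missing values.
dropped = df.dropna()

# Imputation: replace each gap with its column's median.
imputer = SimpleImputer(strategy="median")
imputed = pd.DataFrame(imputer.fit_transform(df), columns=df.columns)

print(dropped)
print(imputed)
```

Deletion halves this tiny dataset, while imputation keeps every row. That trade-off is usually what drives the choice between the two.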

Data Transformation

Once the missing data is handled, the next step is data transformation. This process involves modifying the data in a way that enhances its suitability for analysis or model building.

One type of transformation is scaling, which is crucial when dealing with features that exist on different scales. Many machine learning algorithms, particularly distance-based and gradient-based ones, can be biased towards variables with higher magnitudes. Think of a dataset that includes age (a two-digit number) and salary (a five- or six-digit number).
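To make the age and salary example concrete, the sketch below computes the Euclidean distance between two hypothetical people, the kind of calculation a distance-based algorithm such as k-nearest neighbours relies on. The numbers are invented. The point is only to show how much of the distance comes from salary.

```python
import numpy as np

# Two hypothetical people described as [age, salary]; values are invented.
person_a = np.array([25.0, 50000.0])
person_b = np.array([60.0, 51000.0])

# Per-feature gaps: a 35-year age difference vs. a 1,000 salary difference.
age_gap, salary_gap = np.abs(person_a - person_b)

# Euclidean distance on the raw, unscaled values.
distance = np.linalg.norm(person_a - person_b)

# Share of the squared distance contributed by salary alone.
salary_share = salary_gap**2 / distance**2

print(f"distance = {distance:.1f}, salary share = {salary_share:.4f}")
```

Even though the age gap is large in human terms and the salary gap is small, salary accounts for more than 99% of the squared distance on the raw values.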

To prevent this bias, we can scale all variables to have the same range. The MinMaxScaler from the scikit-learn library scales the data between a given minimum and maximum (usually 0 and 1):

scaler = preprocessing.MinMaxScaler()

data['column_name'] = scaler.fit_transform(data[['column_name']])

Another transformation is normalization, which adjusts the distribution of data. Certain machine learning algorithms assume that the input data is normally distributed, or follows a Gaussian distribution. If the data does not meet this assumption, we can use the PowerTransformer from scikit-learn to make it more Gaussian-like:

norm = preprocessing.PowerTransformer()

data['column_name'] = norm.fit_transform(data[['column_name']])

Encoding Categorical Data

Most interesting datasets contain categorical variables like country, genre, or status. Machine learning algorithms prefer numerical data, so encoding categoricals is crucial. In this step, we'll learn techniques for converting categorical columns into numeric formats.

The two main encoding methods are:

- One-hot encoding - Creating new columns indicating the presence (1) or absence (0) of each category value.

- Label encoding - Assigning a numeric code to each unique category label.

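In Python, we can implement one-hot encoding using Pandas' get_dummies() function or scikit-learn's OneHotEncoder class. For label encoding, scikit-learn's LabelEncoder works well, though it is designed for target labels; for input features, OrdinalEncoder is the equivalent tool.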

For example, to one-hot encode a "genre" column containing "pop", "rock" and "jazz", we would create three new columns indicating the presence of each genre. Choosing the right encoding approach depends on the downstream uses of the data: label encoding implies an ordering among categories that may not exist, so one-hot encoding is usually the safer choice for unordered categories like genre. By properly encoding categoricals, we prepare our data for modeling success.
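The genre example can be sketched as follows, using get_dummies() for one-hot encoding and LabelEncoder for label encoding. The DataFrame and its column names are hypothetical.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Hypothetical "genre" column using the three values from the text.
songs = pd.DataFrame({"genre": ["pop", "rock", "jazz", "rock"]})

# One-hot encoding: one indicator column per genre.
one_hot = pd.get_dummies(songs["genre"], prefix="genre", dtype=int)

# Label encoding: classes are sorted, so jazz=0, pop=1, rock=2.
encoder = LabelEncoder()
songs["genre_code"] = encoder.fit_transform(songs["genre"])

print(one_hot)
print(songs)
```

Each row of the one-hot result has exactly one 1, whereas the label-encoded column packs the same information into a single integer per row.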

Final Thoughts

Mastering data preprocessing is a fundamental step in your data analysis and machine learning journey. It allows you to transform raw, messy data into clean, understandable input that can be used to create accurate and efficient models. While it may seem tedious and uninteresting, proper data preprocessing can make a huge difference in your project's outcome. Put these Python tools into practice, and you'll be well on your way to becoming an expert data wrangler.


Happy coding ♥
