
Speech recognition is the ability of computer software to identify words and phrases in spoken language and convert them to human-readable text. In this tutorial, you will learn how you can convert speech to text in Python using the SpeechRecognition library.

With this library, we do not need to build any machine learning model from scratch: it provides convenient wrappers for various well-known public speech recognition APIs (such as Google Cloud Speech API, IBM Speech To Text, etc.).

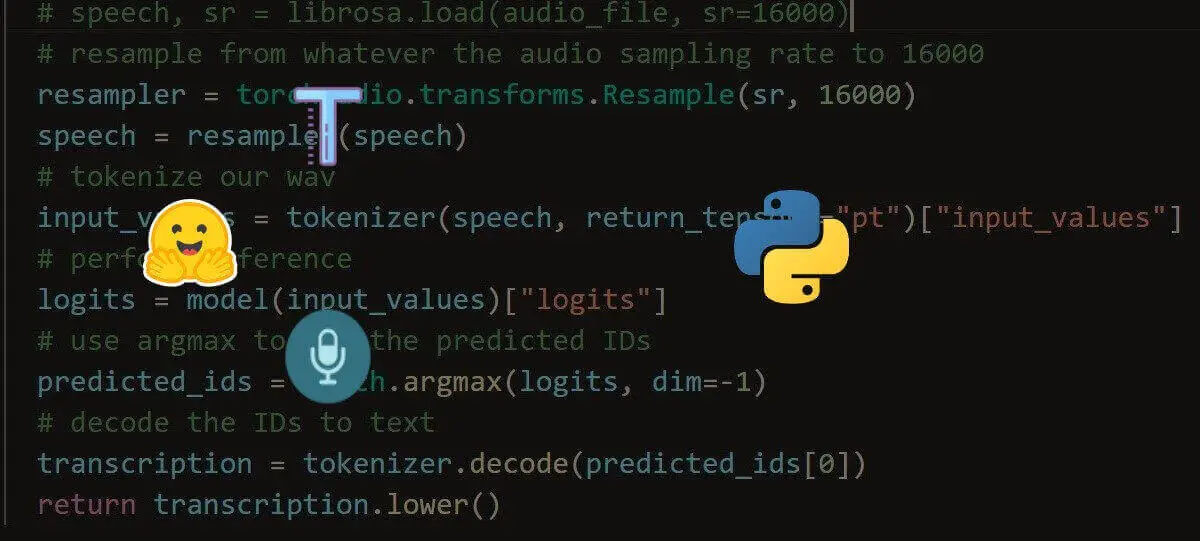

Note that if you would rather not use APIs and instead run inference directly on machine learning models, check this tutorial, in which I show you how to use a state-of-the-art machine learning model to perform speech recognition in Python.

Also, if you want other methods for automatic speech recognition (ASR), check this comprehensive speech recognition tutorial.

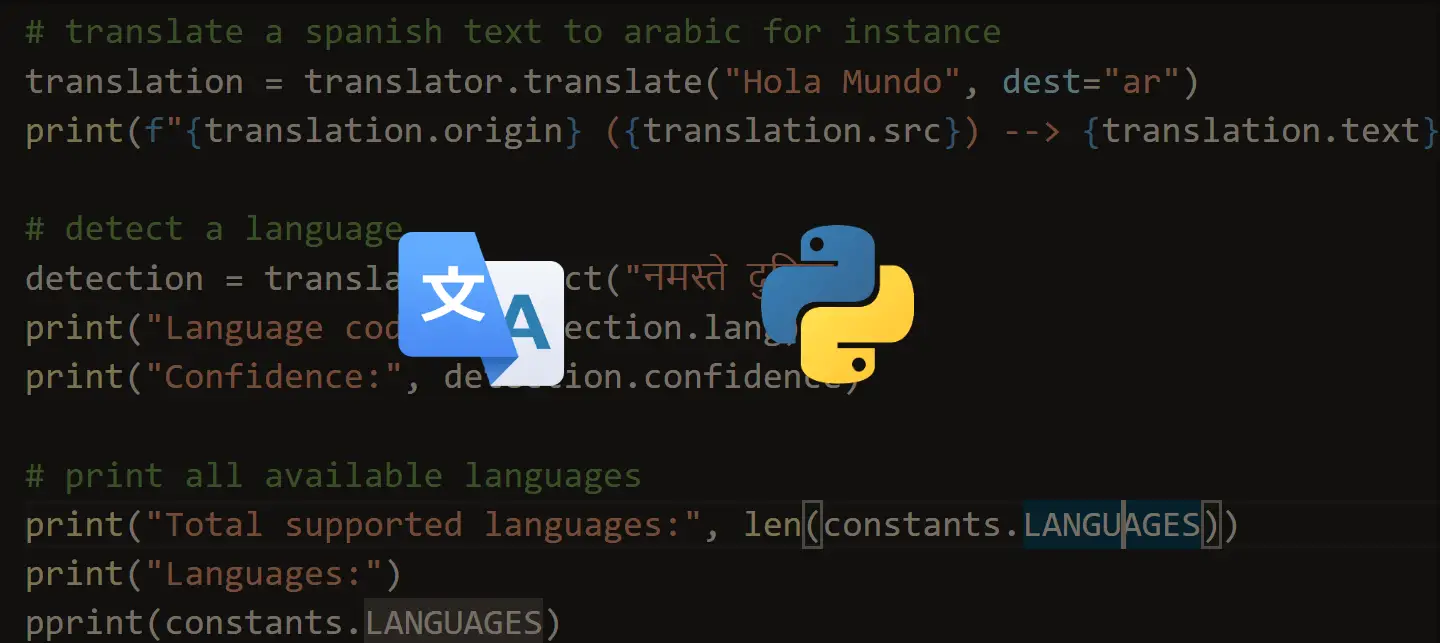

Learn also: How to Translate Text in Python.

Getting Started

Alright, let's get started by installing the libraries using pip:

```bash
pip3 install SpeechRecognition pydub
```

Okay, open up a new Python file and import it:

```python
import speech_recognition as sr
```

The nice thing about this library is that it supports several recognition engines:

- CMU Sphinx (offline)

- Google Speech Recognition

- Google Cloud Speech API

- Wit.ai

- Microsoft Bing Voice Recognition

- Houndify API

- IBM Speech To Text

- Snowboy Hotword Detection (offline)

We're going to use Google Speech Recognition here, as it's straightforward and doesn't require an API key.

Transcribing an Audio File

Make sure you have an audio file in the current directory that contains English speech (if you want to follow along with me, get the audio file here):

```python
filename = "16-122828-0002.wav"
```

This file was taken from the LibriSpeech dataset, but you can use any WAV audio file you want; just change the file name. Now let's initialize our speech recognizer:

```python
# initialize the recognizer
r = sr.Recognizer()
```

The code below loads the audio file and converts the speech into text using Google Speech Recognition:

```python
# open the file
with sr.AudioFile(filename) as source:
    # listen for the data (load audio to memory)
    audio_data = r.record(source)
    # recognize (convert from speech to text)
    text = r.recognize_google(audio_data)
    print(text)
```

This will take a few seconds to finish, as it uploads the audio to Google and retrieves the output. Here is my result:

```
I believe you're just talking nonsense
```

The above code works well for small or medium-sized audio files. In the next section, we'll write code for large files.
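How "small or medium" a file is is up to you. As a minimal sketch, you can check a WAV file's duration with Python's standard `wave` module before deciding whether to transcribe it in one go or split it first. The helper names `wav_duration_seconds()` and `needs_chunking()` and the 60-second cutoff are hypothetical choices for illustration, not limits documented by SpeechRecognition or Google:

```python
import os
import tempfile
import wave


def wav_duration_seconds(path):
    """Return the duration of a PCM WAV file in seconds."""
    with wave.open(str(path), "rb") as wf:
        return wf.getnframes() / wf.getframerate()


def needs_chunking(path, max_seconds=60.0):
    """Hypothetical rule of thumb: treat WAV files longer than max_seconds as "large".

    The 60-second default is an arbitrary assumption, not a documented API limit.
    """
    return wav_duration_seconds(path) > max_seconds


# Usage: write a 2-second silent mono WAV (16 kHz, 16-bit) so the example runs on its own
demo_path = os.path.join(tempfile.mkdtemp(), "demo.wav")
with wave.open(demo_path, "wb") as wf:
    wf.setnchannels(1)
    wf.setsampwidth(2)       # 2 bytes = 16-bit samples
    wf.setframerate(16000)
    wf.writeframes(b"\x00\x00" * 16000 * 2)  # 32000 frames = 2 seconds

print(wav_duration_seconds(demo_path))  # 2.0
print(needs_chunking(demo_path))        # False
```

If `needs_chunking()` returns `True`, you would use one of the chunking functions from the next section instead of a single `recognize_google()` call.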

Transcribing Large Audio Files

If you want to perform speech recognition on a long audio file, the functions below handle that quite well:

```python
# importing libraries
import speech_recognition as sr
import os
from pydub import AudioSegment
from pydub.silence import split_on_silence

# create a speech recognition object
r = sr.Recognizer()

# a function to recognize speech in the audio file
# so that we don't repeat ourselves in other functions
def transcribe_audio(path):
    # use the audio file as the audio source
    with sr.AudioFile(path) as source:
        audio_listened = r.record(source)
        # try converting it to text
        text = r.recognize_google(audio_listened)
    return text

# a function that splits the audio file into chunks on silence
# and applies speech recognition
def get_large_audio_transcription_on_silence(path):
    """Split the large audio file into chunks
    and apply speech recognition on each of these chunks"""
    # open the audio file using pydub
    sound = AudioSegment.from_file(path)
    # split audio sound where silence is 500 milliseconds or more and get chunks
    chunks = split_on_silence(
        sound,
        # experiment with this value for your target audio file
        min_silence_len=500,
        # adjust this per requirement
        silence_thresh=sound.dBFS - 14,
        # keep 500 ms of silence at each end of a chunk, adjustable as well
        keep_silence=500,
    )
    folder_name = "audio-chunks"
    # create a directory to store the audio chunks
    if not os.path.isdir(folder_name):
        os.mkdir(folder_name)
    whole_text = ""
    # process each chunk
    for i, audio_chunk in enumerate(chunks, start=1):
        # export audio chunk and save it in
        # the `folder_name` directory.
        chunk_filename = os.path.join(folder_name, f"chunk{i}.wav")
        audio_chunk.export(chunk_filename, format="wav")
        # recognize the chunk
        try:
            text = transcribe_audio(chunk_filename)
        except sr.UnknownValueError as e:
            print("Error:", str(e))
        else:
            text = f"{text.capitalize()}. "
            print(chunk_filename, ":", text)
            whole_text += text
    # return the text for all chunks detected
    return whole_text
```

Note: You need to install Pydub using pip for the above code to work. Pydub can read and write WAV files on its own, but it needs FFmpeg to handle other formats such as MP3.

The above function uses the split_on_silence() function from the pydub.silence module to split the audio into chunks wherever there is silence. The min_silence_len parameter is the minimum length of silence, in milliseconds, required for a split.

silence_thresh is the loudness threshold: anything quieter than it is considered silence. I have set it to the audio's average loudness (sound.dBFS) minus 14 dB. The keep_silence argument is the amount of silence, in milliseconds, to leave at the beginning and end of each detected chunk.
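To get a feel for what `sound.dBFS - 14` means numerically, here is a stdlib-only sketch of a dBFS calculation: the RMS of the samples relative to the maximum possible amplitude, expressed in decibels. This follows our understanding of how pydub defines `dBFS`, but the `dbfs()` helper is a hypothetical illustration, not pydub's code, and it assumes signed integer PCM samples:

```python
import math
from array import array


def dbfs(samples, sample_width=2):
    """Loudness of signed PCM samples in decibels relative to full scale (dBFS)."""
    if len(samples) == 0:
        return -math.inf
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    if rms == 0:
        return -math.inf  # digital silence
    max_amplitude = 2 ** (8 * sample_width - 1)  # 32768 for 16-bit audio
    return 20 * math.log10(rms / max_amplitude)


# A square wave at half of full scale (RMS = 16384) stands in for "the whole file"
samples = array("h", [16384, -16384] * 8000)
average_dbfs = dbfs(samples)
silence_thresh = average_dbfs - 14  # the same offset used in the function above
print(round(average_dbfs, 2), round(silence_thresh, 2))  # -6.02 -20.02
```

Because the threshold is relative to the file's own average loudness, the same `- 14` offset works on both quiet and loud recordings. That is why it's a reasonable starting point before fine-tuning.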

These parameters won't be perfect for every sound file, so experiment with them to suit your own large audio files.
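To see how min_silence_len and silence_thresh interact while you experiment, here is a simplified, stdlib-only sketch of silence-based splitting. The helper `nonsilent_ranges()` is hypothetical and is not pydub's implementation. It measures loudness in 10 ms steps, which is our own assumption, and it ignores keep_silence padding. It returns the `[start_ms, end_ms]` ranges of sound that remain after splitting on every silence of at least min_silence_len milliseconds:

```python
import math


def window_dbfs(window, max_amplitude=32768):
    """dBFS of one window of 16-bit samples (-inf for digital silence)."""
    rms = math.sqrt(sum(s * s for s in window) / len(window))
    return -math.inf if rms == 0 else 20 * math.log10(rms / max_amplitude)


def nonsilent_ranges(samples, frame_rate, min_silence_len=500,
                     silence_thresh=-40.0, step_ms=10):
    """Return [start_ms, end_ms] pairs of sound separated by silences of
    at least min_silence_len milliseconds (simplified, hypothetical helper)."""
    per_step = max(1, int(frame_rate * step_ms / 1000))  # samples per loudness window
    n_steps = len(samples) // per_step
    silent = [window_dbfs(samples[i * per_step:(i + 1) * per_step]) < silence_thresh
              for i in range(n_steps)]
    ranges = []
    start = None      # first step of the current sound region
    silence_run = 0   # consecutive silent steps seen so far
    for i, is_silent in enumerate(silent):
        if not is_silent:
            if start is None:
                start = i
            silence_run = 0
            continue
        silence_run += 1
        if start is not None and silence_run * step_ms >= min_silence_len:
            # the silence is long enough: close the region where the silence began
            ranges.append([start * step_ms, (i - silence_run + 1) * step_ms])
            start = None
    if start is not None:
        # close the last region, excluding any short trailing silence
        ranges.append([start * step_ms, (n_steps - silence_run) * step_ms])
    return ranges


# Usage: 1 kHz "audio" (1 sample per ms): 1 s sound, 600 ms silence,
# 1 s sound, 200 ms silence, 0.5 s sound
loud = [16384, -16384]
samples = loud * 500 + [0] * 600 + loud * 500 + [0] * 200 + loud * 250
print(nonsilent_ranges(samples, 1000, min_silence_len=500))  # [[0, 1000], [1600, 3300]]
print(nonsilent_ranges(samples, 1000, min_silence_len=100))  # [[0, 1000], [1600, 2600], [2800, 3300]]
```

Lowering min_silence_len makes the 200 ms pause count as a split point too, so you get more, shorter chunks. Raising silence_thresh has a similar effect, because more of the quiet audio counts as silence.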

After that, we iterate over all chunks, convert each one into text, and concatenate the results. Here is an example run:

```python
path = "7601-291468-0006.wav"
print("\nFull text:", get_large_audio_transcription_on_silence(path))
```

Note: You can get the 7601-291468-0006.wav file here.

Output:

```
audio-chunks\chunk1.wav : His abode which you had fixed in a bowery or country seat.
audio-chunks\chunk2.wav : At a short distance from the city.
audio-chunks\chunk3.wav : Just at what is now called dutch street.
audio-chunks\chunk4.wav : Sooner bounded with proofs of his ingenuity.
audio-chunks\chunk5.wav : Patent smokejacks.
audio-chunks\chunk6.wav : It required a horse to work some.
audio-chunks\chunk7.wav : Dutch oven roasted meat without fire.
audio-chunks\chunk8.wav : Carts that went before the horses.
audio-chunks\chunk9.wav : Weather cox that turned against the wind and other wrongheaded contrivances.
audio-chunks\chunk10.wav : So just understand can found it all beholders.

Full text: His abode which you had fixed in a bowery or country seat. At a short distance from the city. Just at what is now called dutch street. Sooner bounded with proofs of his ingenuity. Patent smokejacks. It required a horse to work some. Dutch oven roasted meat without fire. Carts that went before the horses. Weather cox that turned against the wind and other wrongheaded contrivances. So just understand can found it all beholders.
```

So, this function automatically creates a folder, saves the chunks of the original audio file in it, and then runs speech recognition on each of them.

If you want to split the audio file into fixed intervals instead, you can use the function below:

```python
# a function that splits the audio file into fixed interval chunks
# and applies speech recognition
def get_large_audio_transcription_fixed_interval(path, minutes=5):
    """Split the large audio file into fixed interval chunks
    and apply speech recognition on each of these chunks"""
    # open the audio file using pydub
    sound = AudioSegment.from_file(path)
    # split the audio file into chunks
    chunk_length_ms = int(1000 * 60 * minutes)  # convert to milliseconds
    chunks = [sound[i:i + chunk_length_ms] for i in range(0, len(sound), chunk_length_ms)]
    folder_name = "audio-fixed-chunks"
    # create a directory to store the audio chunks
    if not os.path.isdir(folder_name):
        os.mkdir(folder_name)
    whole_text = ""
    # process each chunk
    for i, audio_chunk in enumerate(chunks, start=1):
        # export audio chunk and save it in
        # the `folder_name` directory.
        chunk_filename = os.path.join(folder_name, f"chunk{i}.wav")
        audio_chunk.export(chunk_filename, format="wav")
        # recognize the chunk
        try:
            text = transcribe_audio(chunk_filename)
        except sr.UnknownValueError as e:
            print("Error:", str(e))
        else:
            text = f"{text.capitalize()}. "
            print(chunk_filename, ":", text)
            whole_text += text
    # return the text for all chunks detected
    return whole_text
```

The above function splits the large audio file into 5-minute chunks by default; change the minutes parameter to fit your needs. Since my audio file isn't that large, I'm splitting it into 10-second chunks (minutes=1/6):

```python
print("\nFull text:", get_large_audio_transcription_fixed_interval(path, minutes=1/6))
```

Output:

```
audio-fixed-chunks\chunk1.wav : His abode which you had fixed in a bowery or country seat at a short distance from the city just that one is now called.
audio-fixed-chunks\chunk2.wav : Dutch street soon abounded with proofs of his ingenuity patent smokejacks that required a horse to work some.
audio-fixed-chunks\chunk3.wav : Oven roasted meat without fire carts that went before the horses weather cox that turned against the wind and other wrong head.
audio-fixed-chunks\chunk4.wav : Contrivances that astonished and confound it all beholders.

Full text: His abode which you had fixed in a bowery or country seat at a short distance from the city just that one is now called. Dutch street soon abounded with proofs of his ingenuity patent smokejacks that required a horse to work some. Oven roasted meat without fire carts that went before the horses weather cox that turned against the wind and other wrong head. Contrivances that astonished and confound it all beholders.
```

Reading from the Microphone

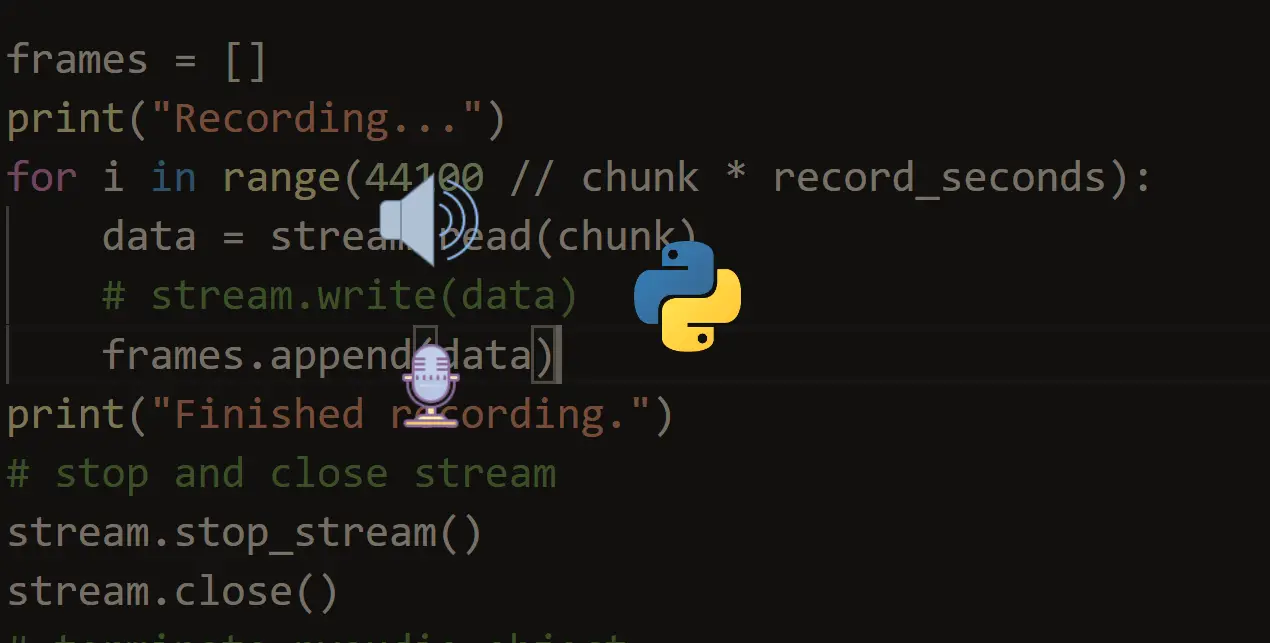

This requires PyAudio to be installed on your machine. Here is the installation process for each operating system:

Windows

You can just pip install it:

```bash
$ pip3 install pyaudio
```

Linux

You need to install the PortAudio development headers first, then you can pip install it:

```bash
$ sudo apt-get install portaudio19-dev python3-dev
$ pip3 install pyaudio
```

macOS

You need to install PortAudio first, then you can pip install it:

```bash
$ brew install portaudio
$ pip3 install pyaudio
```

Now let's use our microphone to convert our speech to text:

```python
import speech_recognition as sr

# initialize the recognizer (needed if you run this snippet on its own)
r = sr.Recognizer()

with sr.Microphone() as source:
    # read the audio data from the default microphone
    audio_data = r.record(source, duration=5)
    print("Recognizing...")
    # convert speech to text
    text = r.recognize_google(audio_data)
    print(text)
```

This will listen to your microphone for 5 seconds and then try to convert that speech into text!

It is similar to the previous code, but here we use the Microphone() object to read audio from the default microphone. The duration parameter of the record() function stops reading after 5 seconds, and the audio data is then uploaded to Google to get the output text.

You can also use the offset parameter in the record() function to start recording after offset seconds.
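SpeechRecognition handles offset and duration for you. To show what these two parameters mean in terms of audio frames, here is a stdlib-only sketch that reads the same kind of time window from a WAV file with the `wave` module. The `read_window()` helper is hypothetical and assumes PCM WAV input; it is not how the library implements `record()`:

```python
import os
import tempfile
import wave
from array import array


def read_window(path, offset=0.0, duration=None):
    """Return the raw PCM frames of a WAV file starting `offset` seconds in
    and lasting `duration` seconds (or until the end of the file)."""
    with wave.open(path, "rb") as wf:
        rate = wf.getframerate()
        total = wf.getnframes()
        start = min(int(offset * rate), total)  # never seek past the end
        wf.setpos(start)
        count = total - start if duration is None else int(duration * rate)
        return wf.readframes(count)


# Usage: a 3-second mono 8 kHz 16-bit WAV whose samples equal 0, 1000 and 2000
# during seconds 0, 1 and 2, so each window is easy to recognize
rate = 8000
path = os.path.join(tempfile.mkdtemp(), "three-seconds.wav")
with wave.open(path, "wb") as wf:
    wf.setnchannels(1)
    wf.setsampwidth(2)
    wf.setframerate(rate)
    for value in (0, 1000, 2000):
        wf.writeframes(array("h", [value] * rate).tobytes())

# analogous to record(source, offset=1, duration=1): one second, starting at 1 s
window = read_window(path, offset=1, duration=1)
print(len(window))                                    # 16000 (8000 frames * 2 bytes)
print(window == array("h", [1000] * rate).tobytes())  # True
```

In other words, offset skips `offset * sample_rate` frames before reading, and duration limits how many frames are read after that.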

Also, you can recognize different languages by passing the language parameter to the recognize_google() function. For instance, if you want to recognize Spanish speech, you would use:

```python
text = r.recognize_google(audio_data, language="es-ES")
```

Check out the supported languages in this StackOverflow answer.

Conclusion

As you can see, it is easy to use this library to convert speech to text. The library is widely used in real-world projects; check the official documentation for more details.

If you want to convert text to speech in Python as well, check this tutorial.

Read Also: How to Recognize Optical Characters in Images in Python.

Happy Coding ♥
