
Automatic Speech Recognition (ASR) is the technology that converts human speech into digital text. This tutorial dives into using the Hugging Face Transformers library in Python to perform speech recognition with two of the best-known state-of-the-art models: Wav2Vec 2.0 and Whisper.

If you are looking for other ways to do ASR, check this comprehensive speech recognition tutorial.

Wav2Vec2 is a pre-trained model that was first trained on speech audio alone (self-supervised) and then fine-tuned on transcribed speech data (the LibriSpeech dataset). It has outperformed previous semi-supervised models.

As in Masked Language Modeling, Wav2Vec2 encodes speech audio via a multi-layer convolutional neural network and then masks spans of the resulting latent speech representations. These representations are then fed to a Transformer network to build contextualized representations; check the Wav2Vec2 paper for more information.
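To make the masking step more concrete, here is a minimal sketch in plain Python. It is not the model's actual implementation: I'm assuming a simplified scheme where each latent time step is picked as a span start independently with a small probability, and a fixed number of following steps is masked. The function names, the sequence length and the probability and span values are illustrative choices of mine.

```python
import random

def sample_span_starts(num_steps, start_prob, rng):
    """Pick each time step as a span start independently with probability start_prob."""
    return [t for t in range(num_steps) if rng.random() < start_prob]

def mask_spans(num_steps, starts, span_len):
    """Return a boolean mask where span_len steps from each start are masked.

    Spans may overlap and are truncated at the end of the sequence.
    """
    mask = [False] * num_steps
    for start in starts:
        for t in range(start, min(start + span_len, num_steps)):
            mask[t] = True
    return mask

# deterministic toy example: two spans of length 3 over 10 latent steps
print(mask_spans(10, starts=[1, 7], span_len=3))

# random example with illustrative values (assumed, not taken from this tutorial)
rng = random.Random(0)
starts = sample_span_starts(856, start_prob=0.065, rng=rng)
mask = mask_spans(856, starts, span_len=10)
print(f"{sum(mask)} of {len(mask)} latent steps masked")
```

During pre-training, the model then has to identify the true latent representation at each masked position from the surrounding context.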

Whisper, on the other hand, is a general-purpose speech recognition transformer model, trained on a large dataset of diverse, weakly supervised audio (680,000 hours) covering multiple languages and multiple tasks (speech recognition, speech translation, language identification, and voice activity detection).

Whisper models exhibit a robust capacity to adapt to various datasets and fields without requiring fine-tuning. As the Whisper paper puts it: "The goal of Whisper is to develop a single robust speech processing system that works reliably without the need for dataset-specific fine-tuning to achieve high-quality results on specific distributions".

Table of contents

- Getting Started

- Using Wav2Vec 2.0 Models

- Using Whisper Models

- Transcribing your Voice

- Transcribing Long Audio Samples

- Conclusion

Getting Started

To get started, let's install the required libraries:

$ pip install transformers==4.28.1 soundfile sentencepiece torchaudio pydub

We'll be using torchaudio for loading audio files. Note that to record from the microphone in Python, you need PyAudio if you run the code in your own environment, and PyDub if you're on Colab.

Let's import our libraries:

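# import only the classes used in this tutorial instead of a wildcard import
from transformers import (
    Wav2Vec2Processor, Wav2Vec2ForCTC,
    WhisperProcessor, WhisperForConditionalGeneration,
    pipeline,
)
import torch
import soundfile as sf
# import librosa
import os
import torchaudio

# use the first GPU if available, otherwise fall back to the CPU
device = "cuda:0" if torch.cuda.is_available() else "cpu"

Using Wav2Vec 2.0 Models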

Loading the Model

Next, loading the processor and the model weights of wav2vec2:

# wav2vec2_model_name = "facebook/wav2vec2-base-960h" # 360MB

wav2vec2_model_name = "facebook/wav2vec2-large-960h-lv60-self" # pretrained 1.26GB

# wav2vec2_model_name = "jonatasgrosman/wav2vec2-large-xlsr-53-english" # English-only, 1.26GB

# wav2vec2_model_name = "jonatasgrosman/wav2vec2-large-xlsr-53-arabic" # Arabic-only, 1.26GB

# wav2vec2_model_name = "jonatasgrosman/wav2vec2-large-xlsr-53-spanish" # Spanish-only, 1.26GB

wav2vec2_processor = Wav2Vec2Processor.from_pretrained(wav2vec2_model_name)

wav2vec2_model = Wav2Vec2ForCTC.from_pretrained(wav2vec2_model_name).to(device)

There are two commonly used sets of model architectures and weights for wav2vec2. wav2vec2-base-960h is the base architecture, about 360MB in size. It was trained on 960 hours of the LibriSpeech dataset, on speech audio sampled at 16kHz, and achieves a 3.4% Word Error Rate (WER) on the clean test set.

On the other hand, wav2vec2-large-960h-lv60-self is a larger model, about 1.26GB in size (it may strain your laptop's RAM), but it achieves a 1.9% WER (the lower, the better) on the clean test set. So this one is much better for recognition, but it is heavier and takes more time for inference. Feel free to choose which one suits you best.
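Since WER comes up whenever ASR models are compared, here is a small sketch of how it can be computed: the word-level edit distance (substitutions, insertions, and deletions) divided by the number of words in the reference. The function name and the two example sentences are made up for illustration; in practice you would likely use a dedicated library.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance between the two texts, divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("the reference must contain at least one word")
    # prev[j] holds the edit distance between the processed reference words
    # and the first j hypothesis words
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)

# made-up example: one substitution and one deletion over six reference words
wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
print(f"WER: {wer:.1%}")
```

A WER of 1.9% therefore means roughly two word errors for every hundred reference words.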

If you want a language-specific model, make sure you use a fine-tuned version of Wav2Vec 2.0; you can browse the models available for each language on the Hugging Face model hub.

Wav2Vec2 was trained using Connectionist Temporal Classification (CTC), so that's why we're using the Wav2Vec2ForCTC class for loading the model.
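To get a feel for what CTC means at inference time, here is a minimal sketch of greedy CTC decoding in plain Python: take the most likely token for every frame, merge consecutive repeats, and drop the blank token. The tiny vocabulary and the frame IDs below are made up; the real tokenizer has its own vocabulary and also handles a word delimiter.

```python
def ctc_greedy_decode(frame_ids, id_to_char, blank_id=0):
    """Collapse consecutive repeated IDs, then drop the blank token."""
    chars = []
    prev = None
    for idx in frame_ids:
        # emit a character only when the ID changes and it is not the blank
        if idx != prev and idx != blank_id:
            chars.append(id_to_char[idx])
        prev = idx
    return "".join(chars)

# hypothetical vocabulary where ID 0 plays the role of the CTC blank
vocab = {0: "<pad>", 1: "h", 2: "e", 3: "l", 4: "o"}
# one predicted ID per audio frame; the blank between the two 3s keeps both l's
frames = [1, 1, 0, 2, 2, 3, 0, 3, 3, 4, 0]
print(ctc_greedy_decode(frames, vocab))  # hello
```

Note how the blank is what allows a doubled letter to survive the merging of repeats.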

Next, here are some audio samples:

# audio_url = "https://github.com/x4nth055/pythoncode-tutorials/raw/master/machine-learning/speech-recognition/16-122828-0002.wav"

audio_url = "https://github.com/x4nth055/pythoncode-tutorials/raw/master/machine-learning/speech-recognition/30-4447-0004.wav"

# audio_url = "https://github.com/x4nth055/pythoncode-tutorials/raw/master/machine-learning/speech-recognition/7601-291468-0006.wav"

Preparing the Audio File

Feel free to choose any of the above audio files. The below cell loads the audio file:

# load our wav file

speech, sr = torchaudio.load(audio_url)

speech = speech.squeeze()

# or using librosa

# speech, sr = librosa.load(audio_file, sr=16000)

sr, speech.shape

(16000, torch.Size([274000]))

The torchaudio.load() function loads the audio file and returns the audio as a tensor along with its sample rate. Here it downloads the file automatically because we pass a URL; a path on disk works as well.

We also call the squeeze() method to remove dimensions of size 1, i.e., converting the tensor from shape (1, 274000) to (274000,).
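One caveat worth keeping in mind: squeeze() only removes dimensions of size 1, so a stereo file with shape (2, N) would keep both channels. A common way to handle that is to average the channels into one. Below is a sketch with plain Python lists; the to_mono() helper is hypothetical and not part of the tutorial's code.

```python
def to_mono(channels):
    """Average a list of equally long channels into a single mono signal."""
    if not channels:
        raise ValueError("expected at least one channel")
    length = len(channels[0])
    if any(len(ch) != length for ch in channels):
        raise ValueError("all channels must have the same length")
    # zip(*channels) yields the samples of all channels for each time step
    return [sum(samples) / len(channels) for samples in zip(*channels)]

stereo = [[0.5, 0.25, -1.0], [0.5, 0.75, 1.0]]
print(to_mono(stereo))  # [0.5, 0.5, 0.0]

mono = [[0.1, 0.2]]
print(to_mono(mono))  # [0.1, 0.2]
```

With a PyTorch tensor of shape (channels, samples), the equivalent would be speech.mean(dim=0).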

Next, we need to make sure the input audio file to the model has the sample rate of 16000Hz because wav2vec2 is trained on that:

# resample from whatever the audio sampling rate to 16000

resampler = torchaudio.transforms.Resample(sr, 16000)

speech = resampler(speech)

speech.shape

torch.Size([274000])

We used Resample from torchaudio.transforms, which converts the loaded audio from one sampling rate to another on the fly.
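If you're wondering what resampling does to the samples, here is a deliberately naive sketch that uses linear interpolation on a plain list. This is not what torchaudio does internally (it uses a higher-quality interpolation), and the resample_linear() helper is a made-up name; the point is only to show how the number of samples scales with the ratio of the two rates.

```python
def resample_linear(samples, orig_sr, target_sr):
    """Naively resample a list of samples using linear interpolation."""
    if orig_sr <= 0 or target_sr <= 0:
        raise ValueError("sampling rates must be positive")
    if not samples:
        return []
    # the output length scales with the ratio of the two sampling rates
    n_out = max(1, round(len(samples) * target_sr / orig_sr))
    last = len(samples) - 1
    out = []
    for i in range(n_out):
        # position of the i-th output sample on the input time axis
        pos = i * orig_sr / target_sr
        left = min(int(pos), last)
        right = min(left + 1, last)
        frac = pos - left
        out.append(samples[left] * (1 - frac) + samples[right] * frac)
    return out

# going from 8kHz to 16kHz doubles the number of samples
upsampled = resample_linear([0.0, 1.0, 0.0, -1.0], orig_sr=8000, target_sr=16000)
print(len(upsampled), upsampled)
```

In our case the file is already at 16kHz, which is why the shape stays at 274000 samples.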

Before we make the inference, we pass the audio vector to the wav2vec2 processor:

# tokenize our wav

input_values = wav2vec2_processor(speech, return_tensors="pt", sampling_rate=16000)["input_values"].to(device)

input_values.shape

torch.Size([1, 274000])

We specify the sampling_rate and pass "pt" to the return_tensors argument to get PyTorch tensors in the results.
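As a side note on shapes, the model does not produce one prediction per audio sample. Its convolutional feature encoder downsamples the waveform into a much shorter sequence of frames. The sketch below estimates the number of frames, assuming the kernel sizes and strides of the default Wav2Vec2 configuration (these values are my assumption and are not stated in this tutorial).

```python
# assumed kernel sizes and strides of the Wav2Vec2 convolutional feature encoder
CONV_KERNELS = (10, 3, 3, 3, 3, 2, 2)
CONV_STRIDES = (5, 2, 2, 2, 2, 2, 2)

def num_output_frames(num_samples, kernels=CONV_KERNELS, strides=CONV_STRIDES):
    """Apply the 1D convolution output-length formula (no padding) layer by layer."""
    length = num_samples
    for kernel, stride in zip(kernels, strides):
        length = (length - kernel) // stride + 1
    return length

num_samples = 274000
print(num_samples / 16000)             # duration in seconds: 17.125
print(num_output_frames(num_samples))  # 856
```

For our 274,000 samples this gives 856 frames, which matches the sequence length of the logits in the next section: roughly one frame every 20 milliseconds.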

Performing Inference

Let's pass the vector into our model now:

# perform inference

logits = wav2vec2_model(input_values)["logits"]

logits.shape

torch.Size([1, 856, 32])

Passing the logits to torch.argmax() to get the most likely prediction for each frame:

# use argmax to get the predicted IDs

predicted_ids = torch.argmax(logits, dim=-1)

predicted_ids.shape

torch.Size([1, 856])

Decoding them back into text; we also lowercase the text, as it's in all caps:

# decode the IDs to text

transcription = wav2vec2_processor.decode(predicted_ids[0])

transcription.lower()

and missus goddard three ladies almost always at the service of an invitation from hartfield and who were fetched and carried home so often that mister woodhouse thought it no hardship for either james or the horses had it taken place only once a year it would have been a grievance

Wrapping up the Code

Now let's collect all our previous code into a single function, which accepts the audio path, the model and the processor and returns the transcription:

def load_audio(audio_path):
    """Load the audio file & convert to 16,000 sampling rate"""
    # load our wav file
    speech, sr = torchaudio.load(audio_path)
    # resample from the file's sampling rate to 16000
    resampler = torchaudio.transforms.Resample(sr, 16000)
    speech = resampler(speech)
    return speech.squeeze()

def get_transcription_wav2vec2(audio_path, model, processor):
    speech = load_audio(audio_path)
    input_features = processor(speech, return_tensors="pt", sampling_rate=16000)["input_values"].to(device)
    # perform inference
    logits = model(input_features)["logits"]
    # use argmax to get the predicted IDs
    predicted_ids = torch.argmax(logits, dim=-1)
    # decode the IDs to text
    transcription = processor.batch_decode(predicted_ids)[0]
    return transcription.lower()

The load_audio() function is responsible for loading the audio file and converting it to a 16kHz sampling rate.

Awesome, you can pass any audio speech file path to the get_transcription_wav2vec2() function now:

get_transcription_wav2vec2("http://www0.cs.ucl.ac.uk/teaching/GZ05/samples/lathe.wav",
                           wav2vec2_model,
                           wav2vec2_processor)

a late is a big tool grab every dish of sugar

Using Whisper Models

The cool thing about Whisper is that there is a single model for almost all of the world's languages, and you only have to specify the language by prepending special language tokens to the decoding.

The table below shows the different Whisper models, their number of parameters, size, and the names of the multilingual and English-only models:

| Model | Number of Parameters | Size | Multilingual model | English-only model |
| --- | --- | --- | --- | --- |
| Tiny | 39M | ~ 151MB | openai/whisper-tiny | openai/whisper-tiny.en |
| Base | 74M | ~ 290MB | openai/whisper-base | openai/whisper-base.en |
| Small | 244M | ~ 967MB | openai/whisper-small | openai/whisper-small.en |
| Medium | 769M | ~ 3.06GB | openai/whisper-medium | openai/whisper-medium.en |
| Large | 1550M | ~ 6.17GB | openai/whisper-large-v2 | N/A |

Note that there isn't a large Whisper model that is English-only, as the multilingual version is sufficient. I'm going to use the Medium multilingual model (openai/whisper-medium) for the rest of the tutorial.

Loading the Model

Let's load the openai/whisper-medium one:

# whisper_model_name = "openai/whisper-tiny.en" # English-only, ~ 151 MB

# whisper_model_name = "openai/whisper-base.en" # English-only, ~ 290 MB

# whisper_model_name = "openai/whisper-small.en" # English-only, ~ 967 MB

# whisper_model_name = "openai/whisper-medium.en" # English-only, ~ 3.06 GB

# whisper_model_name = "openai/whisper-tiny" # multilingual, ~ 151 MB

# whisper_model_name = "openai/whisper-base" # multilingual, ~ 290 MB

# whisper_model_name = "openai/whisper-small" # multilingual, ~ 967 MB

whisper_model_name = "openai/whisper-medium" # multilingual, ~ 3.06 GB

# whisper_model_name = "openai/whisper-large-v2" # multilingual, ~ 6.17 GB

# load the whisper model and tokenizer

whisper_processor = WhisperProcessor.from_pretrained(whisper_model_name)

whisper_model = WhisperForConditionalGeneration.from_pretrained(whisper_model_name).to(device)

We use the WhisperProcessor to process our audio file, and WhisperForConditionalGeneration for loading the model.

If you get an out-of-memory error, you're free to use a smaller Whisper model, such as openai/whisper-tiny.
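To get a rough idea of which model fits your hardware, you can estimate the memory taken by the weights alone from the parameter counts in the table above. The sketch below assumes 4 bytes per parameter (float32) and ignores activations and any framework overhead, so treat the numbers as a lower bound; the helper name is made up.

```python
def estimate_weight_size_gb(num_params, bytes_per_param=4):
    """Rough size of the model weights in GB (weights only, no activations)."""
    return num_params * bytes_per_param / 1e9

# parameter counts taken from the table above
WHISPER_PARAMS = {"tiny": 39e6, "base": 74e6, "small": 244e6, "medium": 769e6, "large": 1550e6}

for name, num_params in WHISPER_PARAMS.items():
    fp32 = estimate_weight_size_gb(num_params)
    fp16 = estimate_weight_size_gb(num_params, bytes_per_param=2)
    print(f"{name:>6}: ~{fp32:.2f} GB in float32, ~{fp16:.2f} GB in float16")
```

The float32 estimates land close to the sizes listed in the table, which is a handy sanity check when picking a checkpoint.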

Preparing the Audio File

Let's use the same audio_url defined earlier. First, extracting the audio features:

# get the input features

input_features = whisper_processor(load_audio(audio_url), sampling_rate=16000, return_tensors="pt").input_features.to(device)

input_features.shape

Output:

torch.Size([1, 80, 3000])

Second, we get the special decoder tokens to begin with during inference:

# get special decoder tokens for the language

forced_decoder_ids = whisper_processor.get_decoder_prompt_ids(language="english", task="transcribe")

forced_decoder_ids

We set the language to "english" and the task to "transcribe" for English speech recognition. Here's the output:

[(1, 50259), (2, 50359), (3, 50363)]

Each pair is a decoder position and the token ID forced at that position. These are the special tokens for the language, the task, and disabling timestamps, in this order; they follow the start-of-transcript token at position 0.
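To see how these pairs are used, here is a small sketch that builds the beginning of the decoder sequence from them. The mapping from IDs to token strings is my assumption for the multilingual checkpoints used here (it is consistent with the decoded output shown later in this tutorial), and build_decoder_prompt() is a hypothetical helper, not a library function.

```python
# assumed ID-to-token mapping for the multilingual Whisper tokenizer
SPECIAL_TOKENS = {
    50258: "<|startoftranscript|>",
    50259: "<|en|>",
    50359: "<|transcribe|>",
    50363: "<|notimestamps|>",
}

def build_decoder_prompt(start_id, forced_decoder_ids):
    """Place each forced token ID at its decoder position, after the start token."""
    prompt = [start_id]
    for position, token_id in sorted(forced_decoder_ids):
        if position != len(prompt):
            raise ValueError(f"expected position {len(prompt)}, got {position}")
        prompt.append(token_id)
    return prompt

forced = [(1, 50259), (2, 50359), (3, 50363)]
prompt = build_decoder_prompt(50258, forced)
print(prompt)
print("".join(SPECIAL_TOKENS[token_id] for token_id in prompt))
```

The model then continues generating the actual transcription tokens after this forced prefix.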

Performing Inference

Now let's perform inference:

# perform inference

predicted_ids = whisper_model.generate(input_features, forced_decoder_ids=forced_decoder_ids)

predicted_ids.shape

This time, we use model.generate() to generate the speech transcription, which is explained in the text generation tutorial.

Finally, we decode the predicted IDs into text:

# decode the IDs to text

transcription = whisper_processor.batch_decode(predicted_ids, skip_special_tokens=True)

transcription

Output:

[' and Mrs. Goddard, three ladies almost always at the service of an invitation from Hartfield, and who were fetched and carried home so often that Mr. Woodhouse sought it no hardship for either James or the horses. Had it taken place only once a year it would have been a grievance.']

You can also set skip_special_tokens=False, to see the special tokens I've been talking about:

# decode the IDs to text with special tokens

transcription = whisper_processor.batch_decode(predicted_ids, skip_special_tokens=False)

transcription

Output:

['<|startoftranscript|><|en|><|transcribe|><|notimestamps|> and Mrs. Goddard, three ladies almost always at the service of an invitation from Hartfield, and who were fetched and carried home so often that Mr. Woodhouse sought it no hardship for either James or the horses. Had it taken place only once a year it would have been a grievance.<|endoftext|>']
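If you already have a decoded string that contains the special tokens, you can strip them at the text level. The sketch below does this with a regular expression. Note that this is only an illustration of the effect of skip_special_tokens=True, not how the tokenizer implements it, and the pattern assumes that every special token has the form <|...|>.

```python
import re

# matches tokens such as <|en|> or <|endoftext|>
SPECIAL_TOKEN_PATTERN = re.compile(r"<\|[^|]*\|>")

def strip_special_tokens(text):
    """Remove every <|...|> marker from a decoded string."""
    return SPECIAL_TOKEN_PATTERN.sub("", text)

# shortened version of the output above
raw = "<|startoftranscript|><|en|><|transcribe|><|notimestamps|> and Mrs. Goddard.<|endoftext|>"
print(SPECIAL_TOKEN_PATTERN.findall(raw))
print(repr(strip_special_tokens(raw)))  # ' and Mrs. Goddard.'
```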

Wrapping up the Code

Let's now wrap everything into a single function, as previously:

def get_transcription_whisper(audio_path, model, processor, language="english", skip_special_tokens=True):
    # resample from whatever the audio sampling rate to 16000
    speech = load_audio(audio_path)
    # get the input features
    input_features = processor(speech, return_tensors="pt", sampling_rate=16000).input_features.to(device)
    # get special decoder tokens for the language
    forced_decoder_ids = processor.get_decoder_prompt_ids(language=language, task="transcribe")
    # perform inference
    predicted_ids = model.generate(input_features, forced_decoder_ids=forced_decoder_ids)
    # decode the IDs to text
    transcription = processor.batch_decode(predicted_ids, skip_special_tokens=skip_special_tokens)[0]
    return transcription

If you leave out forced_decoder_ids, the model will detect the spoken language automatically.

Recognizing Arabic speech:

arabic_transcription = get_transcription_whisper("https://datasets-server.huggingface.co/assets/arabic_speech_corpus/--/clean/train/0/audio/audio.wav",
                                                 whisper_model,
                                                 whisper_processor,
                                                 language="arabic",
                                                 skip_special_tokens=True)

arabic_transcription

ورجح التقرير الذي أعده معهد أبحاث هضبة التبت في الأكاديمية الصينية للعلوم أن تستمر درجات الحرارة ومستويات الرطوبة في الارتفاع طوال هذا القرن.

Spanish:

spanish_transcription = get_transcription_whisper("https://www.lightbulblanguages.co.uk/resources/sp-audio/cual-es-la-fecha-cumple.mp3",
                                                  whisper_model,
                                                  whisper_processor,
                                                  language="spanish",
                                                  skip_special_tokens=True)

spanish_transcription

 ¿Cuál es la fecha de tu cumpleaños?

You can see the available languages that are supported by Whisper:

from transformers.models.whisper.tokenization_whisper import TO_LANGUAGE_CODE

# supported languages

TO_LANGUAGE_CODE

Output:

{'english': 'en',

'chinese': 'zh',

'german': 'de',

'spanish': 'es',

'russian': 'ru',

'korean': 'ko',

'french': 'fr',

'japanese': 'ja',

'portuguese': 'pt',

'turkish': 'tr',

'polish': 'pl',

'catalan': 'ca',

'dutch': 'nl',

'arabic': 'ar',

...<SNIPPED>...

'moldavian': 'ro',

'moldovan': 'ro',

'sinhalese': 'si',

'castilian': 'es'}

Transcribing your Voice

If you want to use your voice, I have prepared a code snippet at the end of the notebooks to record with your microphone. First, clone the repo:

!git clone -q --depth 1 https://github.com/snakers4/silero-models

%cd silero-models

Code for recording in the notebook:

from IPython.display import Audio, display, clear_output

from colab_utils import record_audio

import ipywidgets as widgets

from scipy.io import wavfile

import numpy as np

record_seconds = 20 #@param {type:"number", min:1, max:10, step:1}
sample_rate = 16000

def _record_audio(b):
    clear_output()
    audio = record_audio(record_seconds)
    display(Audio(audio, rate=sample_rate, autoplay=True))
    # scale the float audio to 16-bit PCM and save it as a WAV file
    wavfile.write('recorded.wav', sample_rate, (32767*audio).numpy().astype(np.int16))

button = widgets.Button(description="Record Speech")
button.on_click(_record_audio)
display(button)

Starting recording for 20 seconds...
Finished recording!

Let's run both models on the recorded audio:

print("Whisper:", get_transcription_whisper("recorded.wav", whisper_model, whisper_processor))
print("Wav2vec2:", get_transcription_wav2vec2("recorded.wav", wav2vec2_model, wav2vec2_processor))

Output:

Whisper: In 1905, Einstein published four groundbreaking papers. These outlined the theory of the photoelectric effect, explained Brownian motion, introduced special relativity, and demonstrated mass-energy equivalence. Einstein thought that the laws of

Wav2vec2: in nineteen o five ennstein published foreground brickin papers thise outlined the theory of the photo electric effect explained brownin motion introduced special relativity and demonstrated mass energy equivalents ennstein thought that the laws

Transcribing Long Audio Samples

To transcribe longer audio samples (longer than 30 seconds), we use the 🤗 pipeline API, which contains the necessary code for chunking. All we have to worry about is the chunk_length_s and stride_length_s parameters:

# initialize the pipeline

pipe = pipeline("automatic-speech-recognition",
                model=whisper_model_name, device=device)

def get_long_transcription_whisper(audio_path, pipe, return_timestamps=True,
                                   chunk_length_s=10, stride_length_s=2):
    """Get the transcription of a long audio file using the Whisper model"""
    return pipe(load_audio(audio_path).numpy(), return_timestamps=return_timestamps,
                chunk_length_s=chunk_length_s, stride_length_s=stride_length_s)

I have set the chunk length to 10 seconds and the stride length to 2 seconds. We perform inference on overlapping chunks so that the model has context on both sides of each chunk's center; for more details, I advise you to check this post. You're free to experiment with these parameters, of course.
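To visualize what these two parameters do, here is a simplified sketch that computes the chunk windows in seconds. It is my own approximation of the idea and not the pipeline's exact code: each chunk overlaps its neighbors by the stride on both sides, and only the central part of every chunk is kept (the first chunk keeps its beginning and the last one keeps its end). The chunk_windows() helper and the 25-second duration are made up.

```python
def chunk_windows(total_s, chunk_length_s=10.0, stride_length_s=2.0):
    """Return (start, end, keep_start, keep_end) tuples, all in seconds."""
    step = chunk_length_s - 2 * stride_length_s
    if step <= 0:
        raise ValueError("chunk_length_s must be larger than twice stride_length_s")
    windows = []
    start = 0.0
    while True:
        end = min(start + chunk_length_s, total_s)
        is_last = start + chunk_length_s >= total_s
        # drop the overlapping stride on each side, except at the audio boundaries
        keep_start = start if start == 0 else start + stride_length_s
        keep_end = end if is_last else end - stride_length_s
        windows.append((start, end, keep_start, keep_end))
        if is_last:
            break
        start += step
    return windows

# a hypothetical 25-second clip with 10-second chunks and 2-second strides
for start, end, keep_start, keep_end in chunk_windows(25.0):
    print(f"chunk {start:>4}-{end:<4} keeps {keep_start:>4}-{keep_end:<4}")
```

Notice that the kept regions tile the whole clip without gaps, while every kept region (except at the boundaries) has 2 seconds of extra context on each side.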

Let's extract the transcription of a sample long audio file using our get_long_transcription_whisper() function we just wrote:

# get the transcription of a sample long audio file

output = get_long_transcription_whisper(
    "https://www.voiptroubleshooter.com/open_speech/american/OSR_us_000_0060_8k.wav",
    pipe, chunk_length_s=10, stride_length_s=1)

This will take a few seconds on a Colab GPU. Notice that return_timestamps defaults to True in our function, so we also get the transcription of each chunk. Let's explore the output dictionary:

print(output["text"])

' The horse trotted around the field at a brisk pace. Find the twin who stole the pearl necklace. Cut the cord that binds the box tightly. The The red tape bound the smuggled food. Look in the corner to find the tan shirt. The cold drizzle will halt the bond drive. Nine men were hired to dig the ruins. The junkyard had a moldy smell. The flint sputtered and lit a pine torch. Soak the cloth and drown the sharp odor..'

Good. Now as chunks:

for chunk in output["chunks"]:
    # print the timestamp and the text
    print(chunk["timestamp"], ":", chunk["text"])

Output:

(0.0, 6.0) : The horse trotted around the field at a brisk pace.

(6.0, 12.8) : Find the twin who stole the pearl necklace.

(12.8, 21.0) : Cut the cord that binds the box tightly. The The red tape bound the smuggled food.

(21.0, 38.0) : Look in the corner to find the tan shirt. The cold drizzle will halt the bond drive. Nine men were hired to dig the ruins.

(38.0, 58.0) : The junkyard had a moldy smell. The flint sputtered and lit a pine torch. Soak the cloth and drown the sharp odor..

Conclusion

In this tutorial, we explored Automatic Speech Recognition (ASR) using 🤗 Transformers and two state-of-the-art models: Wav2Vec 2.0 and Whisper. We learned how to load and preprocess audio files, perform speech recognition using both models and decode the model output into human-readable text.

We also showed how you can transcribe your own voice, and do ASR on various global languages. Finally, we used the pipeline API to perform speech recognition on longer audio samples.


Note that there are other wav2vec2 weights fine-tuned by other people for languages other than English. Check the models' page and filter by the language you want to find a suitable model.

Below are some of our NLP tutorials:

- Named Entity Recognition using Transformers and Spacy in Python.

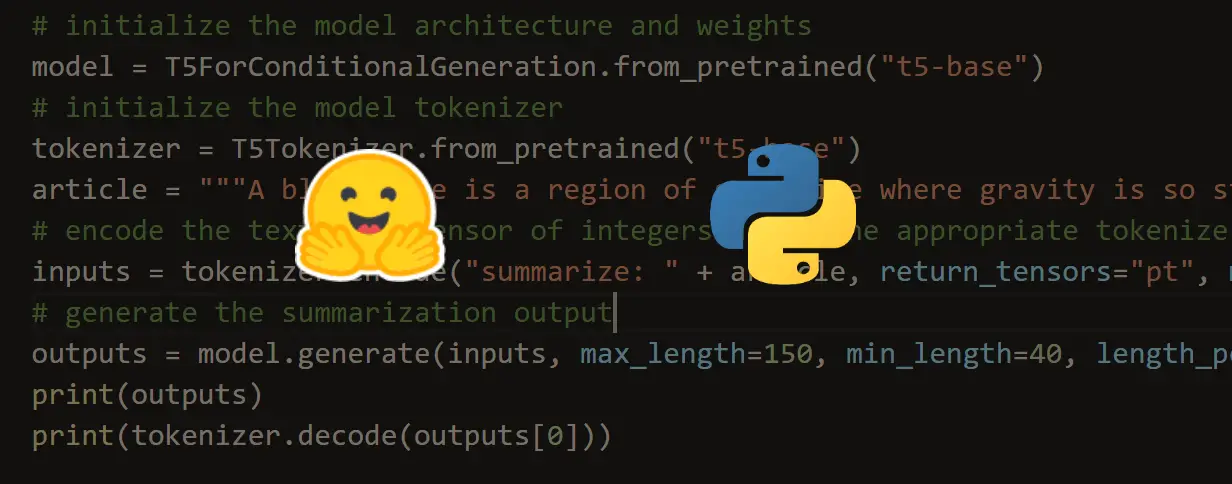

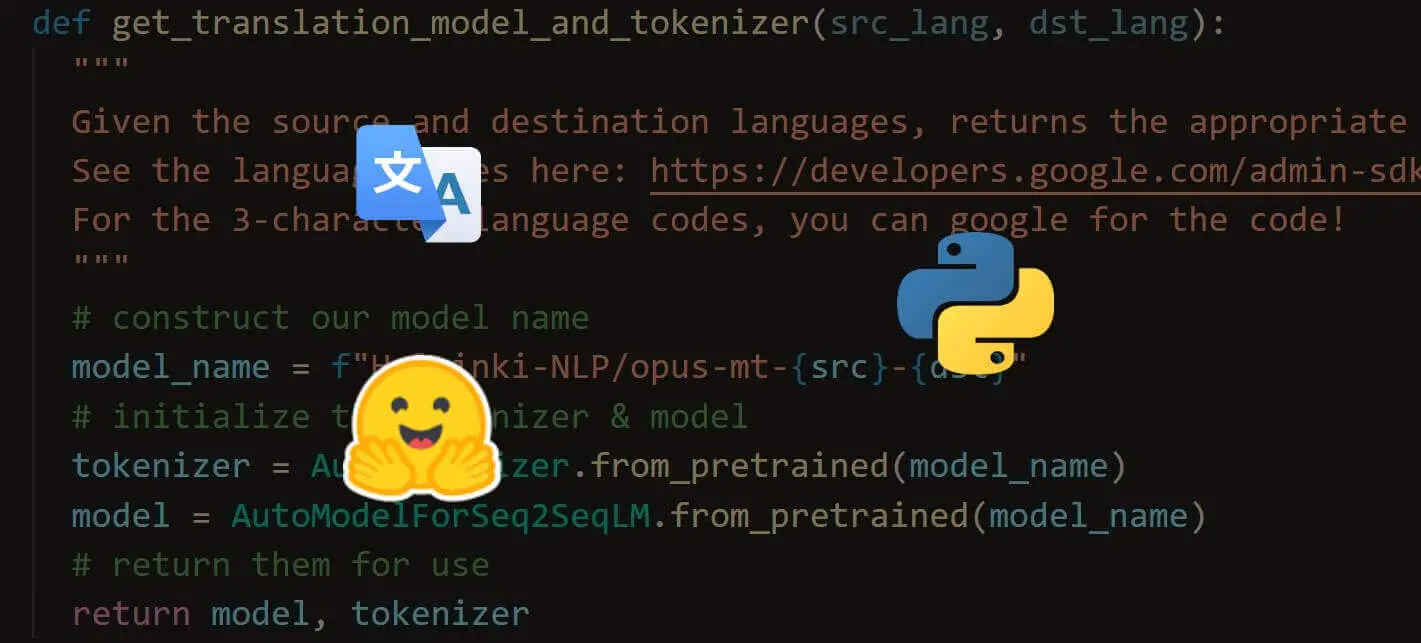

- Machine Translation using Transformers in Python.

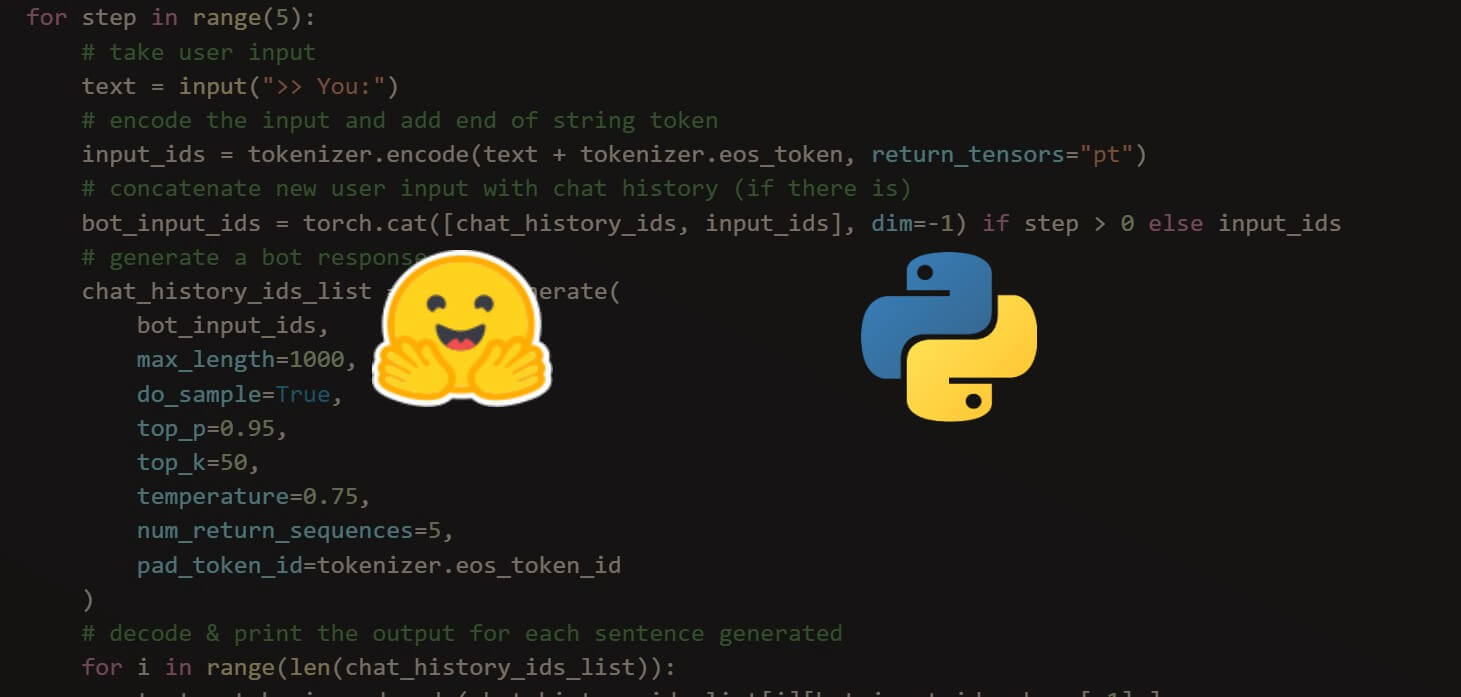

- Text Generation with Transformers in Python.

Happy learning ♥
