Text generation is the task of automatically producing text with machine learning, ideally so that a reader cannot tell whether it was written by a human or a machine. It is also widely used for text suggestion and completion in real-world applications.

In recent years, many transformer-based models have proven to be very good at this task. One of the best known is GPT-2, which was trained on a massive corpus of unlabeled text and generates quite impressive output.

Another major breakthrough came when OpenAI published the GPT-3 paper and demonstrated its capabilities. With 175 billion parameters, the model is more than 100 times larger than the largest GPT-2 (1.5 billion parameters), and more than 1,400 times larger than the 124M-parameter gpt2 checkpoint used below.

Unfortunately, we cannot run GPT-3 ourselves: OpenAI did not release the model weights, and even if it had, most of us do not have a machine with enough memory to load a model that large.

Luckily, EleutherAI did a great job of trying to mimic the capabilities of GPT-3 by releasing the GPT-J model. GPT-J has 6 billion parameters, arranged in 28 layers with a model dimension of 4096. It was pre-trained on the Pile, a large-scale dataset created by EleutherAI itself.

The Pile is massive, at over 825GB. It consists of 22 sub-datasets, including English Wikipedia (6.38GB), GitHub (95.16GB), Stack Exchange (32.2GB), ArXiv (56.21GB), and more. This helps explain the strong performance of GPT-J that you'll hopefully discover in this tutorial.

In this guide, we're going to perform text generation using GPT-2 as well as EleutherAI models, using the Hugging Face Transformers library in Python.

The table below lists some useful models along with their number of parameters and size. I suggest choosing the largest one that fits in your environment's memory:

| Model | Number of Parameters | Size |
|---|---|---|
| gpt2 | 124M | 523MB |
| EleutherAI/gpt-neo-125M | 125M | 502MB |
| EleutherAI/gpt-neo-1.3B | 1.3B | 4.95GB |
| EleutherAI/gpt-neo-2.7B | 2.7B | 9.94GB |
| EleutherAI/gpt-j-6B | 6B | 22.5GB |

The EleutherAI/gpt-j-6B model is 22.5GB in size, so make sure you have more than 22.5GB of memory available to run inference with it. The good news is that Google Colab with the High-RAM option worked for me. If you're not able to load a model that big, you can try smaller ones such as EleutherAI/gpt-neo-2.7B or EleutherAI/gpt-neo-1.3B. In this tutorial, we're going to use gpt2 and EleutherAI/gpt-j-6B.
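As a rough sanity check on these sizes, weights stored as 32-bit floats take about 4 bytes per parameter. The sketch below is only a back-of-the-envelope estimate under that assumption: the helper name is mine, the parameter counts are approximate, and real checkpoints carry a few extra buffers, so the numbers in the table differ slightly.

```python
def estimate_weights_gib(num_parameters: float, bytes_per_parameter: int = 4) -> float:
    """Rough size of the raw weights in GiB (assumes one dtype for every parameter)."""
    return num_parameters * bytes_per_parameter / 1024**3


# Approximate parameter counts; treat these as assumptions, not exact values.
models = {
    "gpt2": 124e6,
    "EleutherAI/gpt-neo-1.3B": 1.3e9,
    "EleutherAI/gpt-neo-2.7B": 2.7e9,
    "EleutherAI/gpt-j-6B": 6.05e9,
}

for name, params in models.items():
    fp32 = estimate_weights_gib(params, 4)  # 32-bit floats
    fp16 = estimate_weights_gib(params, 2)  # 16-bit floats, shown for comparison
    print(f"{name}: ~{fp32:.1f} GiB in float32, ~{fp16:.1f} GiB in float16")
```

For GPT-J, the float32 estimate lands close to the 22.5GB listed above, which is why the High-RAM runtime is needed.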

Note that this is different from generating AI chatbot conversations using models such as DialoGPT. If that's what you're after, we have a separate tutorial for it.

Let's get started by installing the transformers library:

$ pip install transformers

In this tutorial, we will only use the pipeline API, as it is more than enough for text generation.

Let's start with the standard GPT-2 model:

from transformers import pipeline

# download & load GPT-2 model
gpt2_generator = pipeline('text-generation', model='gpt2')

First, let's use the GPT-2 model to generate 3 different sentences by sampling from the top 50 candidates:

# generate 3 different sentences
# results are sampled from the top 50 candidates
sentences = gpt2_generator("To be honest, neural networks", do_sample=True, top_k=50, temperature=0.6, max_length=128, num_return_sequences=3)
for sentence in sentences:
    print(sentence["generated_text"])
    print("="*50)

Output:

Setting `pad_token_id` to `eos_token_id`:50256 for open-end generation.

To be honest, neural networks are still not very powerful. So they can't perform a lot of complex tasks like predicting the future — and they can't do that. They're just not very good at learning.

Instead, researchers are using the deep learning technology that's built into the brain to learn from the other side of the brain. It's called deep learning, and it's pretty straightforward.

For example, you can do a lot of things that are still not very well understood by the outside world. For example, you can read a lot of information, and it's a lot easier to understand what the person

==================================================

To be honest, neural networks are not perfect, but they are pretty good at it. I've used them to build a lot of things. I've built a lot of things. I'm pretty good at it.

When we talk about what we're doing with our AI, it's kind of like a computer is going to go through a process that you can't see. It's going to have to go through it for you to see it. And then you can see it. And you can see it. It's going to be there for you. And then it's going to be there for you to see it

==================================================

To be honest, neural networks are going to be very interesting to study for a long time. And they're going to be very interesting to read, because they're going to be able to learn a lot from what we've learned. And this is where the challenge is, and this is also something that we've been working on, is that we can take a neural network and make a neural network that's a lot simpler than a neural network, and then we can do that with a lot more complexity.

So, I think it's possible that in the next few years, we may be able to make a neural network that

==================================================

I have set top_k to 50, which means that at each step only the 50 most probable vocabulary tokens are kept as candidates. I also lowered the temperature to 0.6 (the default is 1) to increase the probability of picking high-probability tokens. As the temperature approaches 0, sampling behaves more and more like greedy search (i.e. always picking the most probable token); note that the library expects the value itself to be strictly positive.
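To make top-k sampling with a temperature more concrete, here is a minimal pure-Python sketch of a single sampling step. It is not how the library implements it internally; the function name and the toy logits are made up for illustration.

```python
import math
import random


def sample_top_k(logits, k=50, temperature=0.6, rng=random):
    """Sample one token index from the k highest-scoring logits."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Keep only the indices of the k largest logits (top-k filtering).
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Divide by the temperature: values below 1 sharpen the distribution.
    scaled = [logits[i] / temperature for i in top]
    # Unnormalized softmax weights (shifted by the max for numerical stability).
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    # Draw one index in proportion to its weight.
    return rng.choices(top, weights=weights, k=1)[0]


# Hypothetical logits for a 6-token vocabulary.
toy_logits = [2.0, 1.0, 0.5, -1.0, 3.0, 0.0]
rng = random.Random(0)
samples = [sample_top_k(toy_logits, k=3, temperature=0.6, rng=rng) for _ in range(1000)]
print({token: samples.count(token) for token in sorted(set(samples))})
```

With k=3, only the three highest-scoring tokens (indices 4, 0, and 1) can ever be drawn, and the low temperature pushes most of the draws toward index 4.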

Notice that the generated text is cut off mid-sentence; you can always increase max_length to generate more tokens.

By passing the input text to the TextGenerationPipeline (pipeline object), we're passing the arguments to the model.generate() method. Therefore, I highly suggest you check the parameters of the model.generate() reference for a more customized generation. I also suggest you read this blog post explaining most of the decoding techniques the method offers.
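For reference, this is roughly what calling model.generate() directly looks like, without the pipeline. Treat it as a sketch: generation_kwargs and generate_with_model are hypothetical helper names of mine, and the import sits inside the function only so that the settings helper can be used on its own.

```python
def generation_kwargs():
    """Sampling settings matching the pipeline call used in this tutorial."""
    return dict(do_sample=True, top_k=50, temperature=0.6,
                max_length=128, num_return_sequences=3)


def generate_with_model(prompt, model_name="gpt2"):
    """Tokenize the prompt, call model.generate() directly, and decode the results."""
    # Imported lazily so generation_kwargs() works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name)
    inputs = tokenizer(prompt, return_tensors="pt")
    outputs = model.generate(
        **inputs,
        pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no dedicated padding token
        **generation_kwargs(),
    )
    return [tokenizer.decode(ids, skip_special_tokens=True) for ids in outputs]


# Example call (downloads the model on first use):
# for text in generate_with_model("To be honest, neural networks"):
#     print(text)
```

Any other argument accepted by model.generate() can be added to the dictionary in the same way.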

Now that we have explored GPT-2, it's time to dive into the fascinating GPT-J:

# download & load GPT-J model! It's 22.5GB in size
gpt_j_generator = pipeline('text-generation', model='EleutherAI/gpt-j-6B')

The model is about 22.5GB in size, so make sure your environment can load it into memory. I'm using the High-RAM instance on Google Colab and it runs quite well. However, it may take a while to generate sentences, especially when you pass a higher value of max_length.

Let's pass the same parameters, but with a different prompt:

# generate sentences with TOP-K sampling
sentences = gpt_j_generator("To be honest, robots will", do_sample=True, top_k=50, temperature=0.6, max_length=128, num_return_sequences=3)
for sentence in sentences:
    print(sentence["generated_text"])
    print("="*50)

Output:

Setting `pad_token_id` to `eos_token_id`:50256 for open-end generation.

To be honest, robots will never replace humans.

The reason is simple: We humans are far more complex than the average robot.

The human brain is a marvel of complexity, capable of performing many thousands of tasks simultaneously. There are over 100 billion neurons in the human brain, with over 10,000 connections between each neuron, and neurons are capable of firing over a million times per second.

We have a brain that can reason, learn, and remember things. We can learn and retain information for a lifetime. We can communicate, collaborate, and work together to achieve goals. We can learn languages, play instruments

==================================================

To be honest, robots will probably replace many human jobs.

That's the prediction of a team of economists from the University of Oxford.

They say that in the future we can expect to see more robots doing jobs that are tedious, repetitive and dangerous.

Even something as simple as a housekeeper could be a thing of the past.

The researchers also think that robots will become cheaper and more versatile over time.

The idea of a robot housekeeper has been a popular one.

One company has already started a trial by offering a robot to take care of your home.

But the

==================================================

To be honest, robots will never replace the human workforce. It’s not a matter of if, but when. I can’t believe I’m writing this, but I’m glad I am.

Let’s start with what robots do. Robots are a form of technology. There’s a difference between a technology and a machine. A machine is a physical object designed to perform a specific task. A technology is a system of machines.

For example, a machine can be used for a specific purpose, but it still requires humans to operate it. A technology can be

==================================================

Honestly, I can't distinguish whether this is generated by a neural network or written by a human being!

Since GPT-J and the other EleutherAI pre-trained models are trained on the Pile dataset, they can generate more than just English prose. Let's try to generate Python code:

# generate Python Code!
print(gpt_j_generator(
"""
import os
# make a list of all african countries
""",
    do_sample=True, top_k=10, temperature=0.05, max_length=256)[0]["generated_text"])

Output:

import os

# make a list of all african countries

african_countries = ['Algeria', 'Angola', 'Benin', 'Burkina Faso', 'Burundi', 'Cameroon', 'Cape Verde', 'Central African Republic', 'Chad', 'Comoros', 'Congo', 'Democratic Republic of Congo', 'Djibouti', 'Egypt', 'Equatorial Guinea', 'Eritrea', 'Ethiopia', 'Gabon', 'Gambia', 'Ghana', 'Guinea', 'Guinea-Bissau', 'Kenya', 'Lesotho', 'Liberia', 'Libya', 'Madagascar', 'Malawi', 'Mali', 'Mauritania', 'Mauritius', 'Morocco', 'Mozambique', 'Namibia', 'Niger', 'Nigeria', 'Rwanda', 'Sao Tome and Principe', 'Senegal', 'Sierra Leone', 'Somalia', 'South Africa', 'South Sudan', 'Sudan', 'Swaziland', 'Tanzania', 'Togo', 'Tunisia',

I prompted the model with an import os statement to indicate that this is Python code, and added a comment about listing African countries. Surprisingly, it not only got the Python syntax right and generated African countries, but it also listed them in alphabetical order and chose a suitable variable name!

I definitely invite you to play around with the model and let me know in the comments if you find anything even more interesting.

Notice that I lowered the temperature to 0.05, as this is not really open-ended generation: I want the African countries to be correct, as well as the Python syntax. I tried increasing the temperature for this type of generation, and it led to misleading output.
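To see why such a low temperature makes the output nearly deterministic, here is a small sketch that converts some made-up logits into probabilities at different temperatures. The logits and the function name are illustrative assumptions, not values taken from GPT-J.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities after dividing by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits where the first token is only slightly preferred.
toy_logits = [2.0, 1.8, 1.0]

for temperature in (1.0, 0.6, 0.05):
    probs = softmax_with_temperature(toy_logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

At a temperature of 1 the first token gets less than half of the probability mass, while at 0.05 it takes almost all of it, so the sampler behaves close to greedy search.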

One more Python prompt:

print(gpt_j_generator(
"""
import cv2
image = "image.png"
# load the image and flip it
""",
    do_sample=True, top_k=10, temperature=0.05, max_length=256)[0]["generated_text"])

Output:

import cv2

image = "image.png"

# load the image and flip it

img = cv2.imread(image)

img = cv2.flip(img, 1)

# resize the image to a smaller size

img = cv2.resize(img, (100, 100))

# convert the image to grayscale

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# threshold the image to get the black and white image

thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY)

# show the image

cv2.imshow("thresh", thresh)

cv2.waitKey(0)

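cv2.destroyAllWindows()

The model generated plausible OpenCV code: it loads the image, applies the cv2.flip() function to it, resizes it, and then continues with making the image black and white. Interesting. One caveat: cv2.threshold() returns a (retval, image) tuple, so the generated cv2.imshow() call would need the second element of thresh in order to run.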

Next, let's try Bash scripting:

# complete bash script!
print(gpt_j_generator(
"""
# get .py files in /opt directory
ls *.py /opt
# get public ip address
""", max_length=256, top_k=50, temperature=0.05, do_sample=True)[0]["generated_text"])

Check this out:

# get .py files in /opt directory

ls *.py /opt

# get public ip address

ip addr show dev eth0 | grep inet | awk '{print $2}' | cut -d/ -f1

# get mac address

ip link show dev eth0 | grep HWaddr | awk '{print $2}' | cut -d/ -f1

# get ip address

ip addr show dev eth0 | grep inet | awk '{print $2}' | cut -d/ -f1

The first command worked like a charm on my machine!

Another shell script:

# generating bash script!
print(gpt_j_generator(
"""
# update the repository
sudo apt-get update
# install and start nginx
""", max_length=128, top_k=50, temperature=0.1, do_sample=True)[0]["generated_text"])

In the prompt, I updated the repository using the apt-get command and added a comment asking for the commands that install and start Nginx. Here is the output:

# update the repository

sudo apt-get update

# install and start nginx

sudo apt-get install nginx

sudo service nginx start

# create a new user

sudo useradd -m -s /bin/bash -G sudo -u www-data www-data

# add the user to the sudoers file

sudo visudo

# add the user to the www-data group

sudo usermod -aG www-data www-data

# add the user to the www-data group

sudo usermod -aG www-data www-data

# add the

The model successfully generated the two commands responsible for installing Nginx and starting the web server! It then tries to create a user and add it to the sudoers. However, notice the repetition. We can reduce it by setting the repetition_penalty parameter (the default is 1, i.e. no penalty); check this paper for more information.
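The idea behind the repetition penalty can be sketched in a few lines: before sampling the next token, the scores of tokens that were already generated are pushed down. The function below is my own simplified illustration on a plain list of logits, not the library's code.

```python
def apply_repetition_penalty(logits, previous_token_ids, penalty=1.2):
    """Return a copy of logits with already-generated tokens made less likely."""
    penalized = list(logits)
    for token_id in set(previous_token_ids):
        score = penalized[token_id]
        # Positive scores are divided and negative scores are multiplied,
        # so the score moves down in both cases when penalty > 1.
        penalized[token_id] = score / penalty if score > 0 else score * penalty
    return penalized


# Hypothetical logits for a 4-token vocabulary; tokens 0 and 2 were already generated.
toy_logits = [3.0, 1.0, -0.5, 2.0]
already_generated = [0, 2]
print(apply_repetition_penalty(toy_logits, already_generated, penalty=1.5))
```

In practice, you would simply pass repetition_penalty (for example 1.2, a value I have not tuned here) alongside the other arguments in the generator call.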

Let's try Java now, prompting the model with a Java main function wrapped in a Test class and adding a comment to print the first 20 Fibonacci numbers:

# Java code!
print(gpt_j_generator(
"""
public class Test {
public static void main(String[] args){
// printing the first 20 fibonacci numbers
""", max_length=256, top_k=50, temperature=0.1, do_sample=True)[0]["generated_text"])

Extraordinarily, the model added the complete Java code for generating Fibonacci numbers:

public class Test {

public static void main(String[] args){

// printing the first 20 fibonacci numbers

for(int i=0;i<20;i++){

System.out.println(fibonacci(i));

}

}

public static int fibonacci(int n){

if(n<2){

return n;

}

else{

return fibonacci(n-1) + fibonacci(n-2);

}

}

}

A:

You need to return the value from the recursive call.

public static int fibonacci(int n){

if(n<2){

return n;

}

else{

return fibonacci(n-1) + fibonacci(n-2);

}

}

A:

You need to return the value from the recursive call.

public static int fibonacci(int n){

if(n<2){

return n;

}

else{

I executed the code that comes before the odd "A:" marker: not only does it run, it also prints the correct sequence!
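The "A:" marker looks like the start of a question-and-answer style reply, possibly an echo of Q&A pages in the training data, though that is only my guess. If you just want the code, one simple option is to cut the generated text at the first such marker. The helper below is a hypothetical post-processing sketch, not part of the Transformers API.

```python
def truncate_at_markers(text, markers=("\nA:",)):
    """Cut the text at the earliest occurrence of any marker."""
    cut = len(text)
    for marker in markers:
        position = text.find(marker)
        if position != -1:
            cut = min(cut, position)
    return text[:cut].rstrip()


# Shortened stand-in for the generated Java output.
generated = "public class Test {\n}\n\nA:\n\nYou need to return the value."
print(truncate_at_markers(generated))
```

You can add more markers to the tuple if the model tends to drift in other ways for your prompts.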

Finally, let's try generating LaTeX code:

# LATEX!
print(gpt_j_generator(
r"""
% list of Asian countries
\begin{enumerate}
""", max_length=128, top_k=15, temperature=0.1, do_sample=True)[0]["generated_text"])

I began an ordered list in LaTeX and, before that, added a comment indicating a list of Asian countries. Output:

% list of Asian countries

\begin{enumerate}

\item \textbf{India}

\item \textbf{China}

\item \textbf{Japan}

\item \textbf{Korea}

\item \textbf{Taiwan}

\item \textbf{Hong Kong}

\item \textbf{Singapore}

\item \textbf{Malaysia}

\item \textbf{Indonesia}

\item \textbf{Philippines}

\item \textbf{Thailand}

\item \text

A correct syntax with the right countries!

Conclusion

Alright, that's it for this tutorial. We had a lot of fun generating such interesting text.

If you run the above code snippets, you'll definitely get different results than mine, as we're sampling from a token distribution by setting the argument do_sample to True. Make sure you explore different decoding methods by checking this blog post from Hugging Face, the model.generate() method parameters, and our previous tutorials on related topics:
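If you want repeatable runs while experimenting, the usual trick is to fix the random seed before sampling. The sketch below shows the idea with Python's own random module and a made-up vocabulary; it is an analogy rather than the actual generation code.

```python
import random


def sample_tokens(seed, vocabulary=("robots", "humans", "networks", "code"), count=5):
    """Draw a few pseudo-random tokens using a dedicated, seeded generator."""
    rng = random.Random(seed)
    return [rng.choice(vocabulary) for _ in range(count)]


# The same seed reproduces the same draw.
print(sample_tokens(seed=42) == sample_tokens(seed=42))
```

With Transformers, the analogous step is calling set_seed(42) (imported from transformers) before the generation call. Results may still vary across library versions and hardware.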

- Conversational AI Chatbot with Transformers in Python

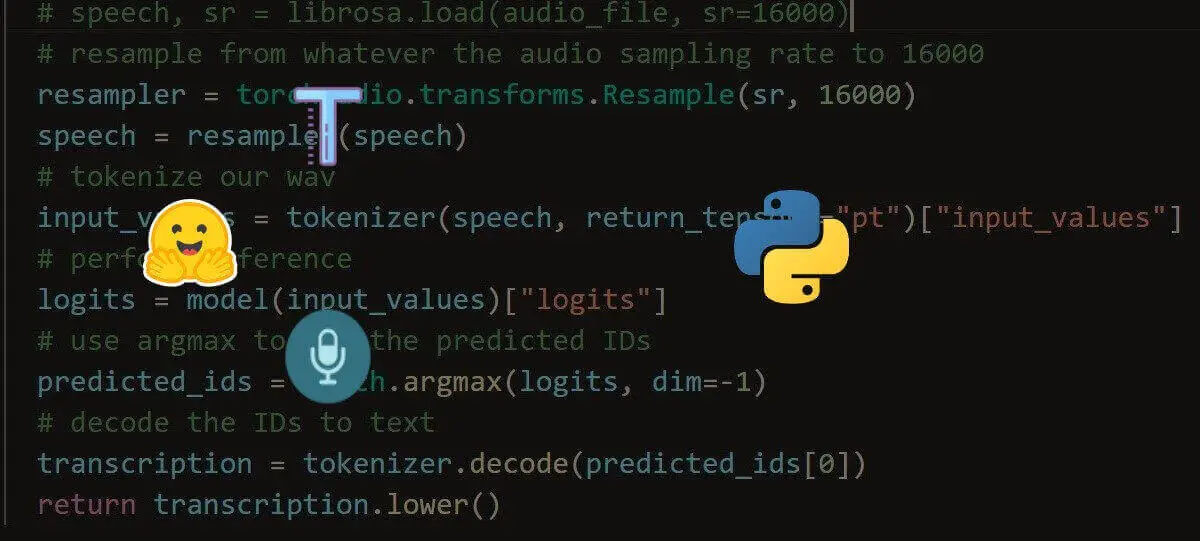

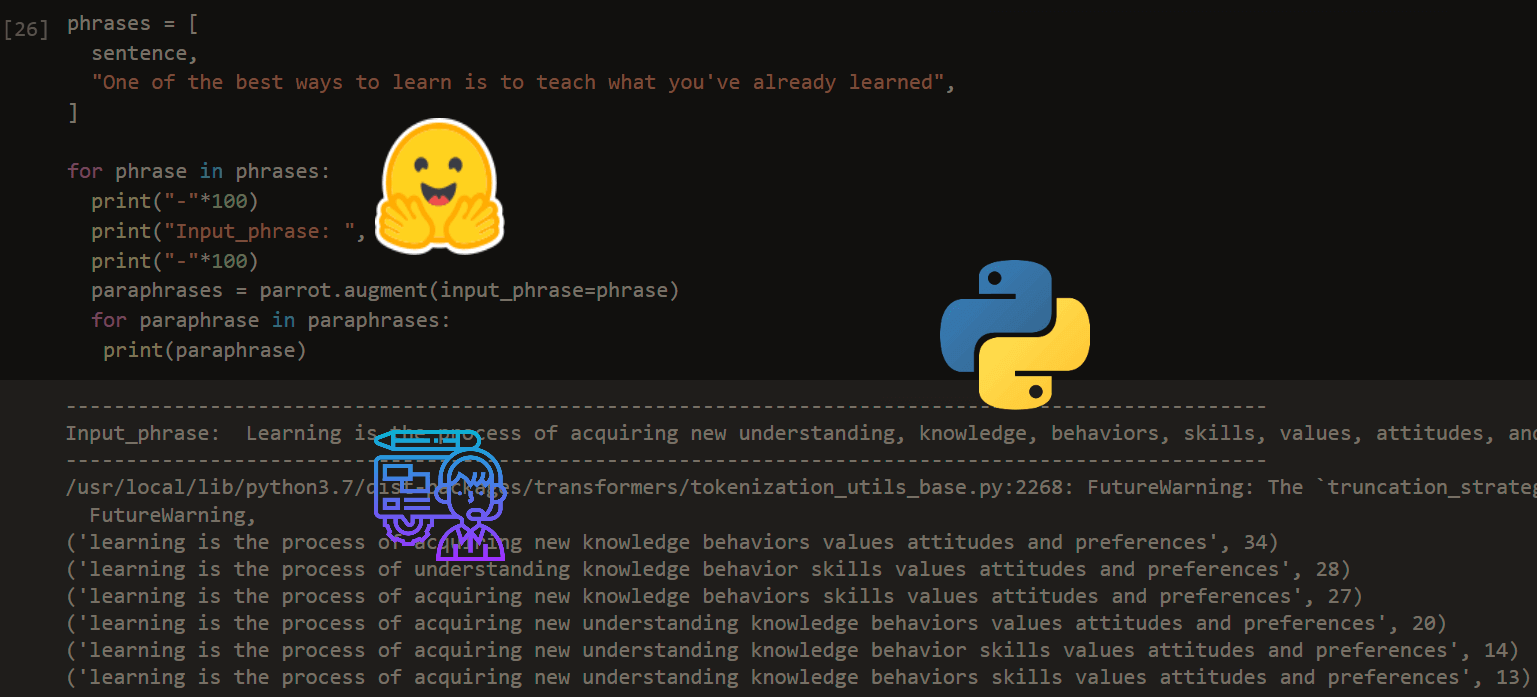

- How to Paraphrase Text using Transformers in Python

- How to Perform Text Summarization using Transformers in Python

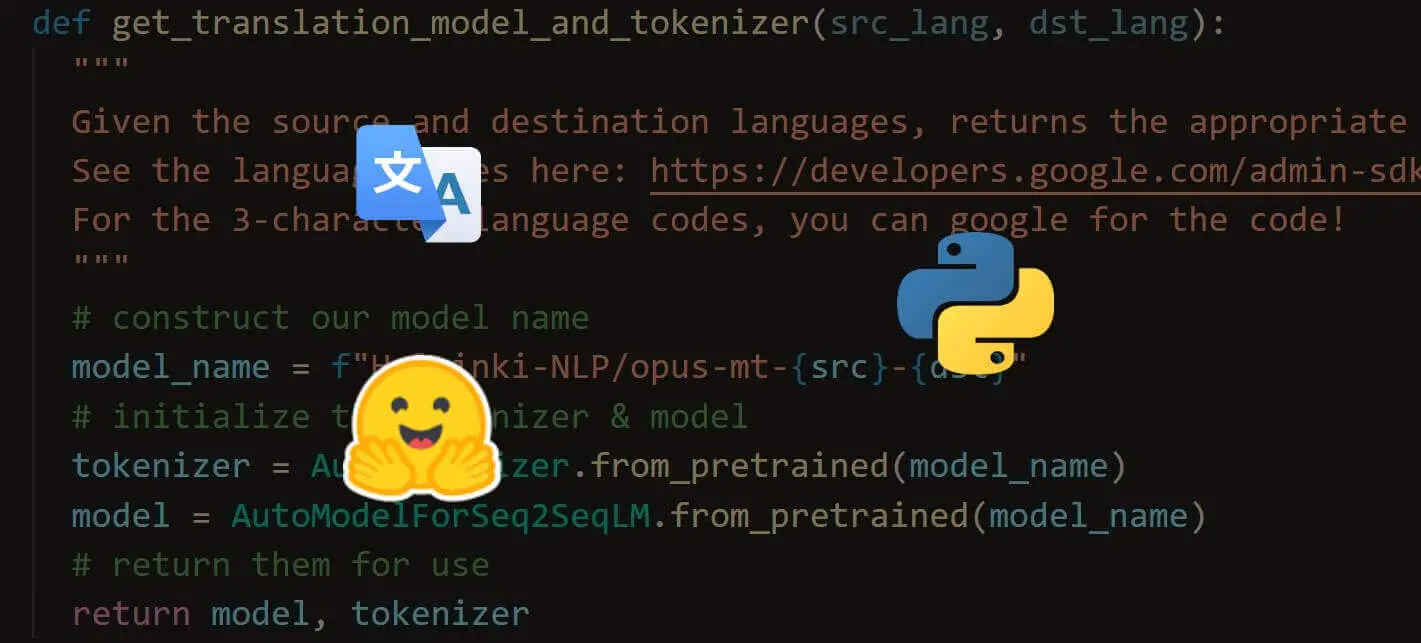

- Machine Translation using Transformers in Python

Check the full code here.

Happy learning ♥
