Text summarization is the task of shortening long pieces of text into a concise summary that preserves key information content and overall meaning.

There are two different approaches that are widely used for text summarization:

- Extractive Summarization: This is where the model identifies the meaningful sentences and phrases from the original text and only outputs those.

- Abstractive Summarization: The model produces an entirely different text shorter than the original. It generates new sentences in a new form, just like humans do. In this tutorial, we will use transformers for this approach.
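
To make the contrast concrete, here is a minimal sketch of the extractive approach. It assumes a naive word-frequency score, which is only an illustration and not what the transformers models in this tutorial do; the function name extractive_summary is hypothetical. Notice that it can only return sentences copied from the input, whereas an abstractive model writes new ones:

```python
import re
from collections import Counter


def extractive_summary(text, num_sentences=2):
    """Return the highest-scoring sentences, kept in their original order."""
    # Naive sentence split on ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]
    # Word frequencies over the whole text (lowercased, letters and apostrophes only).
    freqs = Counter(re.findall(r"[a-z']+", text.lower()))

    def score(sentence):
        words = re.findall(r"[a-z']+", sentence.lower())
        # Average frequency, so long sentences are not favored automatically.
        return sum(freqs[w] for w in words) / max(len(words), 1)

    # Pick the top sentences by score, then restore document order.
    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    top = ranked[:num_sentences]
    return " ".join(sentences[i] for i in sorted(top))
```

Calling extractive_summary(article_text, num_sentences=3) on any string returns up to three of its original sentences, joined by spaces.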

This tutorial will use HuggingFace's transformers library in Python to perform abstractive text summarization on any text we want.

We chose HuggingFace's Transformers because it provides us with thousands of pre-trained models, not just for text summarization but for a wide variety of NLP tasks, such as text classification, text paraphrasing, question answering, machine translation, text generation, chatbots, and more.

If you don't want to write code or host anything, then consider using online tools such as QuestGenius to summarize your text.

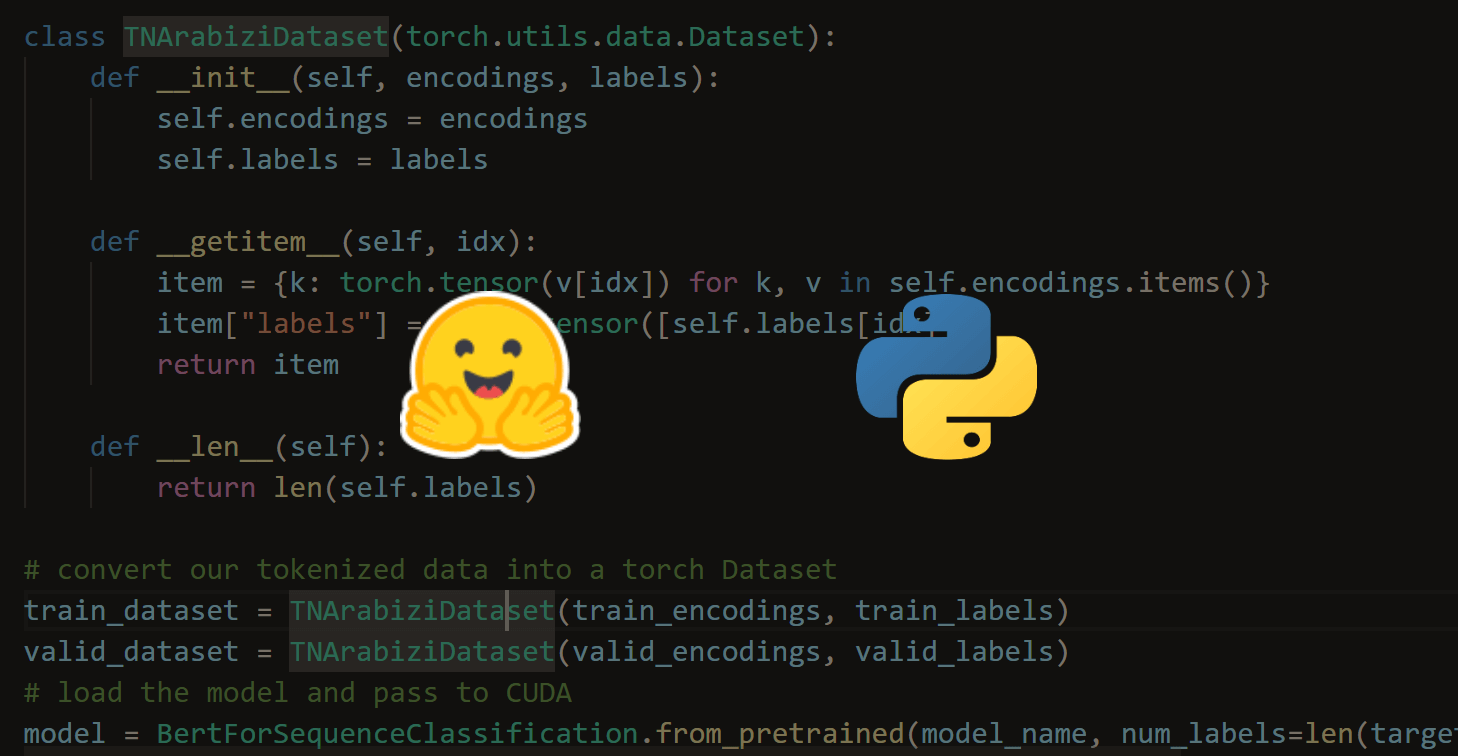

Related: How to Fine Tune BERT for Text Classification using Transformers in Python.

Let's get started by installing the required libraries:

pip3 install transformers torch sentencepiece

Using pipeline API

The most straightforward way to use models in transformers is using the pipeline API:

from transformers import pipeline

# using pipeline API for summarization task

summarization = pipeline("summarization")

original_text = """

Paul Walker is hardly the first actor to die during a production.

But Walker's death in November 2013 at the age of 40 after a car crash was especially eerie given his rise to fame in the "Fast and Furious" film franchise.

The release of "Furious 7" on Friday offers the opportunity for fans to remember -- and possibly grieve again -- the man that so many have praised as one of the nicest guys in Hollywood.

"He was a person of humility, integrity, and compassion," military veteran Kyle Upham said in an email to CNN.

Walker secretly paid for the engagement ring Upham shopped for with his bride.

"We didn't know him personally but this was apparent in the short time we spent with him.

I know that we will never forget him and he will always be someone very special to us," said Upham.

The actor was on break from filming "Furious 7" at the time of the fiery accident, which also claimed the life of the car's driver, Roger Rodas.

Producers said early on that they would not kill off Walker's character, Brian O'Connor, a former cop turned road racer. Instead, the script was rewritten and special effects were used to finish scenes, with Walker's brothers, Cody and Caleb, serving as body doubles.

There are scenes that will resonate with the audience -- including the ending, in which the filmmakers figured out a touching way to pay tribute to Walker while "retiring" his character. At the premiere Wednesday night in Hollywood, Walker's co-star and close friend Vin Diesel gave a tearful speech before the screening, saying "This movie is more than a movie." "You'll feel it when you see it," Diesel said. "There's something emotional that happens to you, where you walk out of this movie and you appreciate everyone you love because you just never know when the last day is you're gonna see them." There have been multiple tributes to Walker leading up to the release. Diesel revealed in an interview with the "Today" show that he had named his newborn daughter after Walker.

Social media has also been paying homage to the late actor. A week after Walker's death, about 5,000 people attended an outdoor memorial to him in Los Angeles. Most had never met him. Marcus Coleman told CNN he spent almost $1,000 to truck in a banner from Bakersfield for people to sign at the memorial. "It's like losing a friend or a really close family member ... even though he is an actor and we never really met face to face," Coleman said. "Sitting there, bringing his movies into your house or watching on TV, it's like getting to know somebody. It really, really hurts." Walker's younger brother Cody told People magazine that he was initially nervous about how "Furious 7" would turn out, but he is happy with the film. "It's bittersweet, but I think Paul would be proud," he said. CNN's Paul Vercammen contributed to this report.

"""

summary_text = summarization(original_text)[0]['summary_text']

print("Summary:", summary_text)

Note that the first time you execute this, it'll download the model architecture and the weights and tokenizer configuration.

We specify the "summarization" task to the pipeline, and then we simply pass our long text to it. Here is the output:

Summary: Paul Walker died in November 2013 after a car crash in Los Angeles .

The late actor was one of the nicest guys in Hollywood .

The release of "Furious 7" on Friday offers a chance to grieve again .

There have been multiple tributes to Walker leading up to the film's release .

Here is another example:

print("="*50)

# another example

original_text = """

For the first time in eight years, a TV legend returned to doing what he does best.

Contestants told to "come on down!" on the April 1 edition of "The Price Is Right" encountered not host Drew Carey but another familiar face in charge of the proceedings.

Instead, there was Bob Barker, who hosted the TV game show for 35 years before stepping down in 2007.

Looking spry at 91, Barker handled the first price-guessing game of the show, the classic "Lucky Seven," before turning hosting duties over to Carey, who finished up.

Despite being away from the show for most of the past eight years, Barker didn't seem to miss a beat.

"""

summary_text = summarization(original_text)[0]['summary_text']

print("Summary:", summary_text)

Output:

==================================================

Summary: Bob Barker returns to "The Price Is Right" for the first time in eight years .

The 91-year-old hosted the show for 35 years before stepping down in 2007 .

Drew Carey finished up hosting duties on the April 1 edition of the game show .

Barker handled the first price-guessing game of the show .

Note: I took the samples from the CNN/DailyMail dataset.

As you can see, the model generated an entirely new summarized text that does not belong to the original text.

This is the quickest way to use transformers. In the next section, we will learn another way to perform text summarization and customize how we want to generate the output.

Using T5 Model

The following code cell initializes the T5 transformer model along with its tokenizer:

from transformers import T5ForConditionalGeneration, T5Tokenizer

# initialize the model architecture and weights

model = T5ForConditionalGeneration.from_pretrained("t5-base")

# initialize the model tokenizer

tokenizer = T5Tokenizer.from_pretrained("t5-base")

The first time you execute the above code, it will download the t5-base model architecture, weights, tokenizer vocabulary, and configuration.

We're using the from_pretrained() method to load it as a pre-trained model. T5 is available in several sizes in this library, including t5-small, which is smaller than t5-base, and t5-large, which is larger and generally more accurate than both.

If you want to summarize text in a language other than English and none of the available models supports it, consider pre-training a model from scratch using your dataset. This tutorial will help you do that.

Let's set the text we want to summarize:

article = """

Justin Timberlake and Jessica Biel, welcome to parenthood.

The celebrity couple announced the arrival of their son, Silas Randall Timberlake, in statements to People.

"Silas was the middle name of Timberlake's maternal grandfather Bill Bomar, who died in 2012, while Randall is the musician's own middle name, as well as his father's first," People reports.

The couple announced the pregnancy in January, with an Instagram post. It is the first baby for both.

"""

Now let's encode this text to be suitable for the model as an input:

# encode the text into tensor of integers using the appropriate tokenizer

inputs = tokenizer.encode("summarize: " + article, return_tensors="pt", max_length=512, truncation=True)

We've used the tokenizer.encode() method to convert the string text to a sequence of integers, where each integer is the ID of a token in the model's vocabulary.

We set max_length to 512 together with truncation=True, indicating that we do not want the input text to exceed 512 tokens; anything beyond that is cut off. We also set return_tensors to "pt" to get PyTorch tensors as output.
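
Because truncation discards everything past the limit, a very long article would be summarized from its beginning only. One possible workaround, sketched here, is to split the text into chunks and summarize each chunk separately. The helper name chunk_words and the default of 300 words are assumptions for illustration, and whitespace-separated words are only a rough stand-in for tokens; a more careful version would count tokens with the tokenizer itself:

```python
def chunk_words(text, max_words=300):
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    # Step through the word list in strides of max_words.
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]
```

Each chunk could then be passed through tokenizer.encode() with the "summarize: " prefix in the same way as the full article.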

Notice that we prepended "summarize: " to the text. That's because T5 isn't just for text summarization: you can use it for any text-to-text transformation, such as machine translation, question answering, or even paraphrasing.

For example, to use the T5 transformer for machine translation, you can set "translate English to German: " instead of "summarize: " and you'll get German output. More precisely, you'll get a summarized German translation, because of the length settings passed to model.generate(). For more information about translation, check this tutorial.
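
The prefix idea can be wrapped in a small helper. This is only a sketch: the dictionary T5_PREFIXES, its keys, and the function build_t5_input are hypothetical names, and only the two prefixes mentioned in this tutorial are listed:

```python
# Task prefixes mentioned in this tutorial (the dictionary keys are made up).
T5_PREFIXES = {
    "summarize": "summarize: ",
    "en_to_de": "translate English to German: ",
}


def build_t5_input(task, text):
    """Prepend the task prefix so T5 knows which transformation to apply."""
    if task not in T5_PREFIXES:
        raise ValueError(f"Unknown task: {task!r}")
    return T5_PREFIXES[task] + text.strip()
```

The returned string is what you would pass to tokenizer.encode() in place of "summarize: " + article.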

Finally, let's generate the summarized text and print it:

# generate the summarization output
outputs = model.generate(
    inputs,
    max_length=150,
    min_length=40,
    length_penalty=2.0,
    num_beams=4,
    early_stopping=True)

# just for debugging
print(outputs)

# skip_special_tokens=True drops markers such as <pad> and </s> from the decoded text
print(tokenizer.decode(outputs[0], skip_special_tokens=True))

Output:

tensor([[    0,     8,  1158,  2162,     8,  8999,    16,  1762,     3,     5,
            34,    19,     8,   166,  1871,    21,   321,    13,   135,     3,
             5,     8,  1871,    19,     8,  2214,   564,    13, 25045, 16948,
            31,     7, 28574, 18573,     6,   113,  3977,    16,  1673,     3,
             5]])

the couple announced the pregnancy in January. it is the first baby for both of them.
the baby is the middle name of Timberlake's maternal grandfather, who died in 2012.

Excellent, the output looks concise and is newly generated with a new summarizing style.

As you can see, the summary is newly generated text rather than sentences copied from the original. But how can we objectively evaluate the quality of these summaries? One commonly used metric is the Bilingual Evaluation Understudy (BLEU) score. Originally developed for evaluating machine translation, BLEU is also used for assessing text summarization models.

The BLEU score measures how many words or phrases in the machine-generated summary match those in a reference summary created by a human. Scores range from 0 to 1, with 1 indicating a perfect match with the reference. Although the BLEU score is not perfect and does not account for all aspects of text quality (such as coherence or style), it provides a quantitative way to compare different summarization models or to evaluate improvements to a single model over time. Check this tutorial for more details about the BLEU score in Python.
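
To give a feel for how the score is computed, here is a simplified sketch of BLEU. It assumes a single reference, whitespace tokenization, n-grams up to length 2, and no smoothing, so its numbers will not match a full implementation; the function names are made up for this example:

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return all contiguous n-grams of a token list as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def simple_bleu(candidate, reference, max_n=2):
    """Simplified single-reference BLEU on whitespace tokens (no smoothing)."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = Counter(ngrams(cand, n))
        ref_counts = Counter(ngrams(ref, n))
        # Clipped matches: an n-gram counts at most as often as it occurs in the reference.
        overlap = sum(min(count, ref_counts[gram]) for gram, count in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # without smoothing, one zero precision zeroes the whole score
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty discourages candidates shorter than the reference.
    bp = 1.0 if len(cand) >= len(ref) else math.exp(1 - len(ref) / len(cand))
    # Geometric mean of the n-gram precisions, scaled by the brevity penalty.
    return bp * math.exp(sum(log_precisions) / max_n)
```

An identical candidate and reference score 1.0, and a candidate sharing no words with the reference scores 0.0, which matches the 0 to 1 range described above.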

Now for the most exciting part. The parameters passed to the model.generate() method are:

- max_length: The maximum number of tokens to generate. We specified 150; you can change that if you want.

- min_length: The minimum number of tokens to generate. If you look closely at the tensor output, you'll count a total of 41 tokens, so it respected the minimum of 40 that we specified. Note that this also applies if you switch to another task, such as English to German translation.

- length_penalty: An exponential penalty on the sequence length, used with beam search. Each hypothesis score is divided by its length raised to this power, so increasing the value favors longer output text.

- num_beams: Setting this above 1 makes the model use beam search instead of greedy search. With num_beams set to 4, the model keeps the 4 most likely hypotheses at each time step (greedy search keeps only 1) and finally chooses the one with the highest overall probability.

- early_stopping: We set it to True so that generation stops as soon as num_beams complete hypotheses, meaning ones that have reached the end-of-sequence (EOS) token, have been found.
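
The difference between greedy search and beam search can be seen on a toy example. The sketch below does not use transformers at all: NEXT_TOKEN_PROBS is a made-up probability table standing in for a model, and the beam_search function is a bare-bones illustration that ignores length_penalty and the other generation settings:

```python
import math

# Hypothetical toy "model": probability of the next token given the previous one.
NEXT_TOKEN_PROBS = {
    "<s>": {"A": 0.6, "B": 0.4},
    "A": {"X": 0.55, "Y": 0.45},
    "B": {"Z": 0.9, "W": 0.1},
    "X": {"</s>": 1.0},
    "Y": {"</s>": 1.0},
    "Z": {"</s>": 1.0},
    "W": {"</s>": 1.0},
}


def beam_search(num_beams, max_steps=5):
    """Return (tokens, log_prob) of the best finished hypothesis."""
    beams = [(["<s>"], 0.0)]  # each beam: (tokens so far, summed log-probability)
    finished = []
    for _ in range(max_steps):
        candidates = []
        for tokens, score in beams:
            for token, prob in NEXT_TOKEN_PROBS[tokens[-1]].items():
                candidates.append((tokens + [token], score + math.log(prob)))
        # Keep only the num_beams most likely hypotheses at this step.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:num_beams]:
            # Hypotheses that produced the end token are set aside as finished.
            (finished if tokens[-1] == "</s>" else beams).append((tokens, score))
        if not beams:
            break
    return max(finished, key=lambda c: c[1])


# num_beams=1 is greedy search: only the single best token is kept at each step.
greedy_tokens, greedy_score = beam_search(num_beams=1)
beam_tokens, beam_score = beam_search(num_beams=4)
```

Here greedy search commits to "A" because it is the most likely first token and ends with a sequence probability of 0.6 × 0.55 = 0.33, while beam search also keeps "B" alive and finds the sequence through "B" and "Z" with probability 0.4 × 0.9 = 0.36.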

We then use the decode() method from the tokenizer to convert the tensor back to human-readable text.

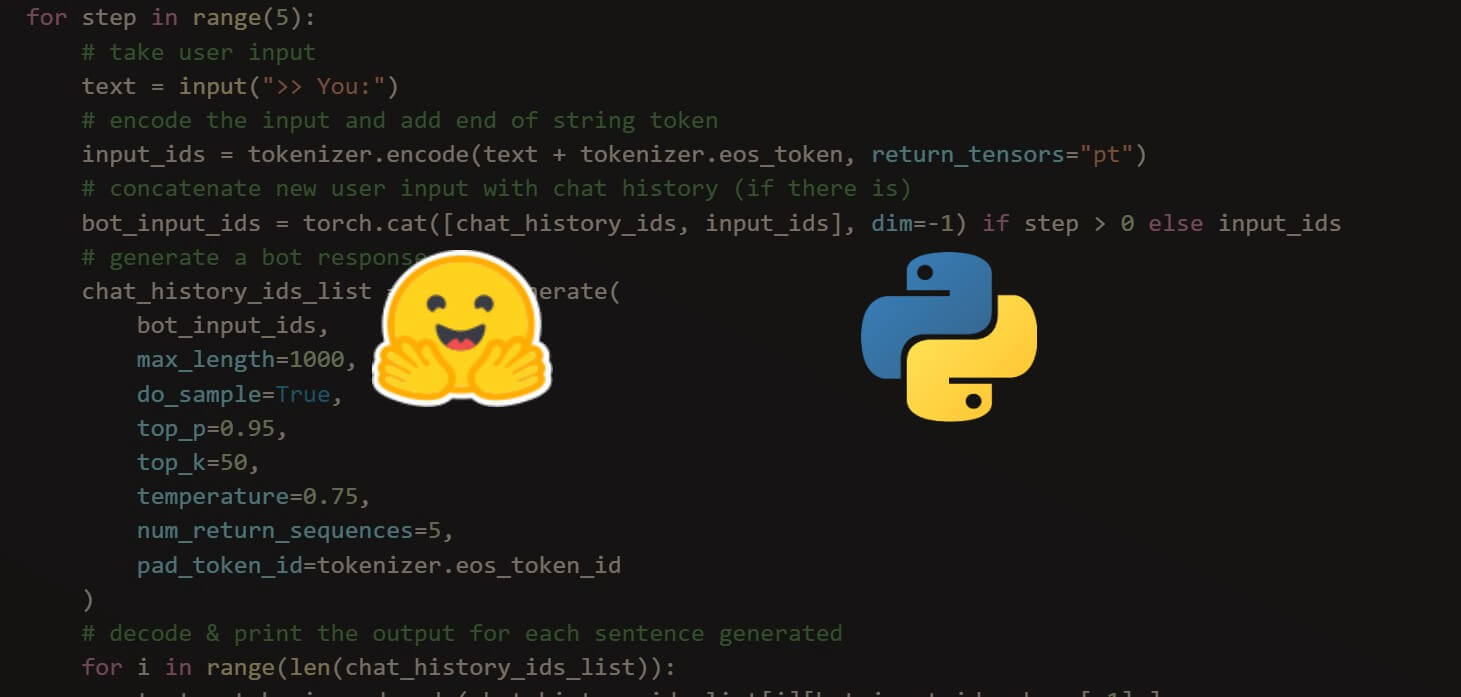

Learn also: Conversational AI Chatbot with Transformers in Python.

Conclusion

There are a lot of other parameters to tweak in the model.generate() method. I highly encourage you to check this tutorial from the HuggingFace blog.

Alright, that's it for this tutorial. You've learned two ways to use HuggingFace's transformers library to perform text summarization. Check out the documentation here.

Check the complete code of the tutorial here.

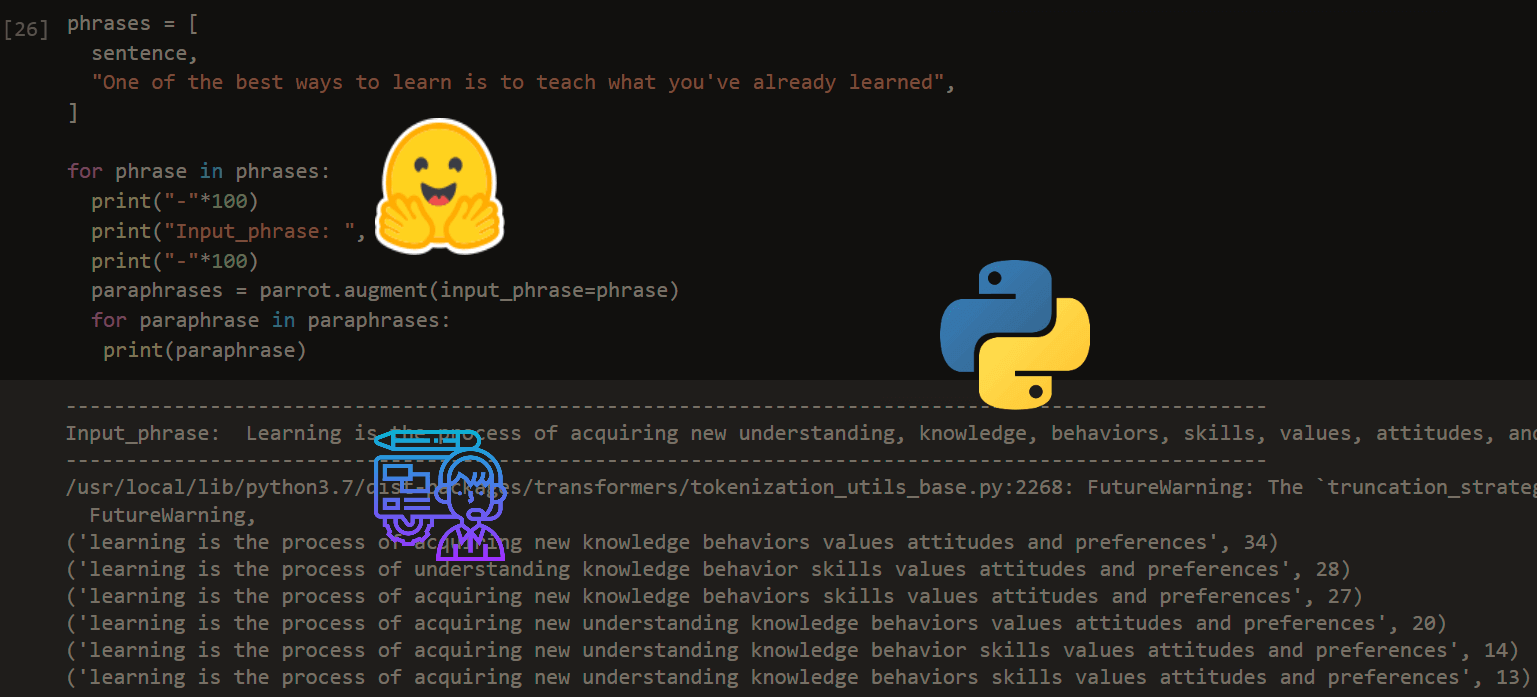

Related: How to Paraphrase Text using Transformers in Python.

Happy Learning ♥
