Search results change every day. So do review scores. If you want to stay on top of what Google shows for your brand, business, or competitors, Python can help. You can build a scraper that checks Google search results or review ratings and keeps track of changes over time.

This is especially useful for SEO, online reputation, and review monitoring. It’s also a great way to get hands-on with Python.

Let’s walk through a simple guide on how to scrape search results and reviews, refresh that data, and track updates.

Why This Matters

Search rankings change every time Google updates its index. Your page might show up at #1 today and be gone tomorrow.

Reviews also change fast. A restaurant could go from 4.8 to 3.9 stars in a week due to review bombing. A bad news story can send negative articles to the top of search.

By scraping and logging data daily or weekly, you can:

- Spot rising trends

- Catch bad content early

- Measure review changes

- Build alerts when something major shifts

Review management is also tied to reputation. If spammy reviews drag your score down, you may want to investigate, or try to have them removed using the platform’s own tools.

Tools You’ll Need

To follow along, make sure you have:

- Python 3.x installed

- The requests, beautifulsoup4, and pandas libraries

- A basic understanding of HTML and CSS selectors

- Optionally: schedule or cron for automation

To install the required packages:

    $ pip install requests beautifulsoup4 pandas

Scraping Google Search Results

Let’s start by grabbing the top search results for a query:

    import requests
    from bs4 import BeautifulSoup

    def scrape_google(query):
        headers = {"User-Agent": "Mozilla/5.0"}
        # Pass the query via params so requests URL-encodes it
        response = requests.get(
            "https://www.google.com/search",
            params={"q": query, "hl": "en"},
            headers=headers,
            timeout=10,
        )
        soup = BeautifulSoup(response.text, "html.parser")
        results = []
        # 'tF2Cxc' is the result-container class this guide relies on; Google changes its markup often
        for g in soup.find_all('div', class_='tF2Cxc'):
            title = g.find('h3')
            anchor = g.find('a')
            # Skip blocks that lack a title or a link instead of crashing
            if title and anchor and anchor.get('href'):
                results.append({"title": title.text, "link": anchor['href']})
        return results

    # Try it with:
    for result in scrape_google("best pizza in Chicago"):
        print(result)

This will print the current top results for that search.
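Queries often contain spaces or characters such as & that have a special meaning in a URL, so they should be percent-encoded before they reach Google. Below is a minimal sketch using only the standard library; build_search_url is a hypothetical helper name, not part of any library.

```python
from urllib.parse import urlencode

def build_search_url(query, lang="en"):
    """Return a Google search URL with the query safely encoded.

    build_search_url is an illustrative helper, not part of any library.
    """
    # urlencode percent-encodes reserved characters and turns spaces into '+'
    return "https://www.google.com/search?" + urlencode({"q": query, "hl": lang})

print(build_search_url("fish & chips near me"))
# https://www.google.com/search?q=fish+%26+chips+near+me&hl=en
```

The resulting string can be passed to requests.get directly. Alternatively, requests accepts a params dictionary and performs the same encoding for you.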

Scraping Google Reviews (Using Google Maps URLs)

Google reviews are tougher, but you can grab basic info from a Google Maps business page.

You’ll need a direct URL to the place’s Google Maps page. The review content is rendered by JavaScript, but the page source includes enough info for scraping average ratings.

    def scrape_review_summary(place_url):
        headers = {"User-Agent": "Mozilla/5.0"}
        response = requests.get(place_url, headers=headers, timeout=10)
        # Look for a phrase starting with "Rated" in the raw page source
        if "Rated" in response.text:
            # Skip past "Rated " (6 characters) and take the next 4 characters
            start = response.text.find("Rated") + 6
            rating = response.text[start:start+4].strip()
            return rating
        else:
            return "Not Found"

It’s basic, but enough for tracking the average review score over time.
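Taking a fixed four-character slice after "Rated" works only when the rating has exactly the expected width. A regular expression is a little more forgiving. The sketch below assumes the page text contains a phrase such as "Rated 4.6"; that phrase and the helper name extract_rating are assumptions for illustration, and the real markup may differ.

```python
import re

# Matches "Rated" followed by a number such as 4, 4.6 or 4,6
RATING_PATTERN = re.compile(r"Rated\s+(\d(?:[.,]\d)?)")

def extract_rating(page_text):
    """Return the first rating found as a float, or None if there is none."""
    match = RATING_PATTERN.search(page_text)
    if match is None:
        return None
    # Some locales use a decimal comma; normalise it before converting
    return float(match.group(1).replace(",", "."))

# Hypothetical snippet of page source, for illustration only
print(extract_rating('aria-label="Rated 4.6 out of 5"'))
```

Returning None instead of a string makes it easy to skip missing values before they are logged or converted to numbers.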

You can also use browser automation tools like Selenium or Playwright to get full review text and metadata. That requires more setup.

Save and Compare Results Over Time

Once you have scraped results, save them into a CSV file.

    import pandas as pd
    from datetime import datetime

    def save_results(query, results):
        today = datetime.now().strftime("%Y-%m-%d")
        df = pd.DataFrame(results)
        df['date'] = today
        filename = f"{query.replace(' ', '_')}_results.csv"
        try:
            existing = pd.read_csv(filename)
            updated = pd.concat([existing, df])
        except FileNotFoundError:
            updated = df
        updated.to_csv(filename, index=False)

Now, every time you run the scraper, it will append the new data with today’s date.

You can do the same with review scores to track rating changes.
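For review scores, one dated row per run is enough. The sketch below assumes a two-column log (date, rating); the function name save_rating and the file name are illustrative. It uses the standard csv module in append mode, which is a deliberate swap from the pandas read-and-rewrite approach, since only one row is added each time.

```python
import csv
import os
from datetime import date

def save_rating(filename, rating, today=None):
    """Append one dated rating to a CSV log, creating the file if needed."""
    # Allow the date to be passed in (useful for testing); default to today
    today = today or date.today().isoformat()
    is_new = not os.path.exists(filename)
    with open(filename, "a", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        if is_new:
            # Write the header only once, when the file is first created
            writer.writerow(["date", "rating"])
        writer.writerow([today, rating])

# Hypothetical file name, for illustration only
save_rating("pizza_place_ratings.csv", 4.6)
```

Keeping the column named rating means a later comparison step can read the file back with pandas and find the value under that name.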

Schedule It to Run Daily

You can automate this with Python’s schedule package or a cron job.

Here’s an example using schedule:

    import schedule
    import time

    def job():
        query = "python programming tutorial"
        results = scrape_google(query)
        save_results(query, results)
        print("Scraped and saved.")

    schedule.every().day.at("10:00").do(job)

    while True:
        schedule.run_pending()
        time.sleep(1)

Now it runs once a day at 10 AM and stores the data.
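A long-running loop like this stops as soon as the job raises, for example on a network timeout, because schedule does not catch exceptions raised inside jobs. One way to keep the loop alive is to wrap the job. The decorator below is a sketch; catch_errors and flaky_job are hypothetical names.

```python
import functools
import logging

def catch_errors(job_func):
    """Decorator: log any exception raised by a job instead of propagating it."""
    @functools.wraps(job_func)
    def wrapper(*args, **kwargs):
        try:
            return job_func(*args, **kwargs)
        except Exception:
            # logging.exception records the message plus the full traceback
            logging.exception("Job %s failed; it will run again next time", job_func.__name__)
            return None
    return wrapper

@catch_errors
def flaky_job():
    # Stand-in for a scrape that hits a network error
    raise ConnectionError("simulated network failure")

flaky_job()  # logs the traceback and returns None instead of raising
```

Decorating the real job function the same way lets a single failed scrape be logged while the scheduler keeps running for the next day.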

Spot Changes in Rankings or Ratings

Once you’ve logged some data, you can compare it easily.

Here’s how to compare changes in Google search positions:

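    def track_changes(filename):
        df = pd.read_csv(filename)
        # Rank = position within each day's results (1 = top), not the CSV row number
        df['rank'] = df.groupby('date').cumcount() + 1
        dates = sorted(df['date'].unique())
        if len(dates) < 2:
            print("Need at least two days of data to compare.")
            return
        recent = df[df['date'] == dates[-1]]
        previous = df[df['date'] == dates[-2]]
        for _, row in recent.iterrows():
            title = row['title']
            old = previous.loc[previous['title'] == title, 'rank']
            old_rank = int(old.min()) if not old.empty else "unranked"
            print(f"{title}: Moved from {old_rank} to {row['rank']}")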
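Position within a day's results is what matters for ranking, so it helps to number each day's rows from 1 before comparing. The sketch below works on plain dictionaries such as those returned by csv.DictReader. It assumes rows were saved top result first and that dates use the YYYY-MM-DD format, so they sort correctly as text. rank_changes is an illustrative name.

```python
def rank_changes(rows):
    """Compare the two most recent dates.

    Returns a list of (title, old_rank, new_rank) tuples, where old_rank
    is None for titles that were absent on the previous date.
    """
    # Group titles by date, preserving the saved order (top result first)
    by_date = {}
    for row in rows:
        by_date.setdefault(row["date"], []).append(row["title"])

    dates = sorted(by_date)
    if len(dates) < 2:
        return []  # nothing to compare yet

    # Map each previous title to its 1-based position (first occurrence wins)
    prev_rank = {}
    for pos, title in enumerate(by_date[dates[-2]], start=1):
        prev_rank.setdefault(title, pos)

    return [
        (title, prev_rank.get(title), pos)
        for pos, title in enumerate(by_date[dates[-1]], start=1)
    ]

# Made-up sample data, for illustration only
sample = [
    {"date": "2024-01-01", "title": "A"},
    {"date": "2024-01-01", "title": "B"},
    {"date": "2024-01-02", "title": "B"},
    {"date": "2024-01-02", "title": "C"},
]
print(rank_changes(sample))  # [('B', 2, 1), ('C', None, 2)]
```

A None in the middle position marks a page that is new to the results, which is often the most interesting change to look at.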

Or track review rating changes:

    def compare_ratings(file):
        df = pd.read_csv(file)
        last_two = df.tail(2)
        if len(last_two) == 2:
            old = float(last_two.iloc[0]['rating'])
            new = float(last_two.iloc[1]['rating'])
            diff = new - old
            # Format to one decimal to hide floating-point noise (e.g. -0.8999999)
            print(f"Rating changed by {diff:+.1f}")
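The same difference can drive a simple alert when a score falls sharply. The sketch below is one possible shape for that check; the function name rating_drop_alert and the default threshold of 0.3 stars are arbitrary assumptions, to be tuned to your own tolerance.

```python
def rating_drop_alert(old, new, threshold=0.3):
    """Return an alert message if the rating fell by at least `threshold`, else None."""
    # Round to two decimals so floating-point noise does not affect the comparison
    drop = round(old - new, 2)
    if drop >= threshold:
        return f"Rating dropped by {drop} (from {old} to {new})"
    return None

print(rating_drop_alert(4.8, 3.9, threshold=0.5))
# Rating dropped by 0.9 (from 4.8 to 3.9)
```

The returned message could be printed, written to a log, or handed to whatever notification channel your team already uses.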

Use Cases for This Script

- Monitor your own business reputation

- Track a competitor’s reviews and visibility

- Measure SEO progress for specific keywords

- Watch for spammy content that affects your search results

- Alert your team if your Google score drops suddenly

This is especially useful if you’re working to clean up your brand or trying to delete bad reviews that hurt your trust score.

Final Thoughts

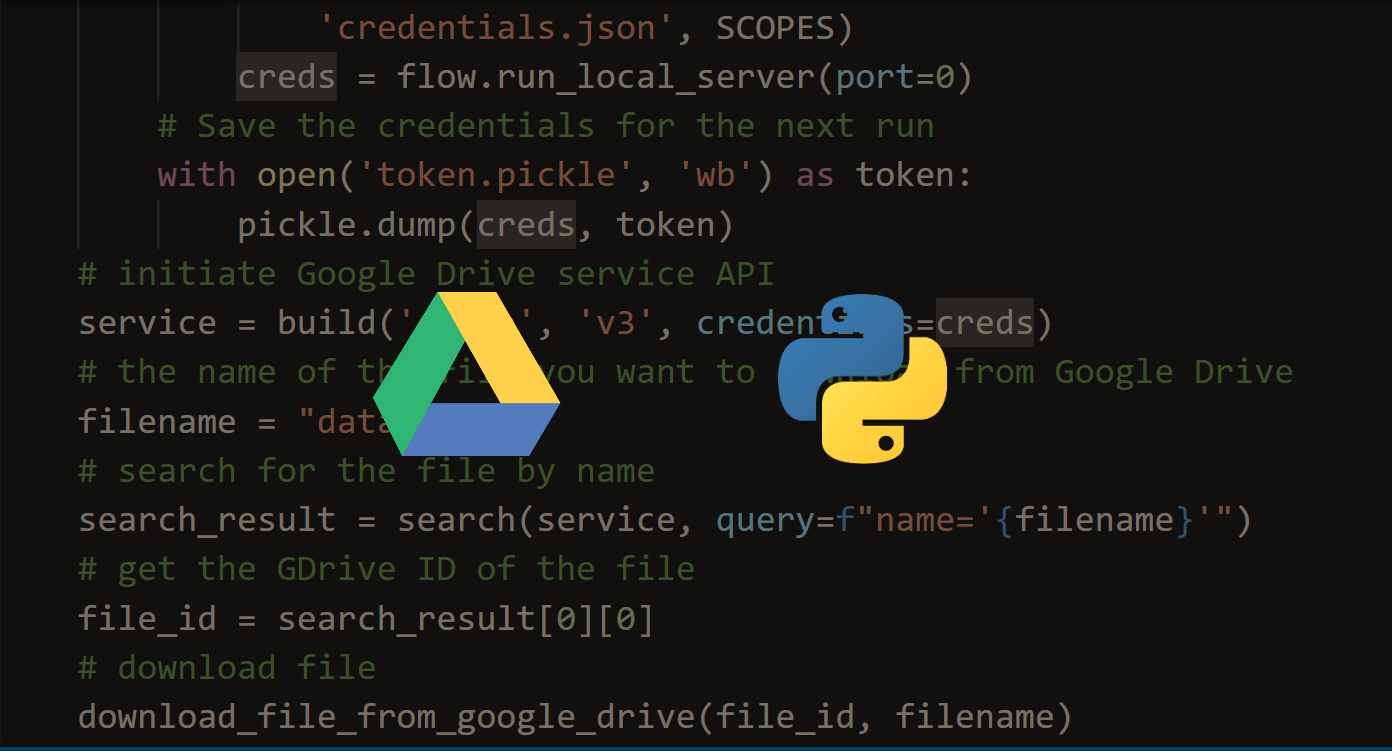

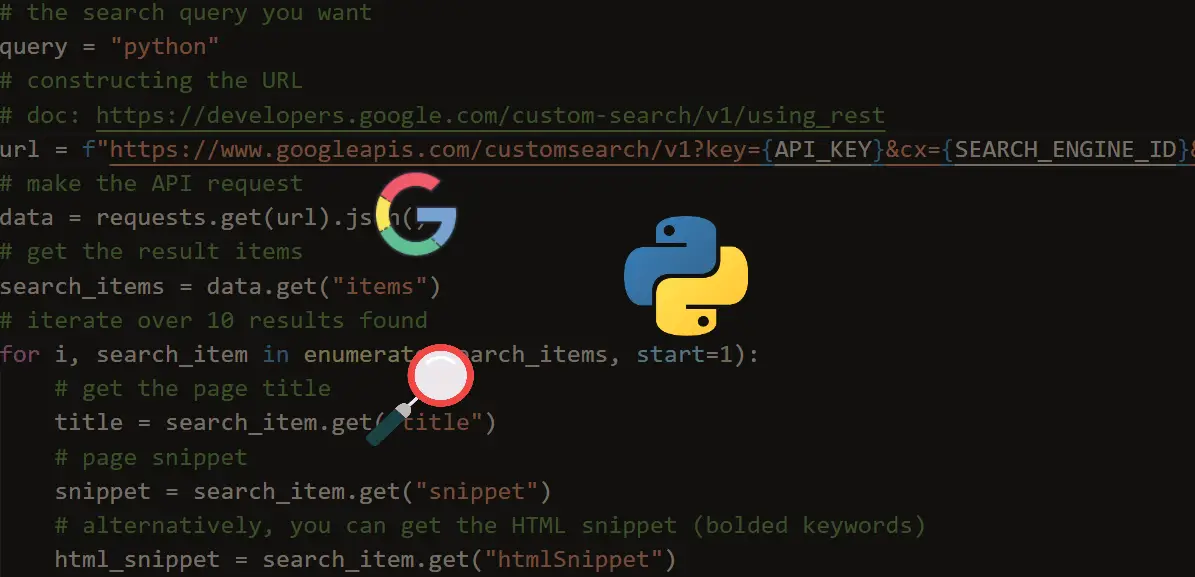

Note that scraping Google search results directly, as shown here, can be unreliable because Google changes its markup frequently, and it may violate Google's Terms of Service. The recommended, more stable approach is the official Google Custom Search Engine (CSE) API, officially named the Custom Search JSON API, which we explain how to use in a dedicated article.
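As a rough sketch of that approach: the Custom Search JSON API is queried over HTTPS with an API key and a search engine ID (cx), and it returns JSON whose items entries carry a title and a link. The helper names below are hypothetical, and the key and cx values are placeholders you would obtain from your own Google account.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

CSE_ENDPOINT = "https://www.googleapis.com/customsearch/v1"

def build_cse_url(query, api_key, cx):
    """Build the request URL; api_key and cx come from your own Google project."""
    return f"{CSE_ENDPOINT}?{urlencode({'key': api_key, 'cx': cx, 'q': query})}"

def parse_cse_items(payload):
    """Reduce the JSON response to the same title/link shape used by the scraper."""
    return [
        {"title": item.get("title"), "link": item.get("link")}
        for item in payload.get("items", [])  # 'items' is absent when nothing matches
    ]

def search(query, api_key, cx):
    """Fetch and parse one page of results (requires network access and valid credentials)."""
    with urlopen(build_cse_url(query, api_key, cx), timeout=10) as resp:
        return parse_cse_items(json.load(resp))

# Placeholder credentials, for illustration only
print(build_cse_url("best pizza in Chicago", "YOUR_API_KEY", "YOUR_CX_ID"))
```

Because parse_cse_items returns dictionaries with title and link keys, its output can be logged in the same CSV format as the scraped results.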

Use Python to gather the data. Automate it to stay consistent. Watch how your online presence changes, and take action when things start to slip.

Reputation isn’t static—and neither are search results. Track them like you track your goals.
