
Paraphrasing is the process of expressing someone else's ideas in your own words. To paraphrase a text, you rewrite it without changing its meaning.

In this tutorial, we will explore different pre-trained transformer models for automatically paraphrasing text using the Hugging Face transformers library in Python.

Note that if you want to just paraphrase your text, then there are online tools for that, such as the QuestGenius text paraphraser.

To get started, let's install the required libraries first:

```
$ pip install transformers sentencepiece sacremoses
```

Importing everything from the transformers library:

```python
from transformers import *
```

Pegasus Transformer

In this section, we'll use the Pegasus transformer architecture model that was fine-tuned for paraphrasing instead of summarization. To instantiate the model, we need to use PegasusForConditionalGeneration as it's a form of text generation:

```python
model = PegasusForConditionalGeneration.from_pretrained("tuner007/pegasus_paraphrase")
tokenizer = PegasusTokenizerFast.from_pretrained("tuner007/pegasus_paraphrase")
```

Next, let's make a general function that takes a model, its tokenizer, and the target sentence, and returns the paraphrased text:

```python
def get_paraphrased_sentences(model, tokenizer, sentence, num_return_sequences=5, num_beams=5):
    # tokenize the text into a batch of token IDs
    inputs = tokenizer([sentence], truncation=True, padding="longest", return_tensors="pt")
    # generate the paraphrased sentences
    outputs = model.generate(
        **inputs,
        num_beams=num_beams,
        num_return_sequences=num_return_sequences,
    )
    # decode the generated sentences using the tokenizer to get them back to text
    return tokenizer.batch_decode(outputs, skip_special_tokens=True)
```

We also add the possibility of generating multiple paraphrased sentences by passing num_return_sequences to the model.generate() method.

We also set num_beams so that the paraphrases are generated using beam search. Setting it to 5 makes the model keep the five most likely hypotheses at each time step and, at the end, return the ones with the highest overall probability.
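To make the idea of beam search more concrete, here is a minimal, self-contained sketch on a toy next-token table. It is not how model.generate() is implemented; the `TOY_MODEL` table, its probabilities, and the `beam_search` helper are all hypothetical and exist only for illustration.

```python
import math

# Hypothetical toy "language model": maps the previous token to the
# probabilities of the next token. The numbers are made up for illustration.
TOY_MODEL = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.55, "dog": 0.45},
    "a": {"cat": 0.9, "dog": 0.1},
    "cat": {"</s>": 1.0},
    "dog": {"</s>": 1.0},
}


def beam_search(num_beams, num_return_sequences, max_steps=5):
    # each hypothesis is a pair: (list of tokens, cumulative log-probability)
    beams = [(["<s>"], 0.0)]
    for _ in range(max_steps):
        candidates = []
        for tokens, score in beams:
            if tokens[-1] == "</s>":
                # finished hypotheses are carried over unchanged
                candidates.append((tokens, score))
                continue
            for token, prob in TOY_MODEL[tokens[-1]].items():
                candidates.append((tokens + [token], score + math.log(prob)))
        # keep only the num_beams most likely hypotheses at this time step
        candidates.sort(key=lambda pair: pair[1], reverse=True)
        beams = candidates[:num_beams]
    # drop the start/end markers and return the best hypotheses as text
    return [
        " ".join(t for t in tokens if t not in ("<s>", "</s>"))
        for tokens, _ in beams[:num_return_sequences]
    ]


print(beam_search(num_beams=1, num_return_sequences=1))  # ['the cat']
print(beam_search(num_beams=2, num_return_sequences=2))  # ['a cat', 'the cat']
```

With a single beam (greedy decoding), the search commits to "the" early and ends with "the cat" (probability 0.33). With two beams, the less likely first word "a" is kept alive long enough to discover "a cat" (probability 0.36), which has the higher overall probability.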

I highly suggest you check this blog post to learn more about the parameters of the model.generate() method.

Let's use the function now:

```python
sentence = "Learning is the process of acquiring new understanding, knowledge, behaviors, skills, values, attitudes, and preferences."
get_paraphrased_sentences(model, tokenizer, sentence, num_beams=10, num_return_sequences=10)
```

We set num_beams to 10 and prompt the model to generate ten different sentences; here is the output:

```
['Learning involves the acquisition of new understanding, knowledge, behaviors, skills, values, attitudes, and preferences.',
 'Learning is the acquisition of new understanding, knowledge, behaviors, skills, values, attitudes, and preferences.',
 'The process of learning is the acquisition of new understanding, knowledge, behaviors, skills, values, attitudes, and preferences.',
 'Gaining new understanding, knowledge, behaviors, skills, values, attitudes, and preferences is the process of learning.',
 'New understanding, knowledge, behaviors, skills, values, attitudes, and preferences are acquired through learning.',
 'Learning is the acquisition of new understanding, knowledge, behaviors, skills, values, attitudes and preferences.',
 'The process of learning is the acquisition of new understanding, knowledge, behaviors, skills, values, attitudes and preferences.',
 'New understanding, knowledge, behaviors, skills, values, attitudes, and preferences can be acquired through learning.',
 'New understanding, knowledge, behaviors, skills, values, attitudes, and preferences are what learning is about.',
 'Gaining new understanding, knowledge, behaviors, skills, values, attitudes, and preferences is a process of learning.']
```

Outstanding results! Most of the generations are accurate and can be used. You can try your own sentences and see the results for yourself.

You can check the model card here.

T5 Transformer

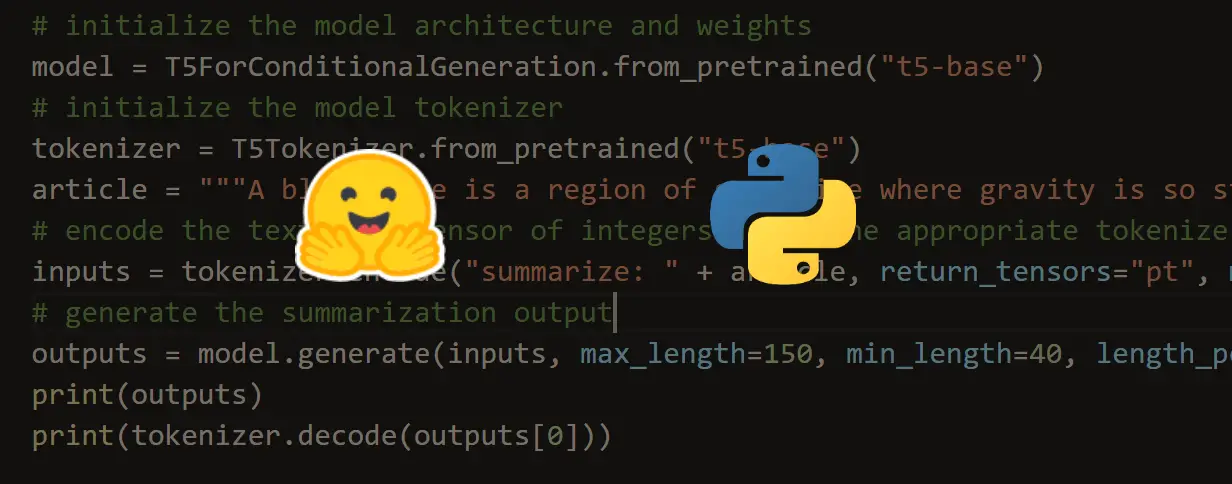

This section will explore the T5 architecture model that was fine-tuned on the PAWS dataset. PAWS consists of 108,463 human-labeled and 656k noisily labeled pairs. Let's load the model and the tokenizer:

```python
tokenizer = AutoTokenizer.from_pretrained("Vamsi/T5_Paraphrase_Paws")
model = AutoModelForSeq2SeqLM.from_pretrained("Vamsi/T5_Paraphrase_Paws")
```

Let's use our previously defined function:

```python
get_paraphrased_sentences(model, tokenizer, "One of the best ways to learn is to teach what you've already learned")
```

Output:

```
["One of the best ways to learn is to teach what you've already learned.",
 'One of the best ways to learn is to teach what you have already learned.',
 'One of the best ways to learn is to teach what you already know.',
 'One of the best ways to learn is to teach what you already learned.',
 "One of the best ways to learn is to teach what you've already learned."]
```

These are promising results too. However, if you get some not-so-good paraphrased text, you can prepend "paraphrase: " to the input text, as T5 was intended for multiple text-to-text NLP tasks such as machine translation, text summarization, and more, with the task indicated by a text prefix. It was pre-trained and fine-tuned like that.

You can check the model card here.
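As a small illustration of the "paraphrase: " prefix mentioned above, the following sketch adds the prefix to an input sentence before it is handed to the model. The `with_task_prefix` helper is a hypothetical name introduced here for illustration; it is not part of the transformers library.

```python
def with_task_prefix(sentence, prefix="paraphrase: "):
    """Prepend a T5-style task prefix unless the sentence already starts with it."""
    if sentence.startswith(prefix):
        return sentence
    return prefix + sentence


prefixed = with_task_prefix("One of the best ways to learn is to teach what you've already learned")
print(prefixed)

# The prefixed text can then be passed to the function defined earlier, e.g.:
# get_paraphrased_sentences(model, tokenizer, prefixed)
```

Whether the prefix improves the results depends on the input, so it is worth comparing the outputs with and without it.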

Parrot Paraphraser

Finally, let's use a fine-tuned T5 model called Parrot. Parrot is an augmentation framework built to speed up the training of NLU models. The author of the fine-tuned model wrote a small library to perform paraphrasing. Let's install it:

```
$ pip install git+https://github.com/PrithivirajDamodaran/Parrot_Paraphraser.git
```

Importing it and initializing the model:

```python
from parrot import Parrot

parrot = Parrot()
```

This will download the models' weights and the tokenizer. Give it some time; it'll finish in a few seconds to several minutes, depending on your Internet connection.

This library uses more than one model. It uses one model for paraphrasing, one for calculating adequacy, another for calculating fluency, and the last for diversity.
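To make the division of labor between these models more concrete, here is a minimal sketch of how such scores could be combined: candidates are first filtered by adequacy and fluency, then ranked by diversity. This is not Parrot's actual code; the `Candidate` class, the `select_paraphrases` function, the threshold values, and all the scores below are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    adequacy: float   # how well the meaning is preserved (made-up value)
    fluency: float    # how natural the text reads (made-up value)
    diversity: float  # how much it differs from the input (made-up value)


def select_paraphrases(candidates, adequacy_threshold=0.9, fluency_threshold=0.9):
    # drop candidates that change the meaning or read poorly
    kept = [
        c for c in candidates
        if c.adequacy >= adequacy_threshold and c.fluency >= fluency_threshold
    ]
    # rank the survivors so the most diverse paraphrase comes first
    return sorted(kept, key=lambda c: c.diversity, reverse=True)


candidates = [
    Candidate("teaching what you know is a great way to learn", 0.95, 0.97, 30.0),
    Candidate("one of the best ways to learn is to teach what you know", 0.98, 0.99, 12.0),
    Candidate("learn best teach way you already", 0.60, 0.40, 35.0),
]

for candidate in select_paraphrases(candidates):
    print(candidate.text, candidate.diversity)
```

The third candidate has the highest diversity score but is discarded because it fails the adequacy and fluency checks, which is the reason for scoring those properties separately.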

Let's use the previous sentences and another one and see the results:

```python
phrases = [
    sentence,
    "One of the best ways to learn is to teach what you've already learned",
    "Paraphrasing is the process of coming up with someone else's ideas in your own words"
]

for phrase in phrases:
    print("-"*100)
    print("Input_phrase: ", phrase)
    print("-"*100)
    paraphrases = parrot.augment(input_phrase=phrase)
    if paraphrases:
        for paraphrase in paraphrases:
            print(paraphrase)
```

With this library, we simply call the parrot.augment() method and pass the sentence as text; it returns several candidate paraphrased texts. Check the output:

```
----------------------------------------------------------------------------------------------------
Input_phrase: Learning is the process of acquiring new understanding, knowledge, behaviors, skills, values, attitudes, and preferences.
----------------------------------------------------------------------------------------------------
('learning is the process of acquiring new knowledge behaviors skills values attitudes and preferences', 27)
('learning is the process of acquiring new understanding knowledge behaviors skills values attitudes and preferences', 13)
----------------------------------------------------------------------------------------------------
Input_phrase: One of the best ways to learn is to teach what you've already learned
----------------------------------------------------------------------------------------------------
('one of the best ways to learn is to teach what you know', 29)
('one of the best ways to learn is to teach what you already know', 21)
('one of the best ways to learn is to teach what you have already learned', 15)
----------------------------------------------------------------------------------------------------
Input_phrase: Paraphrasing is the process of coming up with someone else's ideas in your own words
----------------------------------------------------------------------------------------------------
("paraphrasing is the process of coming up with a person's ideas in your own words", 23)
("paraphrasing is the process of coming up with another person's ideas in your own words", 23)
("paraphrasing is the process of coming up with another's ideas in your own words", 22)
("paraphrasing is the process of coming up with someone's ideas in your own words", 17)
("paraphrasing is the process of coming up with somebody else's ideas in your own words", 15)
("paraphrasing is the process of coming up with someone else's ideas in your own words", 12)
```

The number accompanying each sentence is the diversity score. The higher the value, the more the sentence differs from the original.
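Since each candidate comes back as a (text, score) tuple, the scores can be used to keep only the paraphrases that differ enough from the input. The following is a minimal sketch that assumes the tuple format shown in the output above; the `filter_by_diversity` helper and the `min_score` cutoff of 20 are hypothetical choices made for illustration.

```python
# (text, diversity score) tuples copied from the output above
paraphrases = [
    ("one of the best ways to learn is to teach what you know", 29),
    ("one of the best ways to learn is to teach what you already know", 21),
    ("one of the best ways to learn is to teach what you have already learned", 15),
]


def filter_by_diversity(paraphrases, min_score):
    """Keep the texts whose score is at least min_score, most diverse first."""
    ranked = sorted(paraphrases, key=lambda pair: pair[1], reverse=True)
    return [text for text, score in ranked if score >= min_score]


for text in filter_by_diversity(paraphrases, min_score=20):
    print(text)
```

A suitable cutoff depends on the use case: a low value keeps near-copies of the input, while a high value may leave no candidates at all.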

You can check the Parrot Paraphraser repository here.

Conclusion

Alright! That's it for the tutorial. Hopefully, you have explored the most valuable ways to perform automatic text paraphrasing using transformers and AI in general.

You can get the complete code here or the Colab notebook here.

Here are some other related NLP tutorials:

- Named Entity Recognition using Transformers and Spacy in Python.

- Fine-tuning BERT for Semantic Textual Similarity with Transformers in Python.

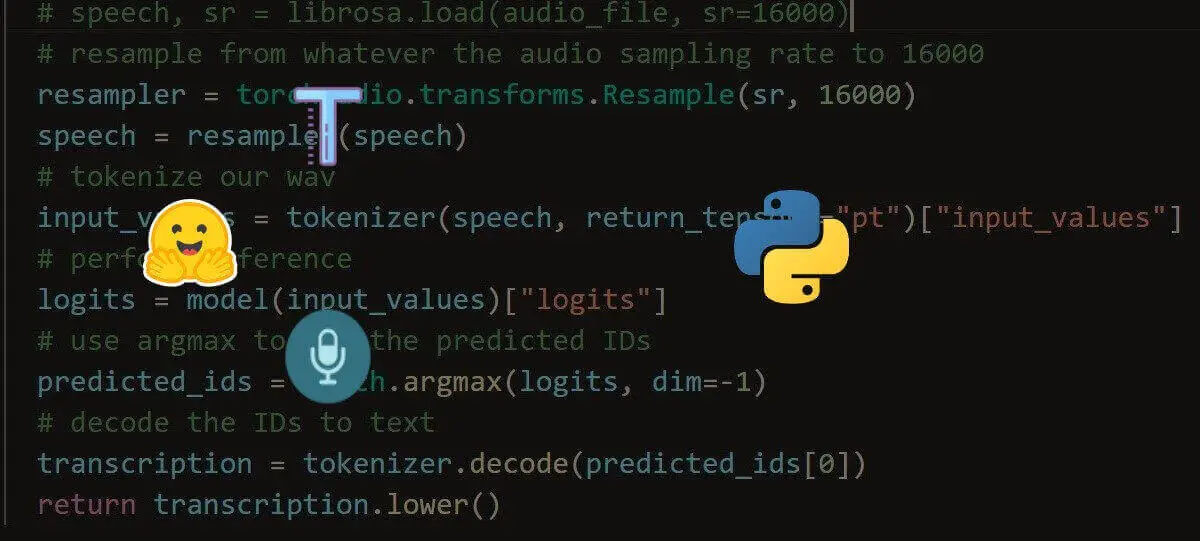

- Speech Recognition using Transformers in Python.

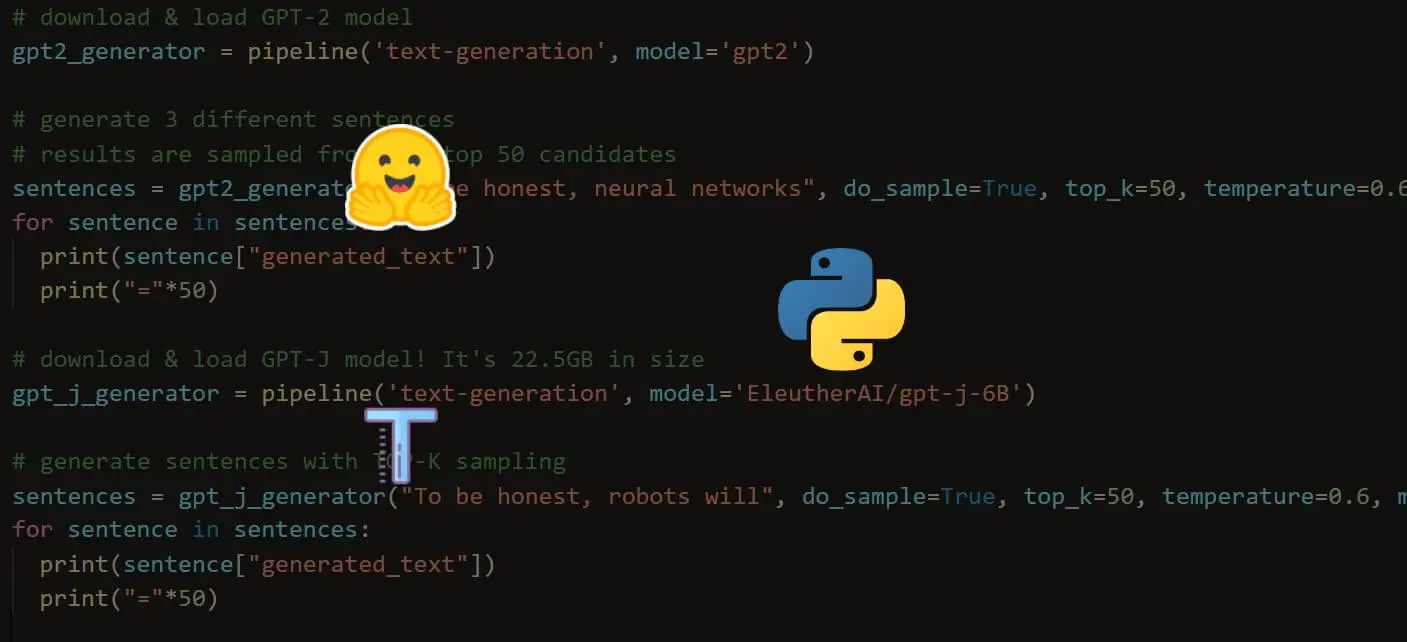

- Text Generation with Transformers in Python.

Happy learning ♥
