
Introduction

Imagine having the magical power to generate new images similar to any image you already have. Wouldn't that be great? You could use it for many things, like creating variations of an image or editing existing ones. Fortunately, you can do this with latent diffusion models!

In this tutorial, we will build our own image-to-image diffusion pipeline using a depth-to-image model, and then use an existing pipeline from the diffusers library. We'll start by manually building a custom pipeline in Python to make sure we understand how it works. After that, we'll use the ready-made pipeline provided by Hugging Face.

If you want to generate images from scratch using prompts, then check this tutorial.


Note: The code of this article can be found in this Google Colab notebook.

Before diving into the details, let's look at some examples of what we can achieve with these models.

Input Image:

Positive prompt: “World war, aesthetic”.

Negative prompt: “Bad looking, deformed, wholesome”.

Output:

The AI model reads the positive prompt and tries to generate the image it describes. The negative prompt tells the model what to avoid or leave out of the image.
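With classifier-free guidance (used throughout this tutorial), the model makes two noise predictions per step: one conditioned on the prompt and one "unconditional". A common way to support a negative prompt, and the approach the diffusers pipelines take, is to embed the negative prompt in place of the empty unconditional prompt. The pure-Python sketch below only assembles the list of texts that would be tokenized and embedded; the helper name build_guidance_texts is hypothetical and not part of any library.

```python
def build_guidance_texts(prompt, negative_prompt=None):
    """Hypothetical helper: return the texts to embed for classifier-free guidance.

    The first half holds the "unconditional" texts (empty strings, or the negative
    prompt if one is given); the second half holds the positive prompts, matching
    the [unconditional, conditional] order used when the embeddings are concatenated.
    """
    if isinstance(prompt, str):
        prompt = [prompt]
    if negative_prompt is None:
        uncond = [""] * len(prompt)
    elif isinstance(negative_prompt, str):
        uncond = [negative_prompt] * len(prompt)
    else:
        if len(negative_prompt) != len(prompt):
            raise ValueError("negative_prompt must match the number of prompts")
        uncond = list(negative_prompt)
    return uncond + list(prompt)


texts = build_guidance_texts("World war, aesthetic", "Bad looking, deformed, wholesome")
print(texts)
```

Without a negative prompt, the unconditional half falls back to empty strings, which is exactly what a pipeline without negative-prompt support embeds.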

Input Image:

Positive prompt: “Beautiful anime landscape”

Negative prompt: “Bad, deformed, ugly”

Output:

Aren’t these images just lovely?!

Latent diffusion models are powerful generative models. Inspired by the physical process of diffusion, they create an image by applying a series of denoising steps to randomly generated noise, working in a compressed latent space rather than directly on pixels. For a more detailed explanation of how latent diffusion models work, you can refer to this article.

Image-to-image models are AI models that translate an image from one domain to another. Diffusion models have made significant strides in this area. These models generally work by adding noise to the reference image during the forward diffusion process. Then, they perform the backward diffusion process to remove the noise and reconstruct an image in the target domain.
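The forward (noising) process has a closed form: given a clean latent x_0 and Gaussian noise ε, the noisy latent at timestep t is x_t = sqrt(ᾱ_t) x_0 + sqrt(1 − ᾱ_t) ε, where ᾱ_t is the cumulative product of (1 − β) over the noise schedule. The NumPy sketch below builds a "scaled linear" β schedule with the same beta_start, beta_end and 1000 training timesteps used for the scheduler later in this tutorial, and applies that formula. It is a simplified illustration of what a scheduler's add_noise step computes, not the diffusers code itself; the random arrays stand in for real latents.

```python
import numpy as np

def scaled_linear_betas(beta_start=0.00085, beta_end=0.012, num_train_timesteps=1000):
    # "scaled_linear": interpolate linearly in sqrt(beta) space, then square
    return np.linspace(beta_start ** 0.5, beta_end ** 0.5, num_train_timesteps) ** 2

def add_noise(x0, noise, t, alphas_cumprod):
    # closed-form forward diffusion: x_t = sqrt(a_bar_t) * x0 + sqrt(1 - a_bar_t) * noise
    a_bar = alphas_cumprod[t]
    return np.sqrt(a_bar) * x0 + np.sqrt(1.0 - a_bar) * noise

betas = scaled_linear_betas()
alphas_cumprod = np.cumprod(1.0 - betas)

rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 64, 64))     # stand-in for a clean image latent
noise = rng.standard_normal((4, 64, 64))  # Gaussian noise of the same shape

for t in (0, 500, 999):
    # weight that the clean latent keeps at timestep t
    print(t, round(float(np.sqrt(alphas_cumprod[t])), 4))
```

At the last timestep only a small fraction of x_0 survives, which is why starting the backward process from a late timestep lets the output drift far from the input.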

Depth-to-image models build on this concept by estimating a depth map for the input image and feeding it to the model as extra conditioning during diffusion, which helps the output preserve the structure of the original scene. For example, if an object is far back in the background, its pixels will have a higher depth value.

Limitations of the Model

However, these models are extremely difficult to train and require significant computational resources. Even running pre-trained models requires access to a capable GPU. Luckily, we will run the code in Google Colab, which provides free access to GPUs.

Code

Before we start writing code in Google Colab, make sure the GPU is enabled: go to Runtime, click Change runtime type, and select a GPU under Hardware accelerator.

Part 1: Manually Implementing the depth2img Pipeline using Pre-trained Models

Note: The code in this section is heavily inspired by the original diffusers library implementation. You can check it out at pipeline_stable_diffusion_depth2img.py.

Before we begin to write the core part of the code, let's first install and load the necessary libraries to run the model.

```python
%pip install --quiet --upgrade diffusers transformers scipy ftfy
%pip install --quiet --upgrade accelerate

import numpy as np
from tqdm import tqdm
from PIL import Image
import torch
from torch import autocast
from transformers import CLIPTextModel, CLIPTokenizer
from transformers import DPTForDepthEstimation, DPTFeatureExtractor
from diffusers import AutoencoderKL, UNet2DConditionModel
from diffusers.schedulers.scheduling_pndm import PNDMScheduler
```

Now, for our pipeline, we need helper methods for each of the following:

- Get text embeddings from a prompt

- Get image latents from an image (i.e., do the encoding process)

- Get image from image latents (i.e., do the decoding process)

- Get depth masks from an image

- Run the entire image pipeline

We already defined the first three methods in the previous tutorial, so we can extend the same class and only implement the methods that get the depth mask of our reference image and run the pipeline.

The code for the base class is as follows:

```python
class DiffusionPipeline:
    def __init__(self,
                 vae,
                 tokenizer,
                 text_encoder,
                 unet,
                 scheduler):
        self.vae = vae
        self.tokenizer = tokenizer
        self.text_encoder = text_encoder
        self.unet = unet
        self.scheduler = scheduler
        self.device = 'cuda' if torch.cuda.is_available() else 'cpu'

    def get_text_embeds(self, text):
        # tokenize the text
        text_input = self.tokenizer(text,
                                    padding='max_length',
                                    max_length=self.tokenizer.model_max_length,
                                    truncation=True,
                                    return_tensors='pt')
        # embed the text
        with torch.no_grad():
            text_embeds = self.text_encoder(text_input.input_ids.to(self.device))[0]
        return text_embeds

    def get_prompt_embeds(self, prompt):
        if isinstance(prompt, str):
            prompt = [prompt]
        # get conditional prompt embeddings
        cond_embeds = self.get_text_embeds(prompt)
        # get unconditional prompt embeddings (empty prompts)
        uncond_embeds = self.get_text_embeds([''] * len(prompt))
        # concatenate the above 2 embeds: unconditional first, then conditional
        prompt_embeds = torch.cat([uncond_embeds, cond_embeds])
        return prompt_embeds

    def decode_img_latents(self, img_latents):
        # undo the latent scaling applied during encoding
        img_latents = 1 / self.vae.config.scaling_factor * img_latents
        with torch.no_grad():
            img = self.vae.decode(img_latents).sample
        # map from [-1, 1] to [0, 1]
        img = (img / 2 + 0.5).clamp(0, 1)
        # (batch, channels, height, width) -> (batch, height, width, channels)
        img = img.cpu().permute(0, 2, 3, 1).float().numpy()
        return img

    def transform_img(self, img):
        # scale images to the range [0, 255] and convert to int
        img = (img * 255).round().astype('uint8')
        # convert to PIL Image objects
        img = [Image.fromarray(i) for i in img]
        return img

    def encode_img_latents(self, img, latent_timestep):
        if not isinstance(img, list):
            img = [img]
        img = np.stack([np.array(i) for i in img], axis=0)
        # scale images to the range [-1, 1]
        img = 2 * ((img / 255.0) - 0.5)
        img = torch.from_numpy(img).float().permute(0, 3, 1, 2)
        img = img.to(self.device)
        # encode images (no gradients are needed at inference time)
        with torch.no_grad():
            img_latents_dist = self.vae.encode(img)
        img_latents = img_latents_dist.latent_dist.sample()
        # scale the latents
        img_latents = self.vae.config.scaling_factor * img_latents
        # add noise to the latents
        noise = torch.randn_like(img_latents)
        img_latents = self.scheduler.add_noise(img_latents, noise, latent_timestep)
        return img_latents
```
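encode_img_latents and decode_img_latents/transform_img use mirror-image pixel scalings: uint8 pixels in [0, 255] are mapped to [-1, 1] before the VAE encoder, and decoder outputs in [-1, 1] are mapped back to [0, 1] and then to [0, 255]. The NumPy sketch below checks that the two mappings undo each other, with no VAE involved; to_model_range and to_pixel_range are hypothetical names used only for illustration.

```python
import numpy as np

def to_model_range(img_uint8):
    # same formula as encode_img_latents: [0, 255] -> [-1, 1]
    return 2 * ((img_uint8 / 255.0) - 0.5)

def to_pixel_range(img_model):
    # same steps as decode_img_latents + transform_img: [-1, 1] -> [0, 1] -> uint8 [0, 255]
    img01 = np.clip(img_model / 2 + 0.5, 0, 1)
    return (img01 * 255).round().astype("uint8")

pixels = np.array([[[0, 128, 255]]], dtype=np.uint8)  # one RGB pixel, shape (1, 1, 3)
scaled = to_model_range(pixels)
print(scaled)                  # values now lie in [-1, 1]
print(to_pixel_range(scaled))  # back to the original uint8 values
```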

Now we will define a new class and extend the previously defined class as follows:

```python
class Depth2ImgPipeline(DiffusionPipeline):
    def __init__(self,
                 vae,
                 tokenizer,
                 text_encoder,
                 unet,
                 scheduler,
                 depth_feature_extractor,
                 depth_estimator):
        super().__init__(vae, tokenizer, text_encoder, unet, scheduler)
        self.depth_feature_extractor = depth_feature_extractor
        self.depth_estimator = depth_estimator
```

Let us also add a method to this class to get a depth mask for an image:

```python
    def get_depth_mask(self, img):
        if not isinstance(img, list):
            img = [img]
        width, height = img[0].size
        # pre-process the input image and get its pixel values
        pixel_values = self.depth_feature_extractor(img, return_tensors="pt").pixel_values
        # move the inputs to wherever the depth estimator lives (CPU or GPU)
        pixel_values = pixel_values.to(self.depth_estimator.device)
        # use autocast for automatic mixed precision (AMP) inference
        with torch.no_grad(), autocast('cuda'):
            depth_mask = self.depth_estimator(pixel_values).predicted_depth
        # resize the depth mask to the latent resolution (1/8 of the image size)
        depth_mask = torch.nn.functional.interpolate(depth_mask.unsqueeze(1).float(),
                                                     size=(height // 8, width // 8),
                                                     mode='bicubic',
                                                     align_corners=False)
        # scale the mask to range [-1, 1]
        depth_min = torch.amin(depth_mask, dim=[1, 2, 3], keepdim=True)
        depth_max = torch.amax(depth_mask, dim=[1, 2, 3], keepdim=True)
        depth_mask = 2.0 * (depth_mask - depth_min) / (depth_max - depth_min) - 1.0
        depth_mask = depth_mask.to(self.device)
        # replicate the mask for classifier-free guidance
        depth_mask = torch.cat([depth_mask] * 2)
        return depth_mask
```

Here, we first use the feature extractor to preprocess the image (normalizing, rescaling, resizing, etc.) to get the final pixel values. These values are then passed to a pre-trained DPT depth estimation model. Since the model is quite large, we run it under automatic mixed precision (AMP) inference.

We then interpolate this depth mask down to 1/8 of the original image size using bicubic interpolation, so that it matches the spatial size of the VAE latents it will be concatenated with.

Note: Other interpolation modes would also work; bicubic is the mode used in the original diffusers implementation, and it produces a smooth mask.

We then scale the values of the depth mask to the range [-1, 1]. Also, since we are doing classifier-free guidance, we stack the same mask twice (once for the unconditional and once for the conditional latents).
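The normalization and duplication steps in get_depth_mask are easy to check in isolation. The NumPy sketch below uses a made-up 4×4 "depth" array in place of real DPT output; it applies the same per-image min-max scaling to [-1, 1] and then stacks two copies along the batch axis, one for the unconditional and one for the conditional branch.

```python
import numpy as np

def normalize_depth(depth):
    # depth has shape (batch, 1, height, width); scale each image independently to [-1, 1]
    d_min = depth.min(axis=(1, 2, 3), keepdims=True)
    d_max = depth.max(axis=(1, 2, 3), keepdims=True)
    return 2.0 * (depth - d_min) / (d_max - d_min) - 1.0

# toy depth map whose values grow from left to right (made-up numbers)
toy_depth = np.tile(np.arange(4, dtype=np.float32), (4, 1)).reshape(1, 1, 4, 4) * 10.0

depth_mask = normalize_depth(toy_depth)
# replicate for classifier-free guidance: [unconditional, conditional]
depth_mask = np.concatenate([depth_mask] * 2, axis=0)

print(depth_mask.shape)     # (2, 1, 4, 4)
print(depth_mask[0, 0, 0])  # first row of the normalized map
```

Like the original method, this divides by (max - min), so a perfectly flat depth map would need special handling.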

Now let's make a method to perform the backward diffusion process. This method is where we will pass the prompt embeddings and the depth mask we just created to get the output image:

```python
    def denoise_latents(self,
                        img,
                        prompt_embeds,
                        depth_mask,
                        strength,
                        num_inference_steps=50,
                        guidance_scale=7.5,
                        height=512, width=512):
        # clip the value of strength to ensure strength lies in [0, 1]
        strength = max(min(strength, 1), 0)
        # compute timesteps
        self.scheduler.set_timesteps(num_inference_steps)
        init_timestep = int(num_inference_steps * strength)
        t_start = num_inference_steps - init_timestep
        timesteps = self.scheduler.timesteps[t_start:]
        num_inference_steps = num_inference_steps - t_start
        # the first remaining timestep decides how much noise is added to the image
        latent_timestep = timesteps[:1].repeat(1)
        latents = self.encode_img_latents(img, latent_timestep)
        # use autocast for automatic mixed precision (AMP) inference
        with autocast('cuda'):
            for t in tqdm(timesteps):
                # duplicate the latents for classifier-free guidance
                latent_model_input = torch.cat([latents] * 2)
                # append the depth mask as an extra input channel
                latent_model_input = torch.cat([latent_model_input, depth_mask], dim=1)
                # predict noise residuals
                with torch.no_grad():
                    noise_pred = self.unet(latent_model_input, t, encoder_hidden_states=prompt_embeds)['sample']
                # separate predictions for unconditional and conditional outputs
                noise_pred_uncond, noise_pred_text = noise_pred.chunk(2)
                # perform guidance
                noise_pred = noise_pred_uncond + guidance_scale * (noise_pred_text - noise_pred_uncond)
                # remove the noise from the current sample i.e. go from x_t to x_{t-1}
                latents = self.scheduler.step(noise_pred, t, latents)['prev_sample']
        return latents
```

In the code above, strength controls how far the output may drift from the input image: the higher the strength, the more noise is added to the input latents and the more the output differs from the input. A strength of 1 adds the maximum amount of noise, so the output largely ignores the input image's appearance, although the depth mask still guides its layout.

num_inference_steps is the total number of diffusion steps. We compute the starting step, t_start, which equals (1 - strength) * num_inference_steps up to integer rounding. So the higher the strength, the lower t_start, and the more denoising steps actually run.
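To make this arithmetic concrete, here is a pure-Python sketch of the same computation used in denoise_latents (the helper name denoising_plan is hypothetical). It reports t_start and the number of steps for a few strength values with num_inference_steps=50.

```python
def denoising_plan(num_inference_steps, strength):
    # mirror denoise_latents: clip strength, then skip the first t_start steps
    strength = max(min(strength, 1), 0)
    init_timestep = int(num_inference_steps * strength)
    t_start = num_inference_steps - init_timestep
    steps_run = num_inference_steps - t_start
    return t_start, steps_run

for s in (0.1, 0.5, 0.8, 1.0):
    t_start, steps_run = denoising_plan(50, s)
    print(f"strength={s}: t_start={t_start}, steps run={steps_run}")
```

This counts steps the way the num_inference_steps variable in denoise_latents does; the loop itself iterates over scheduler.timesteps[t_start:], which for some schedulers (PNDM, for example) may contain one extra entry.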

We then encode the image and run the backward diffusion process on the resulting latents. In each step, we duplicate the latents from the previous step (because we are doing classifier-free guidance) and concatenate the depth masks to them. The rest of the process is unchanged: we pass this concatenated input to the U-Net, get the noise predictions, split them into the unconditional and text-conditioned predictions, combine them with classifier-free guidance into the overall noise prediction, and pass that to the scheduler to get the latents for the next timestep.
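The guidance step in the loop is a linear extrapolation: start from the unconditional prediction and move guidance_scale times along the direction of the text-conditioned prediction. The NumPy sketch below applies the same split-and-combine logic to random arrays standing in for U-Net outputs; the function name apply_cfg is hypothetical.

```python
import numpy as np

def apply_cfg(noise_pred, guidance_scale):
    # noise_pred stacks [unconditional, conditional] predictions along axis 0
    noise_pred_uncond, noise_pred_text = np.split(noise_pred, 2, axis=0)
    return noise_pred_uncond + guidance_scale * (noise_pred_text - noise_pred_uncond)

rng = np.random.default_rng(0)
noise_pred = rng.standard_normal((2, 4, 64, 64))  # stand-in for the U-Net output

guided = apply_cfg(noise_pred, guidance_scale=7.5)
print(guided.shape)  # (1, 4, 64, 64)
```

A scale of 1.0 reproduces the conditional prediction and 0.0 the unconditional one; larger values such as 7.5 push the result further toward the prompt.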

Now, all we need is to make a pipeline that will use all the previous functions we have defined.

```python
    def __call__(self,
                 prompt,
                 img,
                 strength=0.8,
                 num_inference_steps=50,
                 guidance_scale=7.5,
                 height=512, width=512):
        prompt_embeds = self.get_prompt_embeds(prompt)
        depth_mask = self.get_depth_mask(img)
        latents = self.denoise_latents(img,
                                       prompt_embeds,
                                       depth_mask,
                                       strength,
                                       num_inference_steps,
                                       guidance_scale,
                                       height, width)
        img = self.decode_img_latents(latents)
        img = self.transform_img(img)
        return img
```

Here, we get the prompt embeddings and the depth mask, and use them to denoise the reference image. The denoised image latents are then decoded and converted into PIL images. Phew, that's all!

Note: An obvious but noteworthy difference between the depth-to-image and text-to-image models is that the U-Net here expects 5 input channels instead of 4: the 4 latent channels plus 1 depth channel. Also, the prompt embeddings have the shape (2, 77, 1024): two sets of embeddings (unconditional and conditional), each with a maximum of 77 tokens embedded in a 1024-dimensional space.
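The shape bookkeeping in the note above can be checked without loading any model. This NumPy sketch uses zero arrays with the shapes described in this tutorial for a 512×512 input: 4-channel latents at 1/8 resolution, a 1-channel depth mask already duplicated for guidance, and (2, 77, 1024) prompt embeddings. The arrays are placeholders, not real model outputs.

```python
import numpy as np

height, width = 512, 512
latent_h, latent_w = height // 8, width // 8  # the VAE downsamples by a factor of 8

latents = np.zeros((1, 4, latent_h, latent_w))     # image latents for one image
depth_mask = np.zeros((2, 1, latent_h, latent_w))  # depth mask, duplicated for guidance
prompt_embeds = np.zeros((2, 77, 1024))            # [unconditional, conditional] text embeddings

# the same two concatenations as in denoise_latents
latent_model_input = np.concatenate([latents] * 2, axis=0)
latent_model_input = np.concatenate([latent_model_input, depth_mask], axis=1)

print(latent_model_input.shape)  # (2, 5, 64, 64)
print(prompt_embeds.shape)       # (2, 77, 1024)
```

With the real model loaded, unet.config.in_channels should report these 5 input channels.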

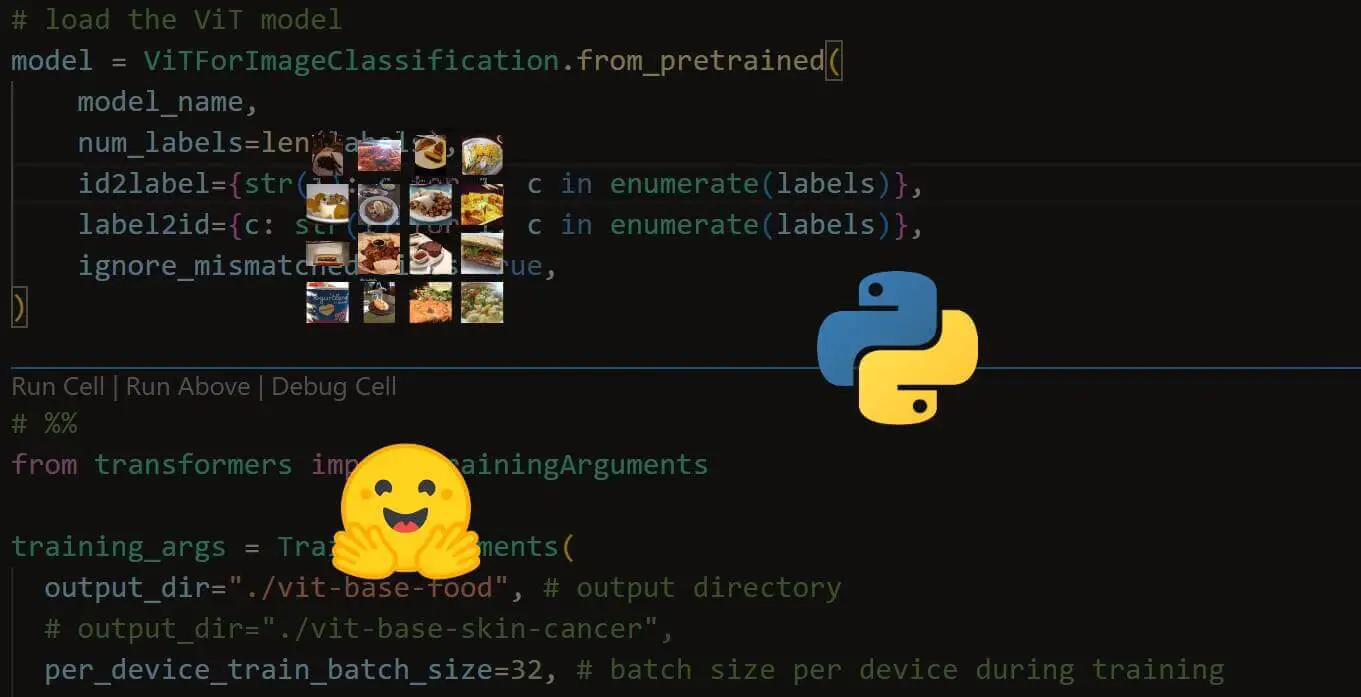

Now let's download each required component from Hugging Face and see if our code works:

```python
device = 'cuda' if torch.cuda.is_available() else 'cpu'

# Load autoencoder
vae = AutoencoderKL.from_pretrained('stabilityai/stable-diffusion-2-depth', subfolder='vae').to(device)

# Load tokenizer and the text encoder
tokenizer = CLIPTokenizer.from_pretrained('stabilityai/stable-diffusion-2-depth', subfolder='tokenizer')
text_encoder = CLIPTextModel.from_pretrained('stabilityai/stable-diffusion-2-depth', subfolder='text_encoder').to(device)

# Load UNet model
unet = UNet2DConditionModel.from_pretrained('stabilityai/stable-diffusion-2-depth', subfolder='unet').to(device)

# Load scheduler; skip_prk_steps=True skips PNDM's Runge-Kutta warm-up steps,
# so slicing the timesteps by strength behaves as expected
scheduler = PNDMScheduler(beta_start=0.00085,
                          beta_end=0.012,
                          beta_schedule='scaled_linear',
                          num_train_timesteps=1000,
                          skip_prk_steps=True,
                          set_alpha_to_one=False,
                          steps_offset=1)

# Load DPT Depth Estimator
depth_estimator = DPTForDepthEstimation.from_pretrained('stabilityai/stable-diffusion-2-depth', subfolder='depth_estimator').to(device)

# Load DPT Feature Extractor
depth_feature_extractor = DPTFeatureExtractor.from_pretrained('stabilityai/stable-diffusion-2-depth', subfolder='feature_extractor')
```

Let us now create an instance of the pipeline.

```python
depth2img = Depth2ImgPipeline(vae,
                              tokenizer,
                              text_encoder,
                              unet,
                              scheduler,
                              depth_feature_extractor,
                              depth_estimator)
```

Great, now we can finally test our model. Let's start with the same example used in the Hugging Face model card.

Example 1:

```python
import os
import urllib.parse as parse

import requests
from PIL import Image

# a function to determine whether a string is a URL or not
def is_url(string):
    try:
        result = parse.urlparse(string)
        return all([result.scheme, result.netloc])
    except ValueError:
        return False

# a function to load an image (converted to RGB, since the VAE expects 3 channels)
def load_image(image_path):
    if is_url(image_path):
        return Image.open(requests.get(image_path, stream=True).raw).convert("RGB")
    elif os.path.exists(image_path):
        return Image.open(image_path).convert("RGB")
    raise FileNotFoundError(f"Image not found: {image_path}")

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
img = load_image(url)
img
```

```python
depth2img("two tigers", img)[0]
```

Let’s try the model on our own images!

Example 2:

```python
img = load_image("image16.png")
img
```

Note: You can get the testing images of this tutorial at this link.

```python
prompt = "A boulder with gemstones falling down a hill"
depth2img(prompt, img)[0]
```

Example 3:

```python
img = load_image("image11.png").resize((512, 512))
img
```

```python
prompt = "A futuristic city on the edge of space, a robotic bionic singularity portal, sci fi, utopian, tim hildebrandt, wayne barlowe, bruce pennington, donato giancola, larry elmore"
depth2img(prompt, img)[0]
```

Note: All the images used from now on were generated with AI as taught in this article. In case you want to generate something similar, I will also mention the prompts for the generated images. You can also download the images from this link and experiment!

Part 2: Using Pipeline from Hugging Face

Now that we know how to implement the model, let's use the 🤗 pipeline (StableDiffusionDepth2ImgPipeline) directly. First, we import the relevant libraries:

```python
import torch
import requests
from PIL import Image
from diffusers import StableDiffusionDepth2ImgPipeline
```

Let us also make an instance of the pipeline.

```python
pipe = StableDiffusionDepth2ImgPipeline.from_pretrained(
    "stabilityai/stable-diffusion-2-depth",
    torch_dtype=torch.float16,
).to("cuda")
```

As a first example, I'm grabbing a real image from Pexels:

```python
img = load_image("https://images.pexels.com/photos/406152/pexels-photo-406152.jpeg?auto=compress&cs=tinysrgb&w=600")
img
```

Let's change the salad content:

```python
prompt = "A salad with tomatoes and guanas chips mixed with ketchup and mustard and bay leaf and guacamole and onions and ketchup and luscious patty with sesame seeds and cashews and onions and ketchup, ethereal,"
pipe(prompt=prompt, image=img, negative_prompt=None, strength=0.7).images[0]
```

Amazing! Let's add a negative prompt:

```python
prompt = "A salad with tomatoes and guanas chips mixed with ketchup and mustard and bay leaf and guacamole and onions and ketchup and luscious patty with sesame seeds and cashews and onions and ketchup, ethereal,"
n_prompt = "ugly, deformed, not detailed, bad architectures, blurred, too much blurred, motion blur"
pipe(prompt=prompt, image=img, negative_prompt=n_prompt, strength=0.7).images[0]
```

Here's another example:

```python
img = load_image("image15.png")
img
```

Here’s an image without any negative prompts:

```python
prompt = "Last remaining old man on earth"
pipe(prompt=prompt, image=img, negative_prompt=None, strength=0.7).images[0]
```

Here’s the same but with an additional negative prompt:

```python
prompt = "Last remaining old man on earth"
n_prompt = "bad anatomy, ugly, wrinkles"
pipe(prompt=prompt, image=img, negative_prompt=n_prompt, strength=0.7).images[0]
```

Let's see how gradually increasing the strength parameter affects the generated image:

```python
img = load_image("image11.png")
img
```

Input:

```python
prompt = "Futuristic city, modern, highly detailed, aesthetic, octane render, 8K, UHD, photoshopped"
n_prompt = "ugly, deformed, not detailed, bad architectures, blurred, too much blurred, motion blur"
pipe(prompt=prompt, image=img, negative_prompt=n_prompt, strength=0.1).images[0]
```

```python
pipe(prompt=prompt, image=img, negative_prompt=n_prompt, strength=0.5).images[0]
```

```python
pipe(prompt=prompt, image=img, negative_prompt=n_prompt, strength=0.9).images[0]
```

```python
pipe(prompt=prompt, image=img, negative_prompt=n_prompt, strength=1).images[0]
```

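As we increase the strength, the output image looks more and more futuristic. Intuitively, the strength parameter controls how strongly the prompt can override the input image: a higher strength noises away more of the original and runs more denoising steps guided by the prompt.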

Conclusion

In this article, we learned how to generate new images similar to an existing one using depth-to-image models. We implemented such a pipeline ourselves and also tried the ready-made implementation in the 🤗 diffusers library.

Learn also: How to Generate Images from Text using Stable Diffusion in Python.

References

- How to Generate Images from Text using Stable Diffusion in Python

- The pipeline_stable_diffusion_depth2img.py script

- stabilityai/stable-diffusion-2-depth model card
