
Google Custom Search Engine (CSE) is a search engine that enables developers to include search in their applications, whether it's a desktop application, a website, or a mobile app.

Tracking your ranking on Google is handy, especially if you own a website and want to see where a page ranks after you publish or edit an article.

In this tutorial, we will make a Python script that gets the page ranking of your domain using the CSE API. Before we dive in, make sure you have the CSE API set up and ready to go. If you don't, please check the tutorial to get started with Custom Search Engine API in Python.

Once you have your search engine up and running, go ahead and install requests so we can make HTTP requests with ease:

```
pip3 install requests
```

Open up a new Python file and follow along. Let's start off by importing modules and defining our variables:

```python
import requests
import urllib.parse as p

# get the API KEY here: https://developers.google.com/custom-search/v1/overview
API_KEY = "<INSERT_YOUR_API_KEY_HERE>"
# get your Search Engine ID on your CSE control panel
SEARCH_ENGINE_ID = "<INSERT_YOUR_SEARCH_ENGINE_ID_HERE>"
# target domain you want to track
target_domain = "thepythoncode.com"
# target keywords
query = "google custom search engine api python"
```

Again, please check this tutorial in which I show you how to get API_KEY and SEARCH_ENGINE_ID. target_domain is the domain you want to search for, and query is the target keyword. For instance, if you want to track stackoverflow.com for the keywords "convert string to int python", put them in target_domain and query, respectively.

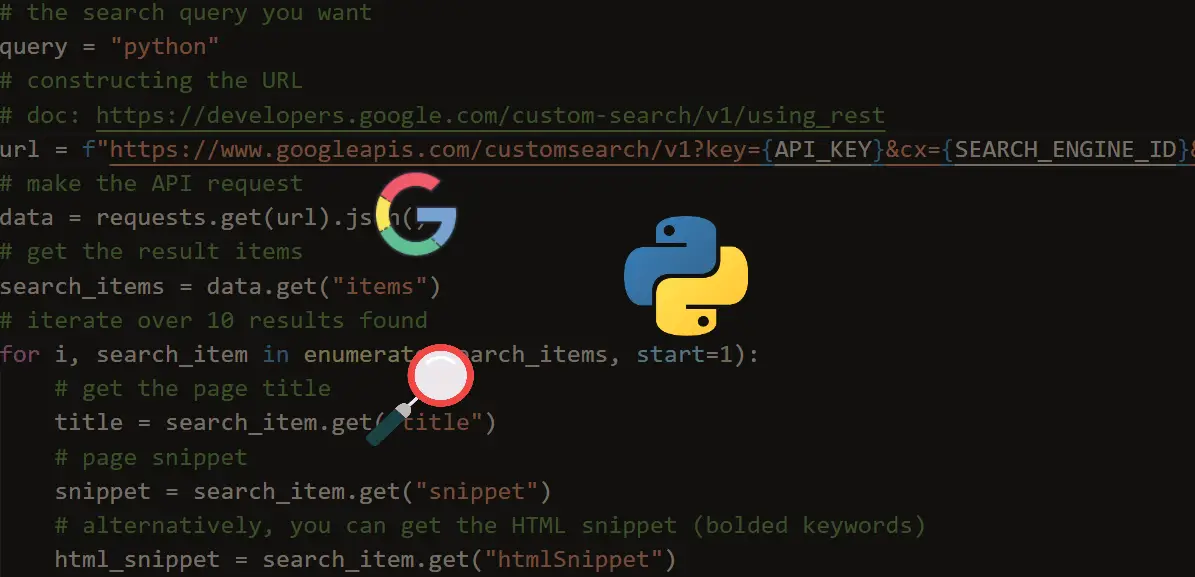

CSE lets us see the first 10 pages of results, and each page has 10 results, so there are 100 URLs in total to check. The code block below iterates over each page and searches for the domain name in the results:

```python
for page in range(1, 11):
    print("[*] Going for page:", page)
    # calculating start: the index of the first result on this page (1, 11, 21, ...)
    start = (page - 1) * 10 + 1
    # make API request; quote_plus() URL-encodes spaces and special characters in the query
    url = f"https://www.googleapis.com/customsearch/v1?key={API_KEY}&cx={SEARCH_ENGINE_ID}&q={p.quote_plus(query)}&start={start}"
    data = requests.get(url).json()
    # "items" is missing when a page has no results (or the request failed)
    search_items = data.get("items", [])
    if not search_items:
        # nothing more to check, so stop instead of requesting the remaining pages
        break
    # a boolean that indicates whether `target_domain` is found
    found = False
    for i, search_item in enumerate(search_items, start=1):
        # get the page title
        title = search_item.get("title")
        # page snippet
        snippet = search_item.get("snippet")
        # alternatively, you can get the HTML snippet (bolded keywords)
        html_snippet = search_item.get("htmlSnippet")
        # extract the page url
        link = search_item.get("link")
        # extract the domain name from the URL
        domain_name = p.urlparse(link).netloc
        if domain_name.endswith(target_domain):
            # get the page rank
            rank = i + start - 1
            print(f"[+] {target_domain} is found on rank #{rank} for keyword: '{query}'")
            print("[+] Title:", title)
            print("[+] Snippet:", snippet)
            print("[+] URL:", link)
            # target domain is found, stop searching
            found = True
            break
    if found:
        break
```

After making the API request for each page, we iterate over the results, extract the domain name with the urllib.parse.urlparse() function, and check whether it matches target_domain. We use the endswith() method instead of double equals (==) so that we don't miss URLs that start with www or another subdomain.
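One caveat with endswith(): it also matches hosts that merely end with the same characters, such as a made-up notthepythoncode.com. Below is a minimal sketch of a stricter check, using a hypothetical helper named matches_domain() that is not part of the original script. It accepts either an exact match or a match preceded by a dot, and it reads the hostname attribute of urlparse()'s result, which (unlike netloc) drops any port number and is lowercased.

```python
from urllib.parse import urlparse


def matches_domain(link: str, target_domain: str) -> bool:
    """Return True if link's host is target_domain or one of its subdomains.

    Hypothetical helper, not part of the original script.
    """
    # .hostname strips any port/user info and lowercases the host; it is None
    # when the URL has no network location, so fall back to an empty string
    host = urlparse(link).hostname or ""
    target = target_domain.lower()
    # require an exact match or a "." boundary, so "www.example.com" matches
    # "example.com" but "notexample.com" does not
    return host == target or host.endswith("." + target)


print(matches_domain("https://www.thepythoncode.com/article/x", "thepythoncode.com"))  # True
print(matches_domain("https://notthepythoncode.com/", "thepythoncode.com"))  # False
```

To use it in the script, you would replace domain_name.endswith(target_domain) in the inner loop with matches_domain(link, target_domain).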

The script is done. Here is the output of my run (after replacing the placeholders with my own API key and Search Engine ID, of course):

```
[*] Going for page: 1
[+] thepythoncode.com is found on rank #3 for keyword: 'google custom search engine api python'
[+] Title: How to Use Google Custom Search Engine API in Python - Python ...
[+] Snippet: 10 results ... Learning how to create your own Google Custom Search Engine and use its
Application Programming Interface (API) in Python.
[+] URL: https://www.thepythoncode.com/article/use-google-custom-search-engine-api-in-python
```

Awesome, this website ranks third for that keyword. Here is another example run, this time with query set to "make a bitly url shortener in python":

```
[*] Going for page: 1
[*] Going for page: 2
[+] thepythoncode.com is found on rank #13 for keyword: 'make a bitly url shortener in python'
[+] Title: How to Make a URL Shortener in Python - Python Code
[+] Snippet: Learn how to use Bitly and Cuttly APIs to shorten long URLs programmatically
using requests library in Python.
[+] URL: https://www.thepythoncode.com/article/make-url-shortener-in-python
```

This time, the domain wasn't on the first page, so the script moved on to the second page. As mentioned earlier, if the domain isn't found, the script keeps going until page 10 and then stops.
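To see where #13 comes from, here is the script's page arithmetic pulled out into two small functions. The names start_index() and overall_rank() are hypothetical; the formulas are the same ones the loop uses (start = (page - 1) * 10 + 1 and rank = i + start - 1). A rank of #13 corresponds to the third result on page 2.

```python
def start_index(page: int, per_page: int = 10) -> int:
    """1-based index of the first result on a given results page."""
    return (page - 1) * per_page + 1


def overall_rank(page: int, position_on_page: int, per_page: int = 10) -> int:
    """Convert (page, 1-based position on that page) into an overall rank."""
    return start_index(page, per_page) + position_on_page - 1


print([start_index(page) for page in range(1, 11)])
# [1, 11, 21, 31, 41, 51, 61, 71, 81, 91]
print(overall_rank(1, 3))  # 3, as in the first run
print(overall_rank(2, 3))  # 13, as in the second run
```

The last page starts at result 91, so the 10 pages together cover the 100 results mentioned earlier.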

Conclusion

Alright, there you have the script. I encourage you to build on it and customize it. For example, make it accept multiple keywords for your site, and send custom alerts that notify you whenever a position changes (goes up or down). Good luck!
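As a starting point for the alerting idea, here is a minimal sketch that compares two runs. It assumes you collect each run's results as a dictionary mapping every keyword to its rank, or to None when the domain isn't found in the results checked. The function name rank_changes() and that dictionary layout are assumptions, not part of the original script. You could persist the dictionary between runs with json.dump() and json.load().

```python
from typing import Dict, List, Optional

# keyword -> rank (None means the domain was not found in the results checked)
Ranks = Dict[str, Optional[int]]


def rank_changes(old: Ranks, new: Ranks) -> List[str]:
    """Describe how each keyword's rank moved between two runs (hypothetical helper)."""
    messages = []
    for keyword, new_rank in new.items():
        old_rank = old.get(keyword)
        if old_rank == new_rank:
            continue  # unchanged, nothing to report
        if old_rank is None:
            messages.append(f"'{keyword}': now ranked #{new_rank}")
        elif new_rank is None:
            messages.append(f"'{keyword}': dropped out of the checked results (was #{old_rank})")
        elif new_rank < old_rank:
            # a smaller number means a better position
            messages.append(f"'{keyword}': up from #{old_rank} to #{new_rank}")
        else:
            messages.append(f"'{keyword}': down from #{old_rank} to #{new_rank}")
    return messages


previous = {"google custom search engine api python": 3, "make a bitly url shortener in python": 13}
# made-up ranks for a later run, for illustration only
current = {"google custom search engine api python": 2, "make a bitly url shortener in python": 13}
for message in rank_changes(previous, current):
    print(message)
# 'google custom search engine api python': up from #3 to #2
```

Each returned message could then be sent through whatever notification channel you prefer, such as email or a chat webhook.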

Please check the full code of this tutorial here.

Learn also: How to Make a URL Shortener in Python.

Happy Coding ♥
