Step up your coding game with AI-powered Code Explainer. Get insights like never before!

Recently, wide attention has grown in the field of computer vision, especially in face recognition, detection, and facial landmark localization. Many significant features can be directly derived from the human face, such as age, gender, and emotions.

Age estimation can be defined as the automatic process of classifying the facial image into the exact age or to a specific age range. Basically, age estimation from the face is still a challenging problem, and guessing an exact age from a single image is very difficult due to factors like makeup, lighting, obstructions, and facial expressions.

Inspired by many ubiquitous applications spread across multiple channels like "AgeBot" on Android, "Age Calculator" on iPhone, we will build a simple Age estimator using OpenCV in Python.

The primary goal of this tutorial is to develop a lightweight command-line-based utility, through Python-based modules and it is intended to describe the steps to automatically detect faces in a static image and to predict the age of the spotted persons using a deep learning-based age detection model.

Please note that if you want to detect both age and gender at the same time, check this tutorial for it.

Learn also: Gender Detection using OpenCV in Python.

The following components come into play:

- OpenCV: is an open-source library for computer vision, machine learning, and image processing. OpenCV supports a wide variety of programming languages like Python, C++, and Java, and it is used for all sorts of image and video analysis like facial detection and recognition, photo editing, optical character recognition, and a whole heap more. Using OpenCV comes with many benefits, among which:

- OpenCV is an open-source library and it is free of cost.

- OpenCV is fast since it is written in C/C++.

- OpenCV supports most Operating Systems such as Windows, Linux, and macOS.

Suggestion: Check our computer vision tutorials for more OpenCV use cases.

- filetype: is a small and dependency-free Python package to infer file and MIME types.

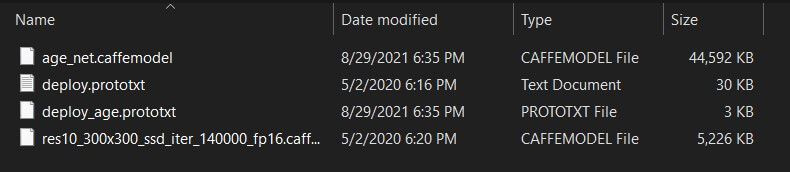

For the purpose of this article, we will use pre-trained Caffe models, one for face detection taken from the face detection tutorial, and another model for age detection. Below is the list of necessary files to include in our project directory:

age_net.caffemodel: It is the pre-trained model weights for age detection. You can download it here.deploy_age.prototxt: is the model architecture for the age detection model (a plain text file with a JSON-like structure containing all the neural network layer’s definitions). Get it here.res10_300x300_ssd_iter_140000_fp16.caffemodel: The pre-trained model weights for face detection, download here.deploy.prototxt.txt: This is the model architecture for the face detection model, download here.

After downloading the 4 necessary files, put them in a folder and call it "weights":

To get started, let's install OpenCV and NumPy:

$ pip install opencv-python numpyOpen up a new Python file:

# Import Libraries

import cv2

import os

import filetype

import numpy as np

# The model architecture

# download from: https://drive.google.com/open?id=1kiusFljZc9QfcIYdU2s7xrtWHTraHwmW

AGE_MODEL = 'weights/deploy_age.prototxt'

# The model pre-trained weights

# download from: https://drive.google.com/open?id=1kWv0AjxGSN0g31OeJa02eBGM0R_jcjIl

AGE_PROTO = 'weights/age_net.caffemodel'

# Each Caffe Model impose the shape of the input image also image preprocessing is required like mean

# substraction to eliminate the effect of illunination changes

MODEL_MEAN_VALUES = (78.4263377603, 87.7689143744, 114.895847746)

# Represent the 8 age classes of this CNN probability layer

AGE_INTERVALS = ['(0, 2)', '(4, 6)', '(8, 12)', '(15, 20)',

'(25, 32)', '(38, 43)', '(48, 53)', '(60, 100)']

# download from: https://raw.githubusercontent.com/opencv/opencv/master/samples/dnn/face_detector/deploy.prototxt

FACE_PROTO = "weights/deploy.prototxt.txt"

# download from: https://raw.githubusercontent.com/opencv/opencv_3rdparty/dnn_samples_face_detector_20180205_fp16/res10_300x300_ssd_iter_140000_fp16.caffemodel

FACE_MODEL = "weights/res10_300x300_ssd_iter_140000_fp16.caffemodel"

# Initialize frame size

frame_width = 1280

frame_height = 720

# load face Caffe model

face_net = cv2.dnn.readNetFromCaffe(FACE_PROTO, FACE_MODEL)

# Load age prediction model

age_net = cv2.dnn.readNetFromCaffe(AGE_MODEL, AGE_PROTO)Here we initialized the paths of our models' weights and architecture, the image size we gonna resize to, and finally loading the models.

The variable AGE_INTERVALS is a list of the age classes of the age detection model.

Next, let's make a function that takes an image as input, and returns a list of detected faces:

def get_faces(frame, confidence_threshold=0.5):

"""Returns the box coordinates of all detected faces"""

# convert the frame into a blob to be ready for NN input

blob = cv2.dnn.blobFromImage(frame, 1.0, (300, 300), (104, 177.0, 123.0))

# set the image as input to the NN

face_net.setInput(blob)

# perform inference and get predictions

output = np.squeeze(face_net.forward())

# initialize the result list

faces = []

# Loop over the faces detected

for i in range(output.shape[0]):

confidence = output[i, 2]

if confidence > confidence_threshold:

box = output[i, 3:7] * np.array([frame_width, frame_height, frame_width, frame_height])

# convert to integers

start_x, start_y, end_x, end_y = box.astype(np.int)

# widen the box a little

start_x, start_y, end_x, end_y = start_x - \

10, start_y - 10, end_x + 10, end_y + 10

start_x = 0 if start_x < 0 else start_x

start_y = 0 if start_y < 0 else start_y

end_x = 0 if end_x < 0 else end_x

end_y = 0 if end_y < 0 else end_y

# append to our list

faces.append((start_x, start_y, end_x, end_y))

return facesMost of the code was grabbed from the face detection tutorial, check it out for more information on how it's done.

Let's make a utility function that displays a given image:

def display_img(title, img):

"""Displays an image on screen and maintains the output until the user presses a key"""

# Display Image on screen

cv2.imshow(title, img)

# Mantain output until user presses a key

cv2.waitKey(0)

# Destroy windows when user presses a key

cv2.destroyAllWindows()Next, below are two utility functions, one for finding the appropriate font size when printing text to the image, and another for dynamically resizing an image:

def get_optimal_font_scale(text, width):

"""Determine the optimal font scale based on the hosting frame width"""

for scale in reversed(range(0, 60, 1)):

textSize = cv2.getTextSize(text, fontFace=cv2.FONT_HERSHEY_DUPLEX, fontScale=scale/10, thickness=1)

new_width = textSize[0][0]

if (new_width <= width):

return scale/10

return 1

# from: https://stackoverflow.com/questions/44650888/resize-an-image-without-distortion-opencv

def image_resize(image, width = None, height = None, inter = cv2.INTER_AREA):

# initialize the dimensions of the image to be resized and

# grab the image size

dim = None

(h, w) = image.shape[:2]

# if both the width and height are None, then return the

# original image

if width is None and height is None:

return image

# check to see if the width is None

if width is None:

# calculate the ratio of the height and construct the

# dimensions

r = height / float(h)

dim = (int(w * r), height)

# otherwise, the height is None

else:

# calculate the ratio of the width and construct the

# dimensions

r = width / float(w)

dim = (width, int(h * r))

# resize the image

return cv2.resize(image, dim, interpolation = inter)Mastering YOLO: Build an Automatic Number Plate Recognition System

Building a real-time automatic number plate recognition system using YOLO and OpenCV library in Python

Download EBookNow we know how to detect faces, the below function is responsible for predicting the age for every face detected:

def predict_age(input_path: str):

"""Predict the age of the faces showing in the image"""

# Read Input Image

img = cv2.imread(input_path)

# Take a copy of the initial image and resize it

frame = img.copy()

if frame.shape[1] > frame_width:

frame = image_resize(frame, width=frame_width)

faces = get_faces(frame)

for i, (start_x, start_y, end_x, end_y) in enumerate(faces):

face_img = frame[start_y: end_y, start_x: end_x]

# image --> Input image to preprocess before passing it through our dnn for classification.

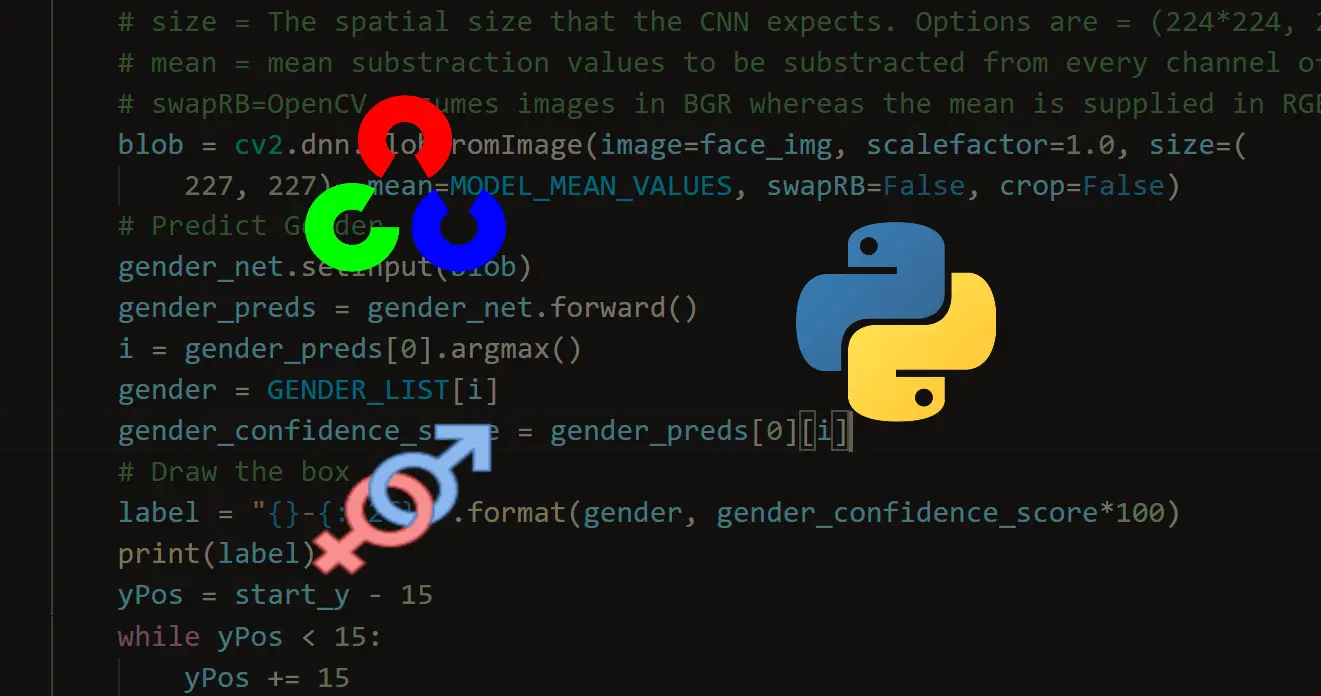

blob = cv2.dnn.blobFromImage(

image=face_img, scalefactor=1.0, size=(227, 227),

mean=MODEL_MEAN_VALUES, swapRB=False

)

# Predict Age

age_net.setInput(blob)

age_preds = age_net.forward()

print("="*30, f"Face {i+1} Prediction Probabilities", "="*30)

for i in range(age_preds[0].shape[0]):

print(f"{AGE_INTERVALS[i]}: {age_preds[0, i]*100:.2f}%")

i = age_preds[0].argmax()

age = AGE_INTERVALS[i]

age_confidence_score = age_preds[0][i]

# Draw the box

label = f"Age:{age} - {age_confidence_score*100:.2f}%"

print(label)

# get the position where to put the text

yPos = start_y - 15

while yPos < 15:

yPos += 15

# write the text into the frame

cv2.putText(frame, label, (start_x, yPos),

cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), thickness=2)

# draw the rectangle around the face

cv2.rectangle(frame, (start_x, start_y), (end_x, end_y), color=(255, 0, 0), thickness=2)

# Display processed image

display_img('Age Estimator', frame)

# save the image if you want

# cv2.imwrite("predicted_age.jpg", frame)Here is the complete process of the above function:

- We read the image using the

cv2.imread()method. - After the image is resized to the appropriate size, we use our

get_faces()function to get all detected faces. - We iterate on each face image, we set it as input to the age prediction model to perform age prediction.

- We print the probabilities of each class, as well as the dominant one.

- A rectangle and text containing age are drawn on the image.

- Finally, we show the final image.

You can always uncomment the cv2.imwrite() line to save the new image.

Now let's write our main code:

if __name__ == '__main__':

# Parsing command line arguments entered by user

import sys

image_path = sys.argv[1]

predict_age(image_path)We simply use Python's built-in sys module for getting user input, as we only need one argument from the user and that's the image path, the argparse module would be overkill.

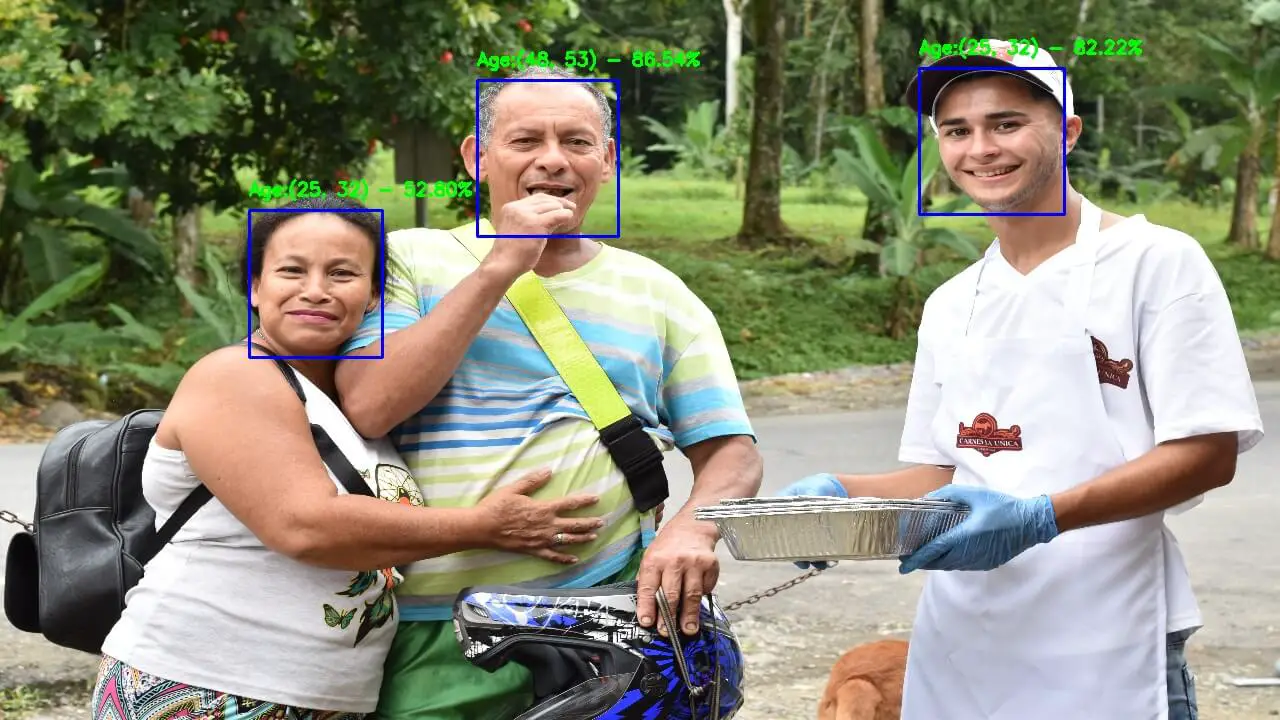

Let's test the code on this stock photo:

$ python predict_age.py 3-people.jpgOutput:

============================== Face 1 Prediction Probabilities ==============================

(0, 2): 0.00%

(4, 6): 0.00%

(8, 12): 31.59%

(15, 20): 0.52%

(25, 32): 52.80%

(38, 43): 14.89%

(48, 53): 0.18%

(60, 100): 0.01%

Age:(25, 32) - 52.80%

============================== Face 2 Prediction Probabilities ==============================

(0, 2): 0.00%

(4, 6): 0.05%

(8, 12): 17.63%

(15, 20): 0.02%

(25, 32): 82.22%

(38, 43): 0.06%

(48, 53): 0.01%

(60, 100): 0.00%

Age:(25, 32) - 82.22%

============================== Face 3 Prediction Probabilities ==============================

(0, 2): 0.05%

(4, 6): 0.03%

(8, 12): 0.07%

(15, 20): 0.00%

(25, 32): 1.05%

(38, 43): 0.96%

(48, 53): 86.54%

(60, 100): 11.31%

Age:(48, 53) - 86.54%And this is the resulting image:

Wrap-up

The age detection model is heavily biased toward the age group [25-32]. Therefore, you may pinpoint this discrepancy while testing this utility.

You can always tweak some parameters to make the model more accurate. For instance, in the get_faces() function, I've widened the box by 10 pixels on all sides; you can always change that to any value you feel good about. Changing frame_width and frame_height is also a way to refine the accuracy of the prediction.

If you want to use this model on your webcam, I made this code just for it.

Check this page for the full code version.

Learn also: How to Blur Faces in Images using OpenCV in Python.

Happy coding ♥

Want to code smarter? Our Python Code Assistant is waiting to help you. Try it now!

View Full Code Understand My Code

Got a coding query or need some guidance before you comment? Check out this Python Code Assistant for expert advice and handy tips. It's like having a coding tutor right in your fingertips!